Chapter 2 An ACER ConQuest Tutorial

This section of the manual contains 13 sample ACER ConQuest analyses. They range from the traditional analysis of a multiple choice test through to such advanced applications of ACER ConQuest as the estimation of multidimensional Rasch models and latent regression models. Our purpose here is to describe how to use ACER ConQuest to address particular problems; it is not a tutorial on the underlying methodology. For those interested in developing a greater familiarity with the mathematical and statistical methods that ACER ConQuest employs, the sample analyses in the tutorials should be supplemented by reading the material that is cited in the discussions.

In each sample analysis, the command statements used by ACER ConQuest are explained. For a comprehensive description of each command, see Chapter 4, ACER ConQuest Command Reference.

The files used in the sample analyses can be found on the ACER Notes and Tutorials website.

Before beginning the tutorials, this section starts with a description of the basic elements of the ACER ConQuest user interfaces.

2.1 The ACER ConQuest User Interfaces

ACER ConQuest is available with both a graphical user interface (GUI) and a simple command line or console interface (CMD). The ACER ConQuest command statement syntax (described in Chapter 4, ACER ConQuest Command Reference) used by the GUI and the console versions is identical. The tutorials are presented assuming use of the GUI version of ACER ConQuest.

Both the console version of the program and the GUI version are compatible with Microsoft Windows. The console version of the program is available for Mac OSX. There is no GUI version for Mac OSX.

The console version runs faster than the GUI version and may be preferred for larger and more complex analyses. The GUI version is more user friendly and provides plotting functions that are not available with the console version.

The two interfaces are described below.

2.1.1 GUI Version

Figure 2.1 shows the screen when the GUI version of ACER ConQuest is launched (double-click on the file ConQuestGUI.exe). You can now proceed in one of three ways.

- Open an existing command file (

File\(\rightarrow\)Open).2 - Open a previously saved ACER ConQuest system file (

File\(\rightarrow\)Get System File) - Create a new command file (

File\(\rightarrow\)New).

Figure 2.1: The ACER ConQuest Screen at Startup

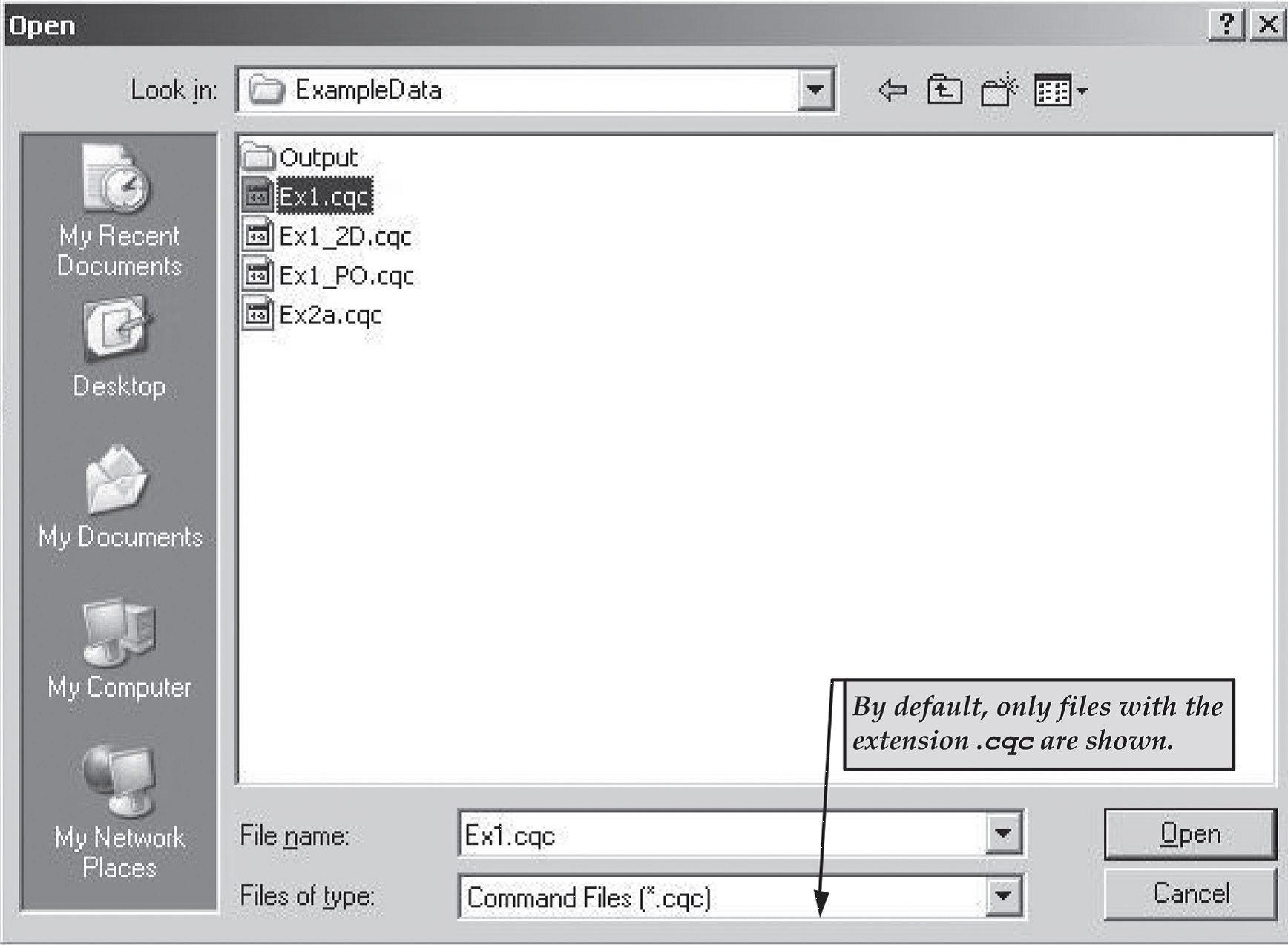

If you choose to open an existing command file, a standard Windows File/Open dialog box will appear (see Figure 2.2).

Locate the file you want to open.

Note that, by default, the list of files will be restricted to those with the extension .cqc, which is the default extension for ACER ConQuest command files.

To list other files, change the file type to All Files.

Figure 2.2: File/Open Dialog Box

If you choose to read a previously created system file, a standard Windows File/Open dialog box will appear.

Locate the file you want to open.

Note that, by default, the list of files will be restricted to those with the extension .CQS, which is the default extension for ACER ConQuest system files.

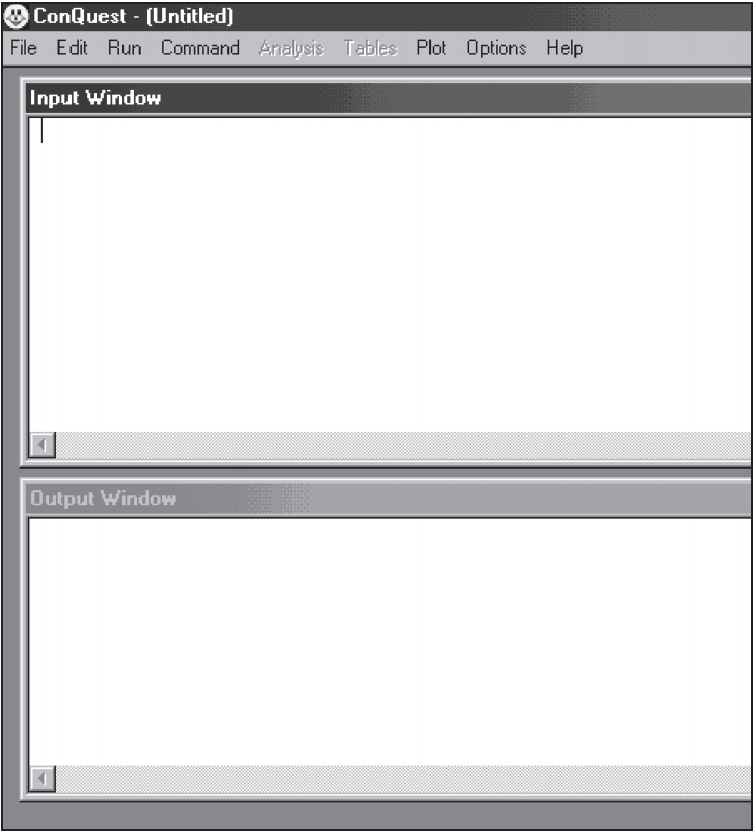

If you choose to create a new command file, or after you have selected an existing command file or system file from the File/Open dialog box, two windows will be created: an input window and an output window. These windows are illustrated in Figure 2.3.

A status bar reporting on the current activity of the program is located at the bottom of the ACER ConQuest window.

Figure 2.3: The ACER ConQuest Input and Output Windows

2.1.1.1 The Input Window

The input window is an editing window. If you have opened an existing ACER ConQuest command file, it will contain the file. If you have opened a system file or selected new, the input window will be blank.

Type or edit the ACER ConQuest command statements in the input window.

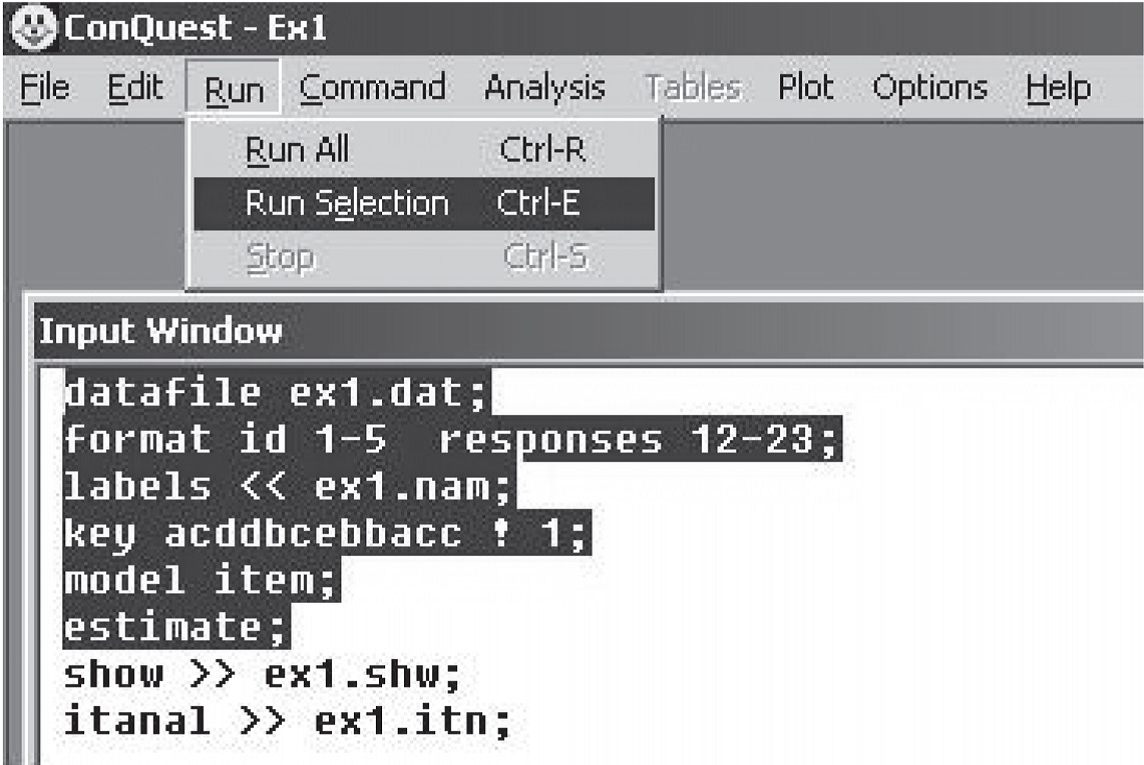

To start execution of the command statements, choose Run\(\rightarrow\)Run All, if you wish to run all of the commands in the input window.

To execute a subset of the commands then highlight the desired commands, choose Run\(\rightarrow\)Run Selection.

ACER ConQuest will execute the command statements that are selected.

This is illustrated in Figure 2.4.

If nothing is highlighted, then ACER ConQuest will not execute any commands.

Figure 2.4: Running a Selection

2.1.1.2 The Output Window

The output window displays the results and the progress of the execution of the command statements. As statements are executed by ACER ConQuest, they are echoed in the output window. When ACER ConQuest is estimating item response models, progress information is displayed in the output window. Certain ACER ConQuest statements produce displays of the results of analyses. Unless these results are redirected to a file, they will be shown in the output window.

The output window has a limited amount of buffer space.

When the buffer is full, material from the top of the buffer will be deleted.

The contents of the buffer can be saved or edited at any time that ACER ConQuest is not busy undertaking computations.

The output is cleared whenever Run\(\rightarrow\)Run All is chosen to execute all statements in the input window, whenever ACER ConQuest executes a reset statement, and whenever Command\(\rightarrow\)Clear Output is selected.

2.1.2 Console Version

The console version of ACER ConQuest provides a command line interface that does not draw upon the GUI features of the host operating system. This version of ACER ConQuest is substantially faster than the GUI version but is more limited in its functionality.

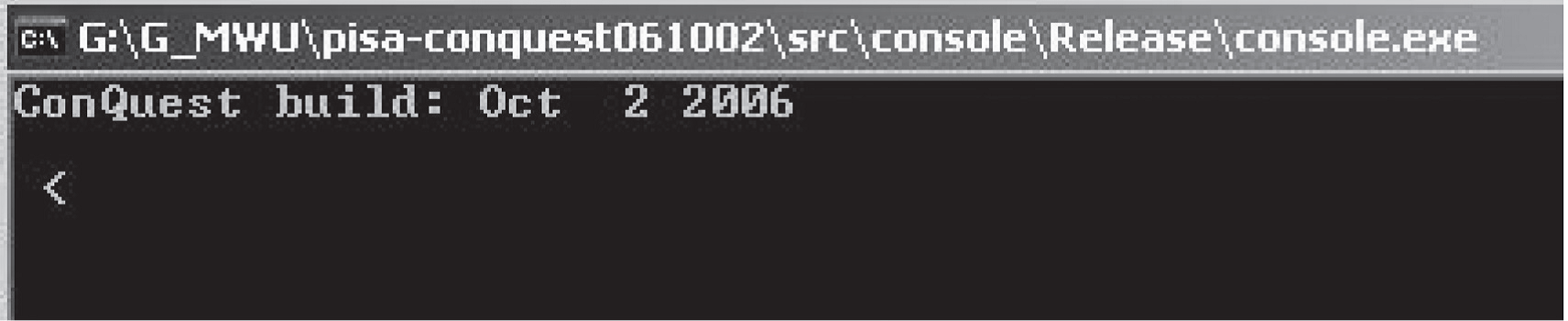

Figure 2.5 shows the screen when the console version of ACER ConQuest is started (double-click on the file ConQuestConsole.exe).

The less than character (<) is the ACER ConQuest prompt.

When the ACER ConQuest prompt is displayed, any appropriate ACER ConQuest statement can be entered.

As with any command line interface, ACER ConQuest attempts to execute the command statement when you press the Enter key.

If you have not yet entered a semi-colon (;) to indicate the end of the statement, the ACER ConQuest prompt changes to a plus sign (+) to indicate that the statement is continuing on a new line.

Figure 2.5: The Console ACER ConQuest Screen at Startup

The syntax of ACER ConQuest commands is described in section 4.1, and the remaining sections in this section illustrate various sets of command statements.

To exit from the ACER ConQuest program, enter the statement quit; at the ACER ConQuest prompt.

On many occasions, a file containing a set of ACER ConQuest statements (an ACER ConQuest command file) will be prepared with a text editor, and you will want ACER ConQuest to run the set of statements that are in the file.

For example if the file is called myfile.cqc, then the statements in the file can be executed in two ways.

In the first method, start ACER ConQuest (see the Installation Instructions if you don’t know how to start ACER ConQuest) and then type the command

submit myfile.cqc;A second method, which will work on operating systems that allow ACER ConQuest to be launched from a command line interface, is to provide the command file as a command line argument. That is, launch ACER ConQuest using

ConQuestCMD myfile.cqc;

With either method, after you press the Enter key, ACER ConQuest will proceed to execute each statement in the file. As statements are executed, they will be echoed on the screen. If you have requested displays of the analysis results and have not redirected them to a file, they will be displayed on the screen.

ACER ConQuest system files can be exchanged between the console and GUI versions. For large analyses it may be advantageous to fit the model with the console version, save a system file and then read that system file with the GUI version, for the purpose of preparing output plots and other displays.

2.1.3 Temporary Files

While ACER ConQuest is running, a number of temporary files will be created. These files have prefix “laji” (e.g., laji000.1, laji002.1, etc.). ACER ConQuest removes these files before closing the program. If these temporary files remain when ACER ConQuest is not running, you should remove them, as these files are typically large in size.

2.2 A Dichotomously Scored Multiple Choice Test

Multiple choice items are perhaps the most widely applied tool in testing. This is particularly true in the case of the testing of the cognitive abilities or achievements of a group of students.3 The analysis of the basic properties of dichotomous items and of tests containing a set of dichotomous items is the simplest application of ACER ConQuest. This first sample analysis, shows how ACER ConQuest can be used to fit Rasch’s simple logistic model to data gathered with a multiple choice test. ACER ConQuest can also generate a range of traditional test item statistics.4

2.2.1 Required files

The files used in this sample analysis are:

| filename | content |

|---|---|

| ex1.cqc | The command statements. |

| ex1_dat.txt | The data. |

| ex1_lab.txt | The variable labels for the items on the multiple choice test. |

| ex1_shw.txt | The results of the Rasch analysis. |

| ex1_itn.txt | The results of the traditional item analyses. |

(The last two files are created when the command file is executed.)

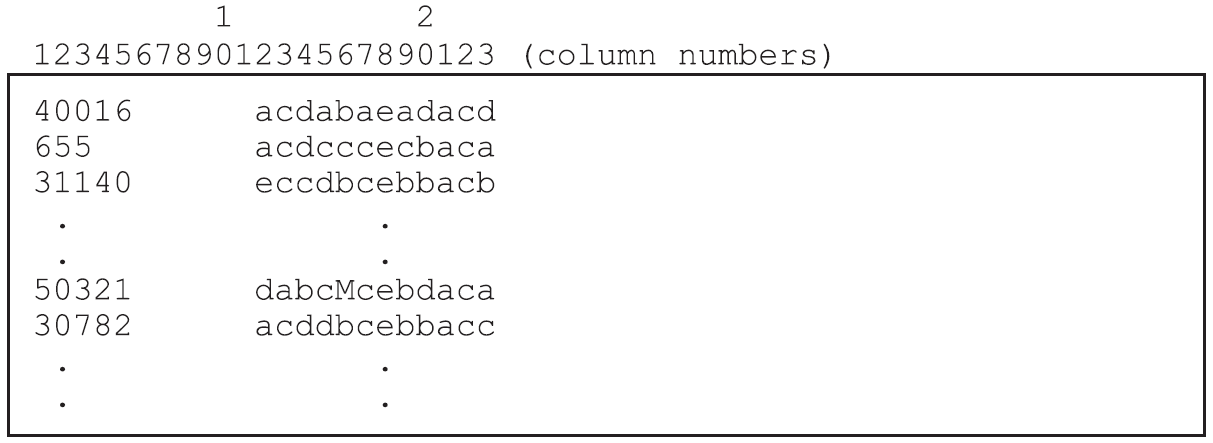

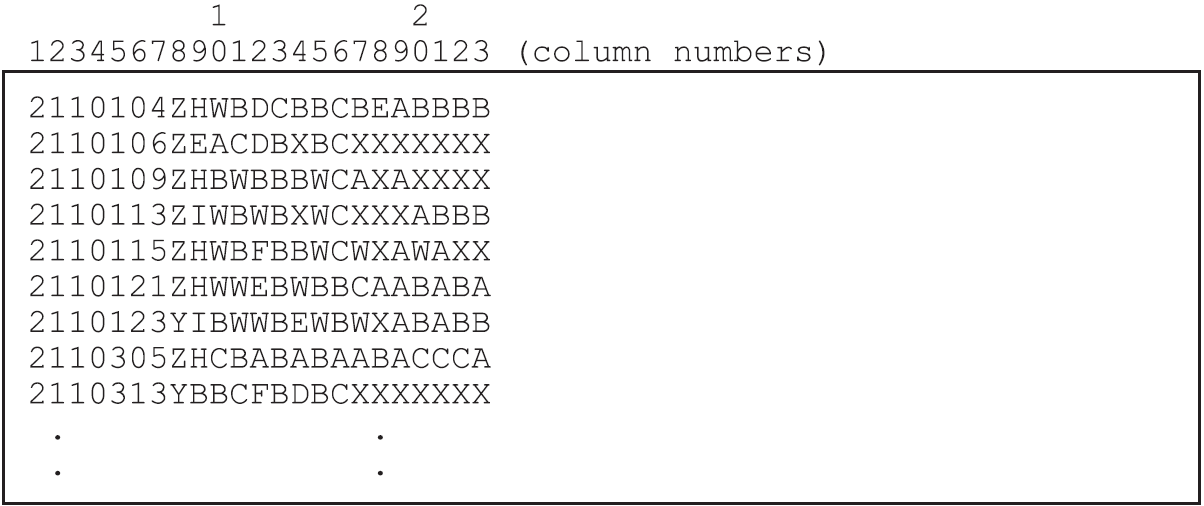

The data used in this tutorial comes from a 12-item multiple-choice test that was administered to 1000 students.

The data have been entered into the file ex1_dat.txt, using one line per student.

A unique student identification code has been entered in columns 1 through 5, and the students’ responses to each of the items have been recorded in columns 12 through 23.

The response to each item has been allocated one column; and the codes a, b, c and d have been used to indicate which alternative the student chose for each item.

If a student failed to respond to an item, an M has been entered into the data file.

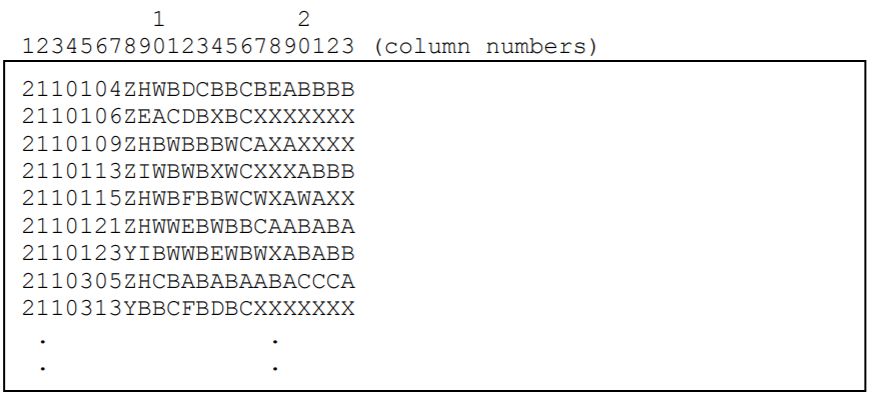

An extract from the data file is shown in Figure 2.6.

Figure 2.6: Extract from the Data File ex1\\_dat.txt. Each column of the data file is labelled so that it can be easily referred to in the text. The actual ACER ConQuest data file does not have any column labels.

2.2.2 Syntax

In this sample analysis, the Rasch (1980) simple logistic model will be fitted to the data, and traditional item analysis statistics are generated.

ex1.cqc is the command file used in this tutorial, and is shown in the code box below.

A list explaining each line of syntax follows.

The syntax for ACER ConQuest commands is presented in section 4.1.

ex1.cqc:

datafile ex1_dat.txt;

format id 1-5 responses 12-23;

labels << ex1_lab.txt;

key acddbcebbacc ! 1;

model item;

estimate;

show >> results/ex1_shw.txt;

itanal >> results/ex1_itn.txt;

/* rout option is for use in R using conquestr: */

plot icc ! rout=results/icc/ex1_;

plot mcc! legend=yes;Line 1

Thedatafilestatement indicates the name and location of the data file. Any file name that is valid for the operating system you are using can be used here.Line 2

Theformatstatement describes the layout of the data in the fileex1_dat.txt. Thisformatstatement indicates that a field that will be calledidis located in columns 1 through 5 and that the responses to the items are in columns 12 through 23 of the data file. Everyformatstatement must give the location of the responses. In fact, the explicit variableresponsesmust appear in theformatstatement or ACER ConQuest will not run. In this particular sample analysis, the responses are those made by the students to the multiple choice items; and, by default,itemwill be the implicit variable name that is used to indicate these responses. The levels of theitemvariable (that is, item 1, item 2 and so on) are implicitly identified through their location within the set of responses (called the response block) in theformatstatement; thus, in this sample analysis, the data for item 1 is located in column 12, the data for item 2 is in column 13, and so on.EXTENSION: The item numbers are determined by the order in which the column locations are set out in the response block. If you use the following:

format id 1-5 responses 12-23;

item 1 will be read from column 12. If you use:

format id 1-5 responses 23,12-22;

item 1 will be read from column 23TIP: In some testing contexts, it may be more informative to refer to the response variable as something other than

item. Using the variable nametaskorquestionmay lead to output that is better documented. Altering the name of the response variable is easy. If you want to use the nametasksrather thanitem, simply add an option to theformatstatement as follows:

format id 1-5 responses 12-23 ! tasks(12);

The variable nametasksmust then be used to indicate the response variable in other ACER ConQuest commands. For example in themodelstatement in Line 5.Line 3

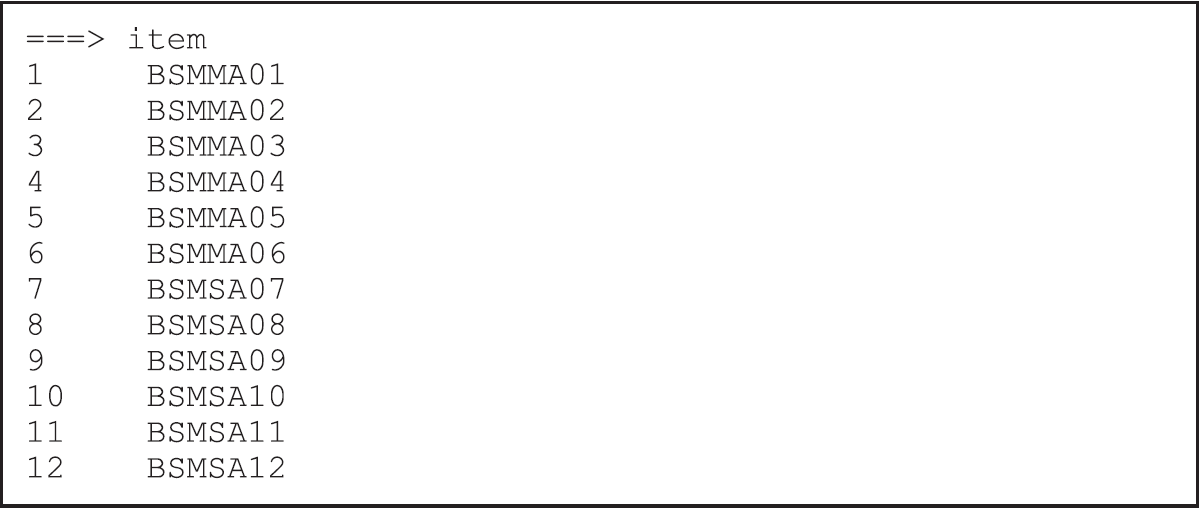

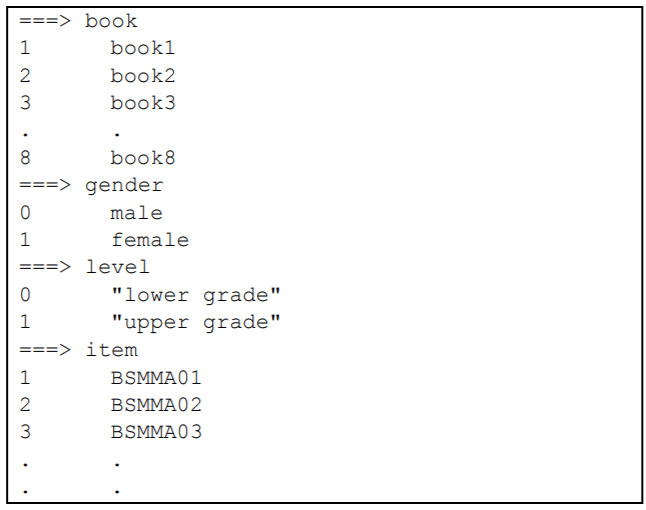

Thelabelsstatement indicates that a set of labels for the variables (in this case, the items) is to be read from the fileex1_lab.txt. An extract ofex1_lab.txtis shown in Figure 2.7. (This file must be text only; if you create or edit the file with a word processor, make sure that you save it using the text only option.) The first line of the file contains the special symbol===>(a string of three equals signs and a greater than sign) followed by one or more spaces and then the name of the variable to which the labels are to apply (in this case,item). The subsequent lines contain two pieces of information separated by one or more spaces. The first value on each line is the level of the variable (in this case,item) to which a label is to be attached, and the second value is the label. If a label includes spaces, then it must be enclosed in double quotation marks (“ “). In this sample analysis, the label for item 1 isBSMMA01, the label for item 2 isBSMMA02, and so on.TIP: Labels are not required by ACER ConQuest, but they improve the readability of any ACER ConQuest printout, so their use is strongly recommended.

Figure 2.7: Contents of the Label File ex1_lab.txt.

Line 4

Thekeystatement identifies the correct response for each of the multiple choice test items. In this case, the correct answer for item 1 isa, the correct answer for item 2 isc, the correct answer for item 3 isd, and so on. The length of the argument in thekeystatement is 12 characters, which is the length of the response block given in theformatstatement.If a

keystatement is provided, ACER ConQuest will recode the data so that any response a to item 1 will be recoded to the value given in the key statement option (in this case,1). All other responses to item 1 will be recoded to the value of thekey_default(in this case, 0). Similarly, any responsecto item 2 will be recoded to1, while all other responses to item 2 will be recoded to 0; and so on.Line 5

Themodelstatement must be provided before any traditional or item response analyses can be undertaken. When undertaking simple analyses of multiplechoice tests, as in this example, the argument for themodelstatement is the name of the variable that identifies the response data that are to be analysed (in this case,item).Line 6

Theestimatestatement initiates the estimation of the item response model.NOTE: The order in which commands can be entered into ACER ConQuest is not fixed. There are, however, logical constraints on the ordering. For example,

showstatements cannot precede theestimatestatement, which in turn cannot precede themodel,formatordatafilestatements.Line 7

Theshowstatement produces a sequence of tables that summarise the results of fitting the item response model. In this case, the redirection symbol (>>) is used so that the results will be written to the fileex1_shw.txtin your current directory. If redirection is omitted, the results will be displayed on the console (or in the output window for the GUI version).Line 8

Theitanalstatement produces a display of the results of a traditional item analysis. As with theshowstatement, the results are redirected to a file (in this case,ex1_itn.txt).Line 10

ThePlot iccstatement will produce 12 item characteristic curve plots, one for each item. The plots will compare the modelled item characteristic curves with the empirical item characteristic curves. Note that this command is not available in the console version of ACER ConQuest.Line 11

ThePlot mccstatement will produce 12 category characteristic curve plots, one for each item. The plots will compare the modelled item characteristic curves with the empirical item characteristic curves (for correct answers) and will also show the behavior of the distractors. Note that this command is not available in the console version of ACER ConQuest.

2.2.3 Running the Multiple Choice Sample Analysis

To run this sample analysis, start the GUI version. Open the file ex1.cqc and choose Run\(\rightarrow\)Run All.

Alternatively, you can launch the console version of ACER ConQuest, by typing the command5 (on Windows) ConQuestConsole.exe ex1.cqc.

ACER ConQuest will begin executing the statements that are in the file ex1.cqc and

as they are executed, they will be echoed on the screen (or output window).

When ACER ConQuest reaches the estimate statement, it will begin fitting Rasch’s

simple logistic model to the data, and as it does so it will report on the progress

of the estimation.

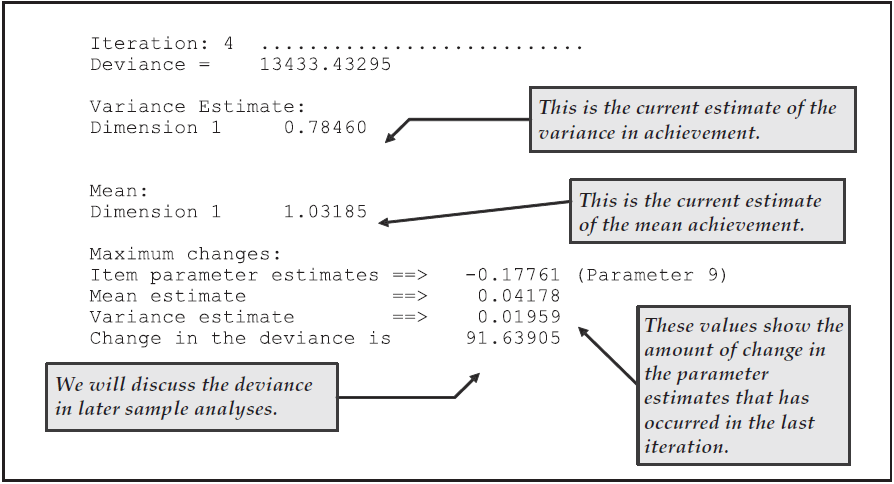

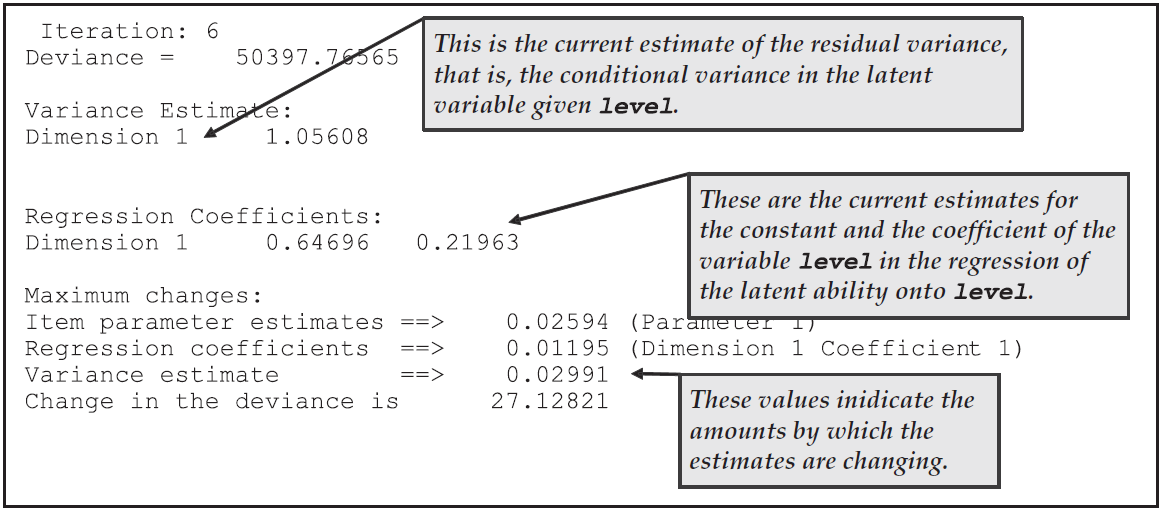

Figure 2.8 is an extract of the information that is provided during the

estimation (in this case, the changes in the estimates after four iterations).

Figure 2.8: Reported Information on the Progress of Estimation

After the estimation is completed, the two statements that produce text output (show and itanal) will be processed and then, in the case of the GUI version two sets of 12 plots will be produced.

In this case, the show statement will produce all six of its tables.

All of these tables will be in the file ex1_shw.txt.

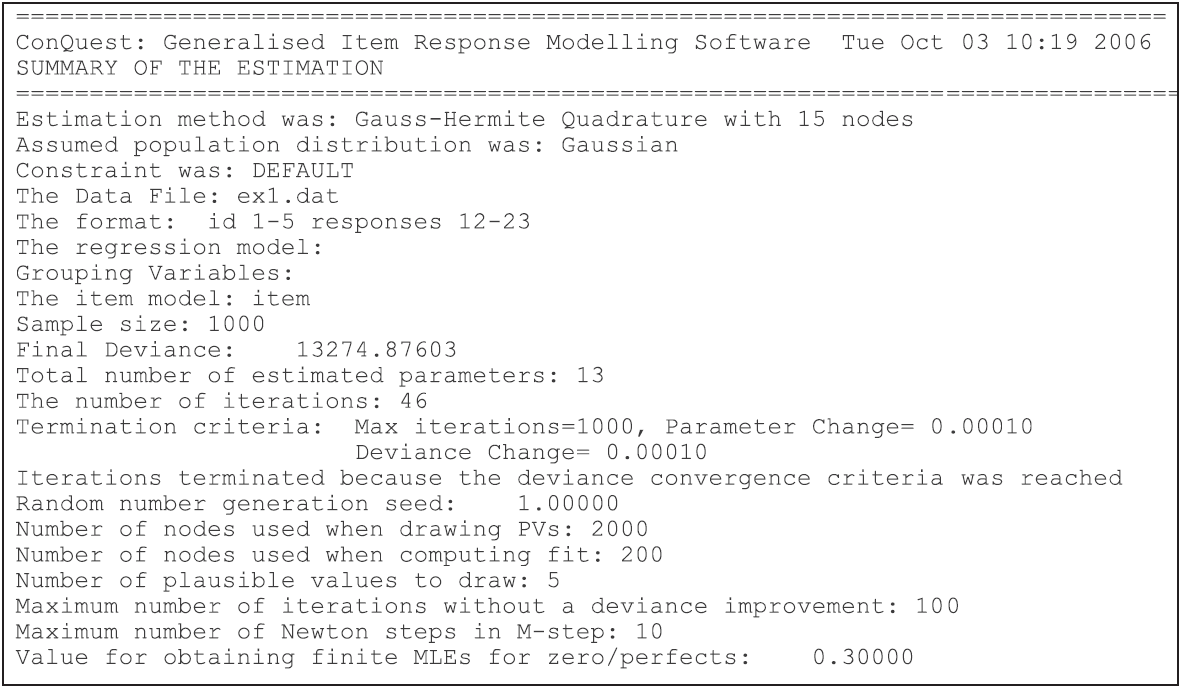

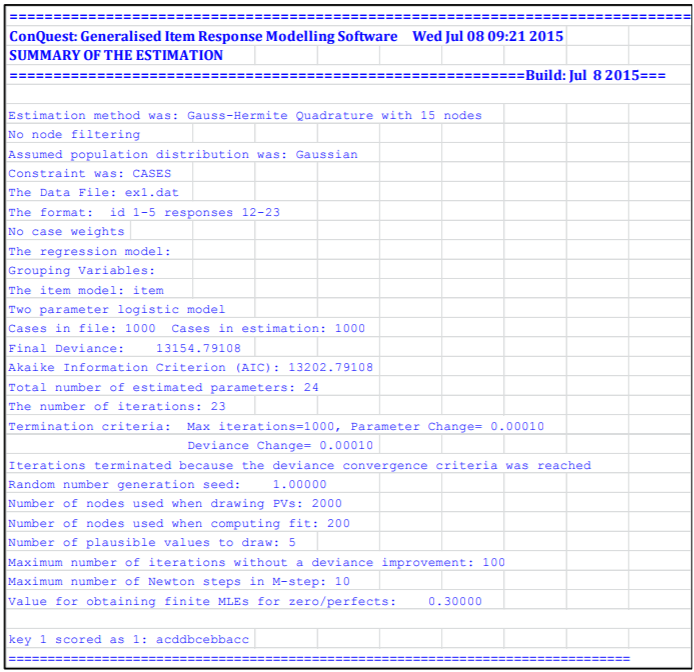

The contents of the first table are shown in Figure 2.9.

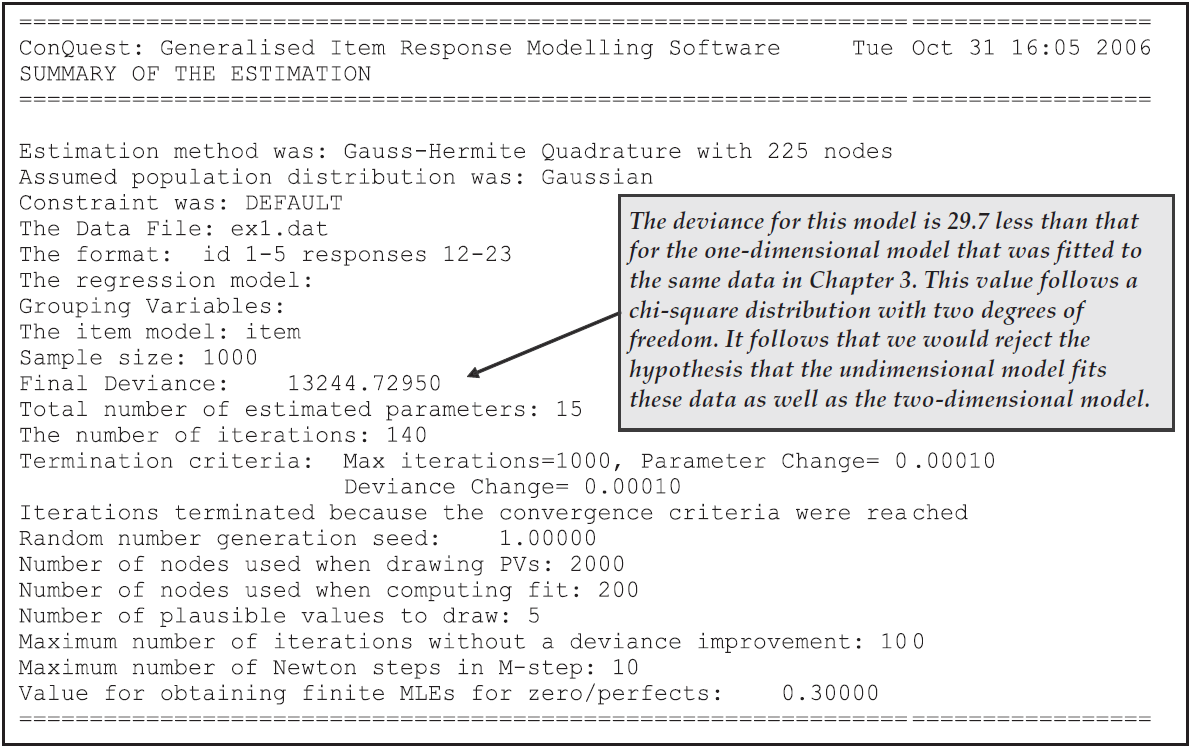

This table is provided for cross-referencing and record-keeping purposes. It indicates the data set that was analysed, the format that was used to read the data, the model that was requested and the sample size. It also provides the number of parameters that were estimated, the number of iterations that the estimation took, and the reason for the termination of the estimation. The deviance is a statistic that indicates how well the item response model has fit the data; it will be discussed further in future sample analyses.

As Figure 2.9 shows, in this analysis 13 parameters were estimated. They are: (a) the mean and variance of the latent achievement that is being measured by these items; and (b) 11 item difficulty parameters. Following the usual convention of Rasch modelling, the mean of the item difficulty parameters has been made zero, so that a total of 11 parameters is required to describe the difficulties of the 12 items.

Figure 2.9: Summary Table

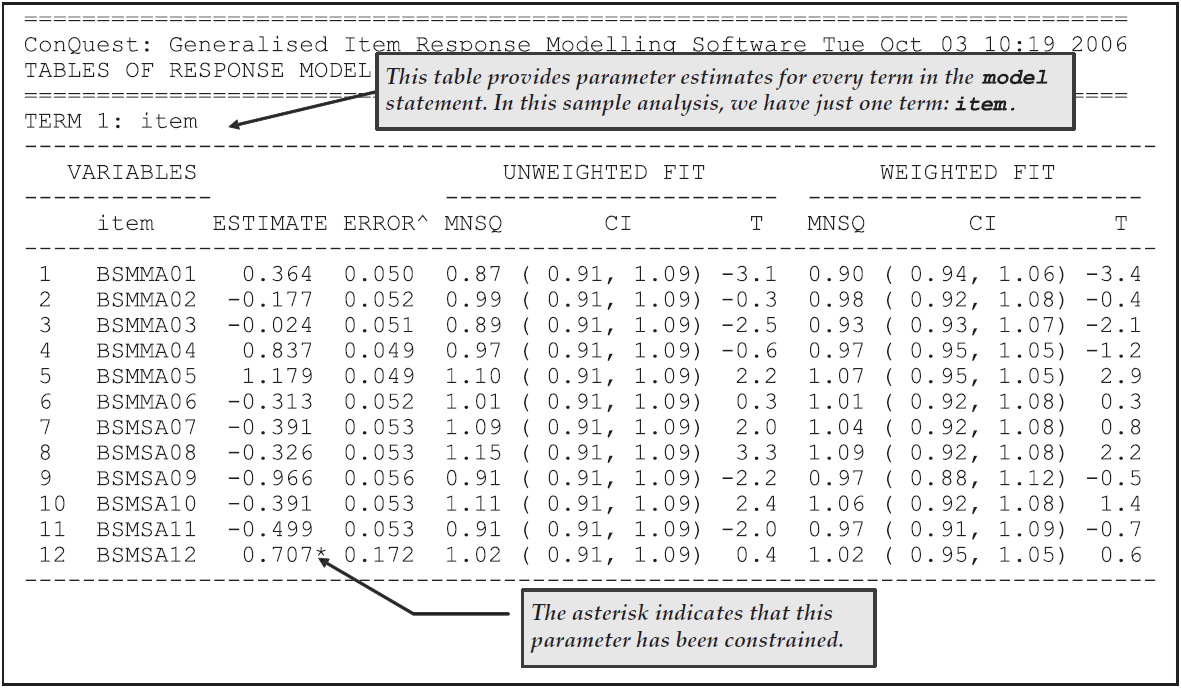

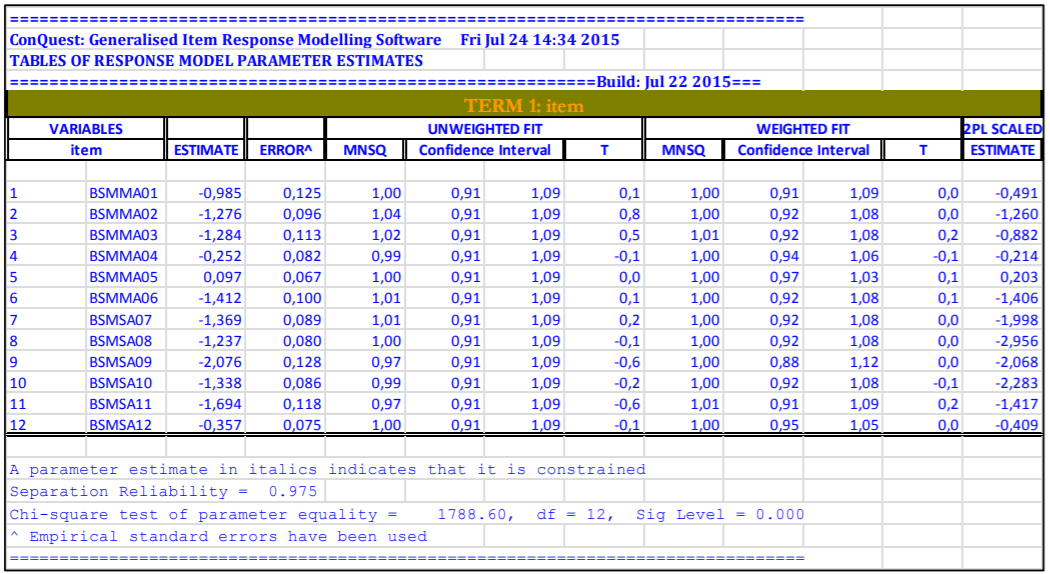

Figure 2.10 shows the second table from the file ex1_shw.txt.

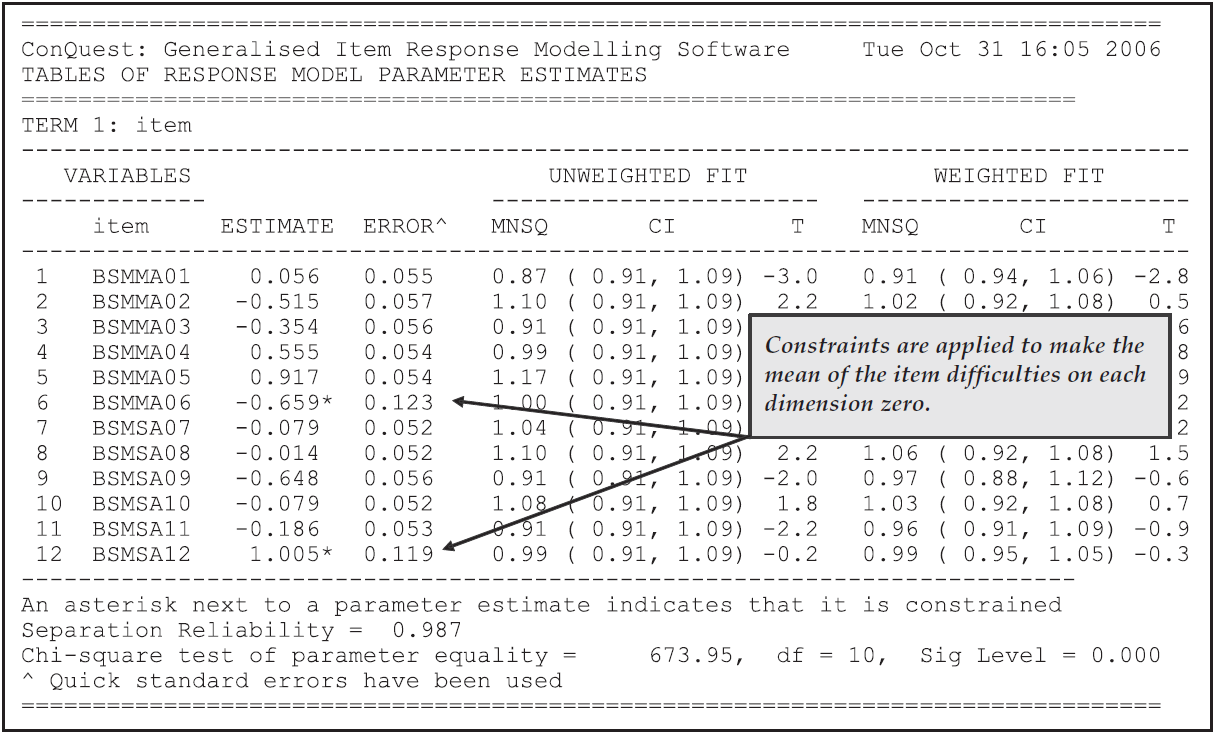

This table gives the parameter estimates for each of the test items along with their standard errors and some diagnostics tests of fit.

The estimation algorithm and the methods used for computing standard errors and fit statistics are discussed in Chapter 3.

In brief, the item parameter estimates are marginal maximum likelihood estimates obtained using an EM algorithm, the standard errors are asymptotic estimates given by the inverse of the hessian, and the fit statistics are residual-based indices that are similar in conception and purpose to the weighted and unweighted fit statistics that were developed by Wright & Stone (1979) and Wright & Masters (1982) for Rasch’s simple logistic model and the partial credit model respectively.

For the MNSQ fit statistics we provide a ninety-five percent confidence interval for the expected value of the MNSQ (which under the null hypothesis is 1.0). If the MNSQ fit statistic lies outside that interval then we reject the null hypothesis that the data conforms to the model. If the MNSQ fit statistic lies outside the interval then the corresponding T statistics will have an absolute value that exceeds 2.0.

At the bottom of the table an item separation reliability and chi-squared test of parameter equality are reported. The separation reliability is as described in Wright & Stone (1979). This indicates how well the item parameters are separated; it has a maximum of one and a minimum of zero. This value is typically high and increases with increasing sample sizes. The null hypothesis for the chi-square test is equality of the set of parameters. In this case equality of all of the parameters is rejected because the chi-square is significant. This test is not useful here, but will be of use in other contexts, where parameter equivalence (e.g., rater severity) is of concern.

Figure 2.10: The Item Parameter Estimates

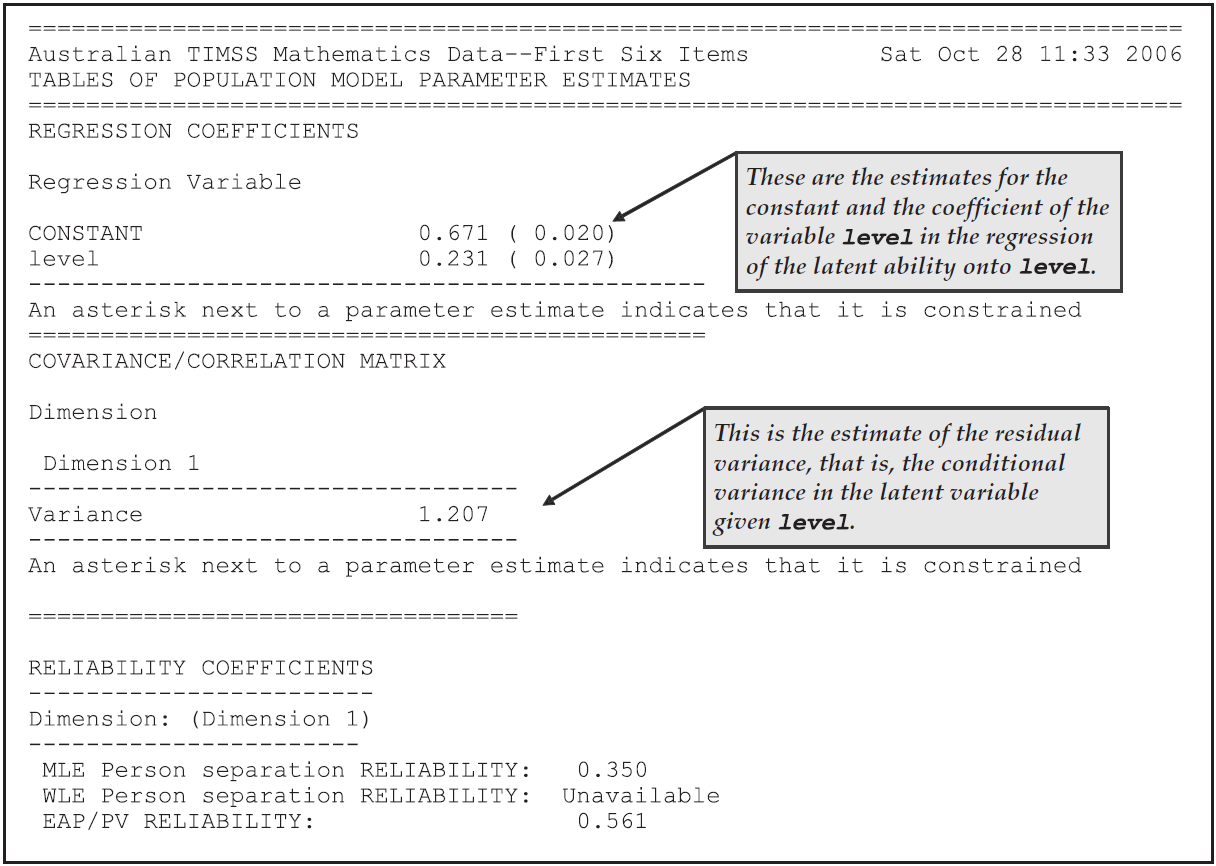

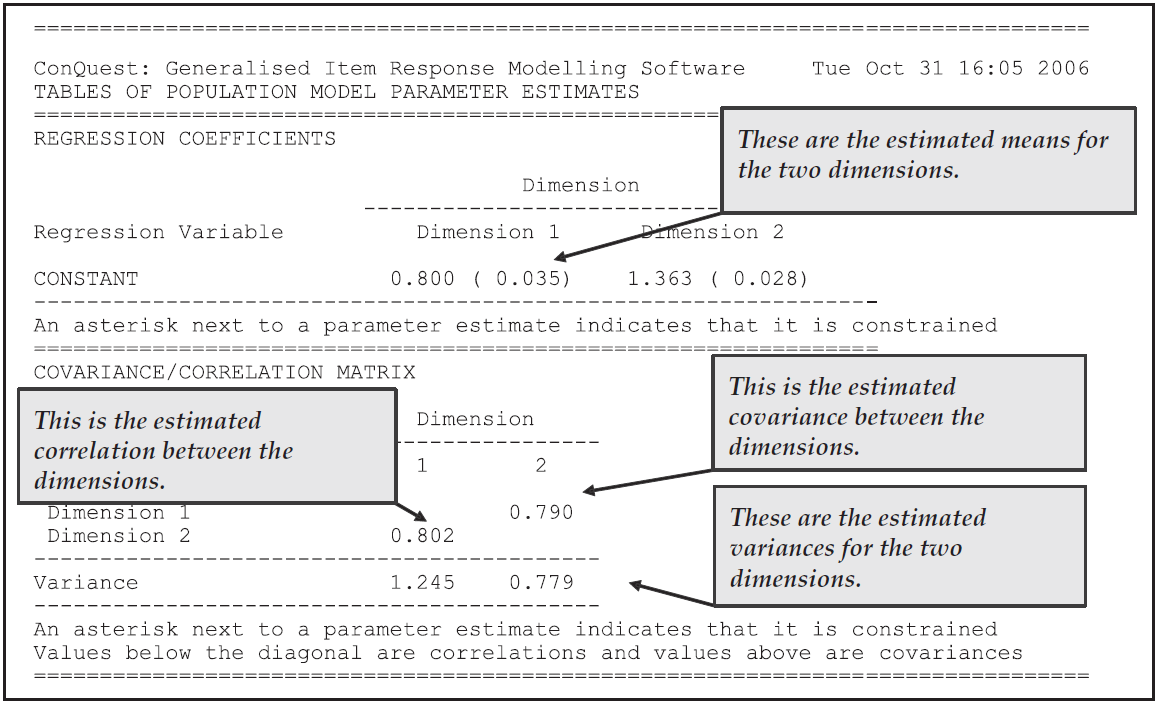

The third table in the show statement’s output (not shown for the sake of brevity) gives the estimates of the population parameters.

In this case, these are simply estimates of the mean of the latent ability distribution and of the variance of that distribution.

In this case, the mean is estimated as 1.070, and the variance is estimated as 0.866.

Extension: In Rasch modelling, it is usual to identify the model by setting the mean of the item difficulty parameters to zero. This is also the default behaviour for ACER ConQuest, which automatically sets the value of the ‘last’ item parameter to ensure an average of zero. In ACER ConQuest, however, you can, as an alternative, choose to set the mean of the latent ability distribution to zero. To do this, use the set command as follows:

set lconstraints=cases;

If you want to use a different item as the constraining item, then you can read the items in a different order. For example:

format id 1-5 responses 12-15, 17-23, 16;

would result in the constraint being applied to the item in column 16. But be aware, it will now be called item 12, not item 5, as it is the twelfth item in the response block.

This table also provides a set of reliability indices.

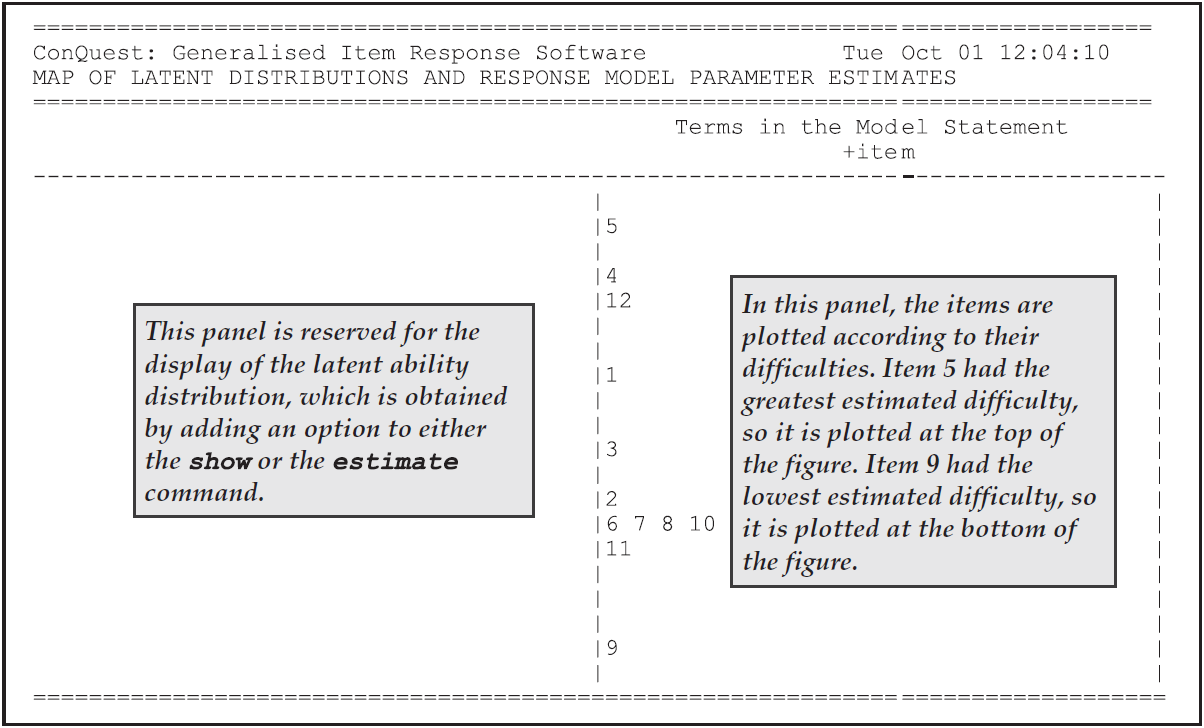

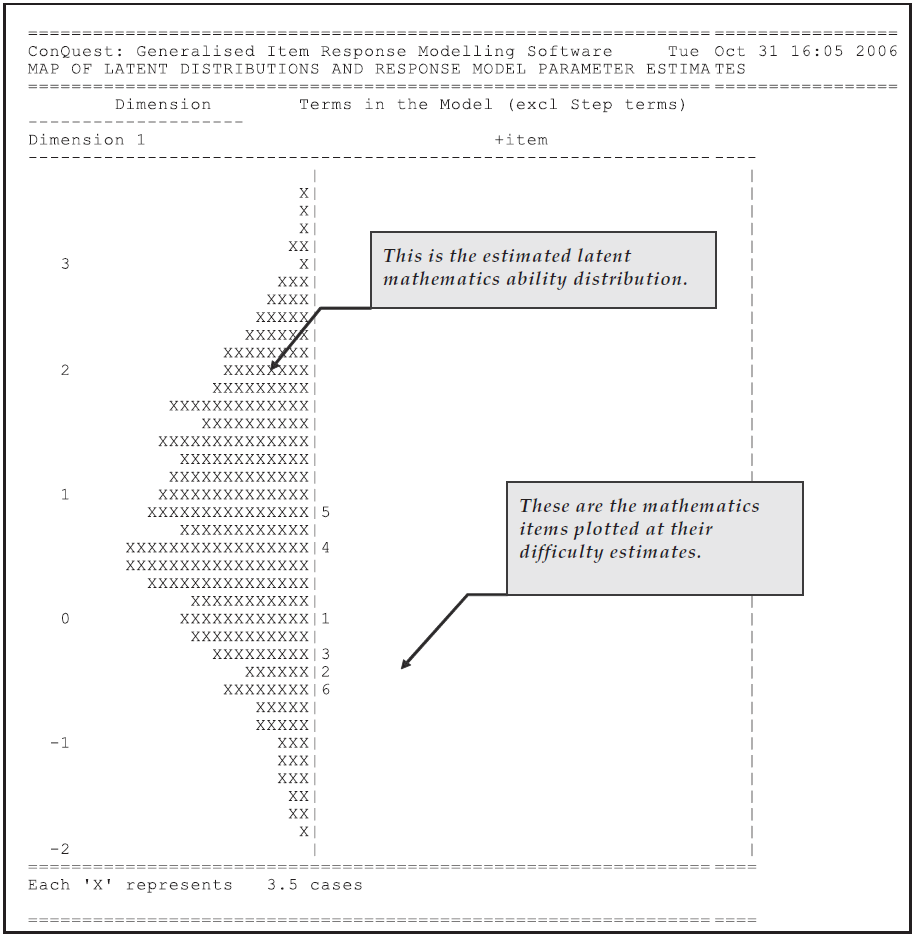

The fourth table in the output, Figure 2.11, provides a map of the item difficulty parameters.

Figure 2.11: The Item and Latent Distribution Map for the Simple Logistic Model

The file ex1_shw.txt contains one additional table, labelled Map of Latent Distributions and Thresholds.

In the case of dichotomously scored items and a model statement with a single term6, these maps provide the same information as that shown in Figure 2.11, so they are not discussed further.

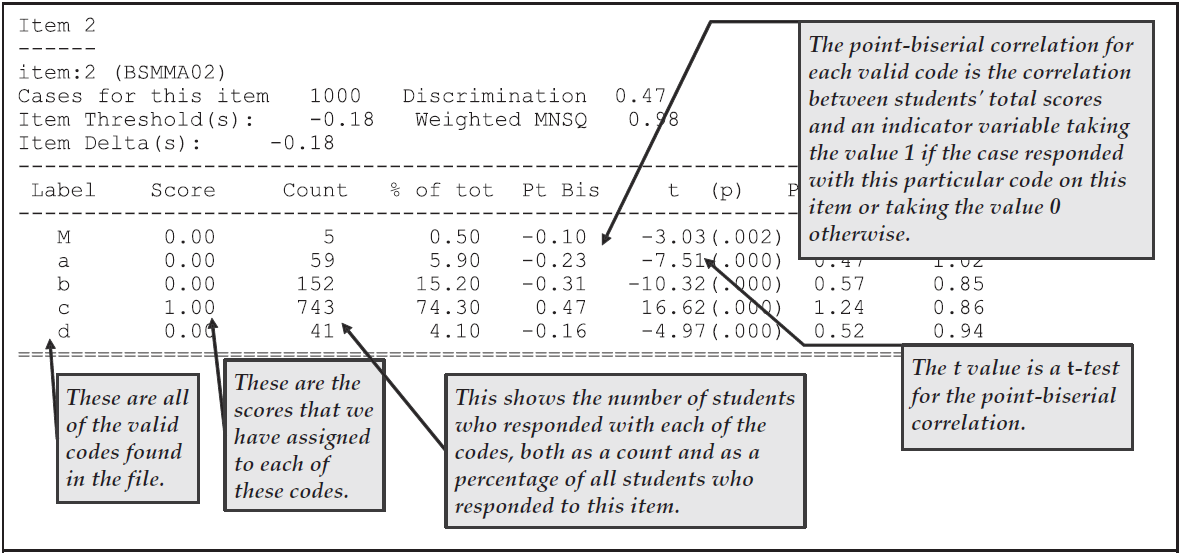

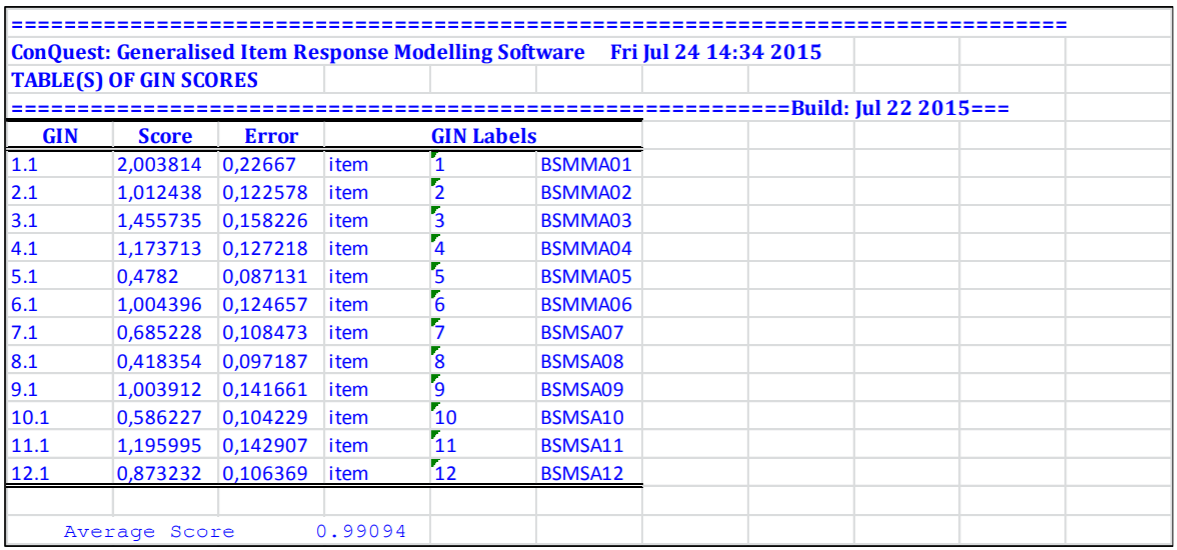

The traditional item analysis is invoked by the itanal statement, and its results have been written to the file ex1_itn.txt.

The itanal output includes a table showing classical difficulty, discrimination, and point-biserial statistics for each item.

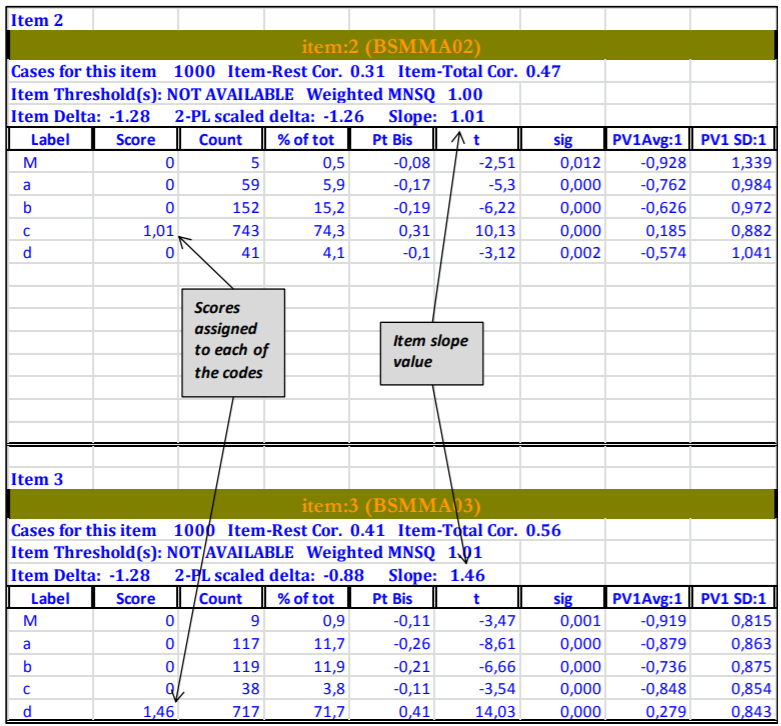

Figure 2.12 shows the results for item 2.

Figure 2.12: Example of Traditional Item Analysis Results

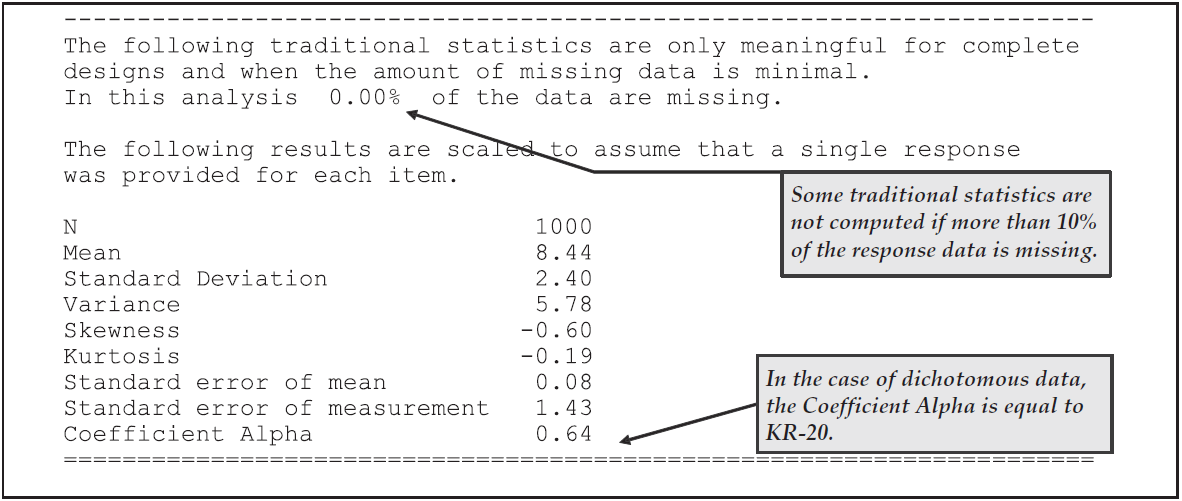

Summary results, including coefficient alpha for the test as a whole, are printed at the end of the file ex1_itn.txt as shown in Figure 2.13.

Discussion of the usage of the statistics can be found in any standard text book, such as Crocker & Algina (1986).

Figure 2.13: Summary Statistics from Traditional Item Analysis Results

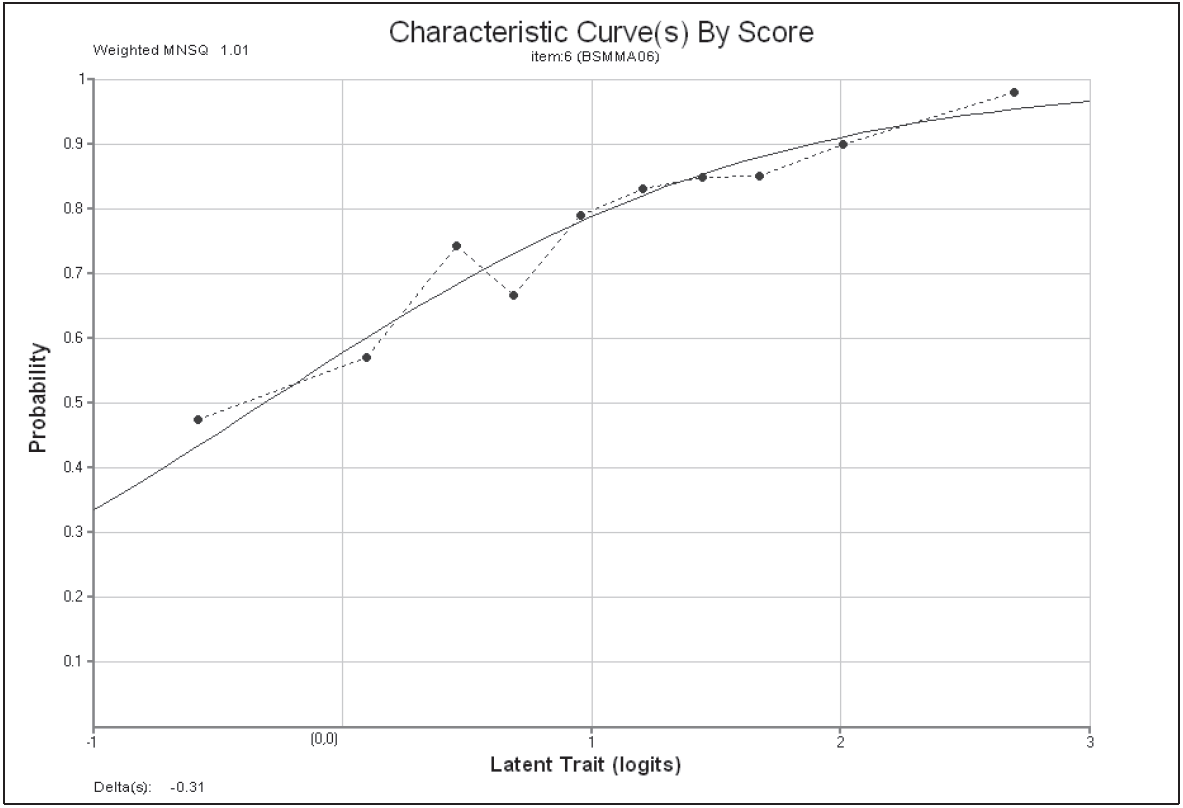

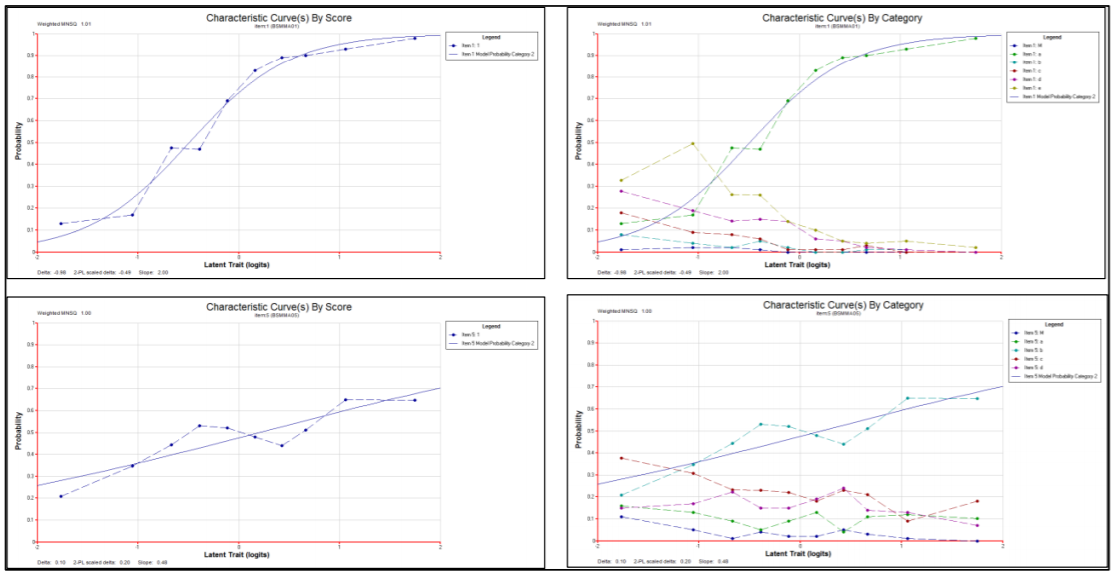

Figure 2.14 shows one of the 12 plots that were produced by the plot icc command.

The ICC plot shows a comparison of the empirical item characteristic curve (the broken line, which is based directly upon the observed data) with the modelled item characteristic curve (the smooth line).

Figure 2.14: Modelled and Empirical Item Characteristic Curves for Item 6

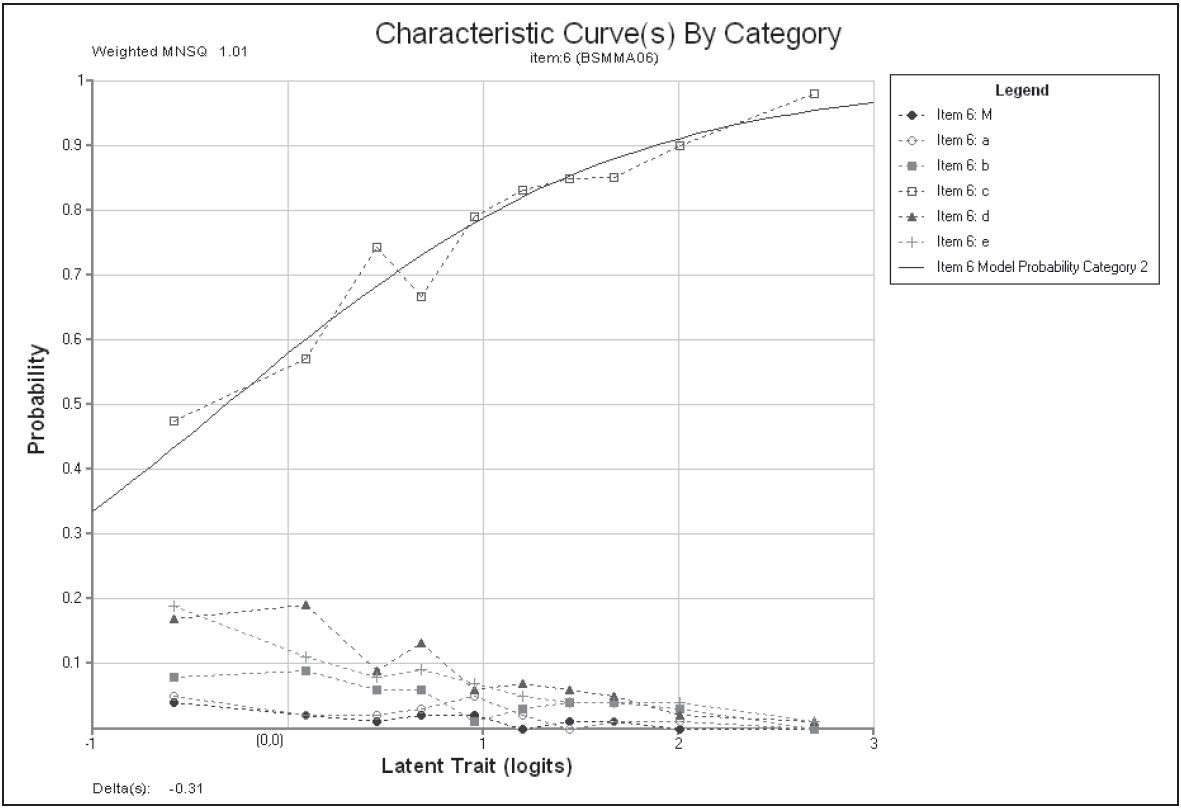

Figure 2.15 shows a matching plot produced by the plot mcc command.

In addition to showing the modelled curve and the matching empirical curve, this plot shows the characteristics of the incorrect responses—the distractors.

In particular it shows the proportion of students in each of a sequence of ten ability groupings7 that responded with each of the possible responses.

Figure 2.15: Modelled and Empirical Category Characteristics Curves for Item 6

TIP: Whenever a

keystatement is used, theitanalstatement will display results for all valid data codes. If akeystatement is not used, theitanalstatement will display the results of an analysis done after recoding has been applied.

2.2.4 Summary

This section shows how ACER ConQuest can be used to analyse a multiple-choice test. Some key points covered in this section are:

- the

datafile,formatandmodelstatements are prerequisites for data set analysis. - the

keystatement provides an efficient method for scoring multiple choice tests. - the

estimatestatement is used to fit an item response model to the data. - the

itanalstatement generates traditional item statistics. - the

plotstatement displays graphs which illustrate the relationship between the empirical data and the model’s expectation.

EXTENSION: ACER ConQuest can fit other models to multiple choice tests, including models such as the ordered partition model.

2.3 Modelling Polytomously Scored Items with the Rating Scale and Partial Credit Models

The rating scale model (Andrich, 1978; Wright & Masters, 1982) and the partial credit model (Masters, 1982; Wright & Masters, 1982) are extensions to Rasch’s simple logistic model and are suitable for use when items are scored polytomously. The rating scale model was initially developed by Andrich for use with Likert-style items, while Masters’ extension of the rating scale model to the partial credit model was undertaken to facilitate the analysis of cognitive items that are scored into more than two ordered categories. In this section, the use of ACER ConQuest to fit the partial credit and rating scale models is illustrated through two sets of sample analyses. In the first, the partial credit model is fit to some cognitive items; and in the second, the fit of the rating scale and partial credit models to a set of items that forms an attitudinal scale is compared.

2.3.1 a) Fitting the Partial Credit Model

The data for the first sample analysis are the responses of 515 students to a test of science concepts related to the Earth and space. Previous analyses of some of these data are reported in Adams et al. (1991).

2.3.1.1 Required files

The files used in this sample analysis are:

| filename | content |

|---|---|

| ex2a.cqc | The command statements. |

| ex2a_dat.txt | The data. |

| ex2a_lab.txt | The variable labels for the items on the partial credit test. |

| ex2a_shw.txt | The results of the partial credit analysis. |

| ex2a_itn.txt | The results of the traditional item analyses. |

(The last two files are created when the command file is executed.)

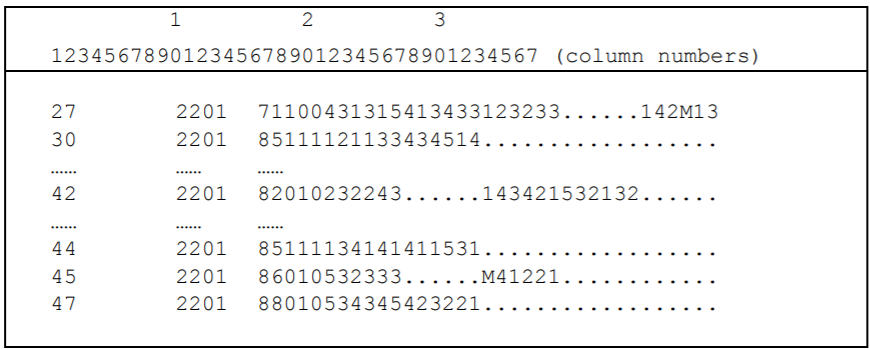

The data have been entered into the file ex2a_dat.txt, using one line per student.

A unique identification code has been entered in columns 2 through 7, and the students’ response to each of the items has been recorded in columns 10 through 17.

In this data, the upper-case alphabetic characters A, B, C, D, E, F, W, and X have been used to indicate the different kinds of responses that students gave to these items.

The code Z has been used to indicate data that cannot be analysed.

For each item, these codes are scored (or, more correctly, mapped onto performance levels) to indicate the level of quality of the response.

For example, in the case of the first item (the item in column 10), the response coded A is regarded as the best kind of response and is assigned to level 2, responses B and C are assigned to level 1, and responses W and X are assigned to level 0.

An extract of the file ex2a_dat.txt is shown in Figure 2.16.

Figure 2.16: Extract from the Data File ex2a_dat.txt

NOTE: In most Rasch-type models, a one-to-one match exists between the label that is assigned to each response category to an item (the category label) and the response level (or score) that is assigned to that response category. This need not be the case with ACER ConQuest.

In ACER ConQuest, the distinction between a response category and a response level is an important one. When ACER ConQuest fits item response models, it actually models the probabilities of each of the response categories for each item. The scores for each of these categories need not be unique. For example, a four-alternative multiple choice item can be modelled as a four-response category item with three categories assigned zero scores and one category assigned a score of one, or it can be modelled in the usual fashion as a two-category item where the scores identify the categories.

2.3.1.2 Syntax

The command file used in this analysis of a Partial Credit Test is ex2a.cqc, which is shown in the code box below.

Each line of the command file is described in the list underneath the code box.

ex2a.cqc:

Title Partial Credit Model: What happened last night;

data ex2a_dat.txt;

format name 2-7 responses 10-17;

labels << ex2a_lab.txt;

codes 3,2,1,0;

recode (A,B,C,W,X) (2,1,1,0,0) !items(1);

recode (A,B,C,W,X) (3,2,1,0,0) !items(2);

recode (A,B,C,D,E,F,W,X) (3,2,2,1,1,0,0,0)!items(3);

recode (A,B,C,W,X) (2,1,0,0,0) !items(4);

recode (A,B,C,D,E,W,X) (3,2,1,1,1,0,0) !items(5);

recode (A,B,W,X) (2,1,0,0) !items(6);

recode (A,B,C,W,X) (3,2,1,0,0) !items(7);

recode (A,B,C,D,W,X) (3,2,1,1,0,0) !items(8);

model item + item*step;

estimate;

show !estimates=latent >> results/ex2a_shw.txt;

itanal >> results/ex2a_itn.txt;

plot expected! gins=2;

plot icc! gins=2;

plot ccc! gins=2;Line 1

Gives a title for this analysis. The text supplied after the commandtitlewill appear on the top of any printed ACER ConQuest output. If a title is not provided, the default,ConQuest: Generalised Item Response Modelling Software, will be used.Line 2

Indicates the name and location of the data file. Any name that is valid for the operating system you are using can be used here.Line 3

Theformatstatement describes the layout of the data in the fileex2a_dat.txt. This format indicates that a field callednameis located in columns 2 through 7 and that the responses to the items are in columns 10 through 17 (the response block) of the data file.Line 4

A set of labels for the items are to be read from the fileex2a_lab.txt. If you take a look at these labels, you will notice that they are quite long. ACER ConQuest labels can be of any length, but most ACER ConQuest printouts are limited to displaying many fewer characters than this. For example, the tables of parameter estimates produced by theshowstatement will display only the first 11 characters of the labels.Line 5

Thecodesstatement is used to restrict the list of codes that ACER ConQuest will consider valid. In the sample analysis in section 2.2, acodesstatement was not used. This meant that any character in the response block defined by theformatstatement — except a blank or a period (.) character (the default missing-response codes) — was considered valid data. In this sample analysis, the valid codes have been limited to the digits 0, 1, 2 and 3; any other codes for the items will be treated as missing-response data. It is important to note that thecodesstatement refers to the codes after the application of any recodes.Lines 6-13

The eightrecodestatements are used to collapse the alphabetic response categories into a smaller set of categories that are labelled with the digits0,1,2and3. Each of theserecodestatements consists of three components:- The first component is a list of codes contained within parentheses.

These are codes that will be found in the data file

ex2a_dat.txt, and these are called the from codes. - The second component is also a list of codes contained within parentheses, these codes are called the to codes. The length of the to codes list must match the length of the from codes list. When ACER ConQuest finds a response that matches a from code, it will change (or recode) it to the corresponding to code.

- The third component (the option of the

recodecommand) gives the levels of the variables for which therecodeis to be applied. Line 11, for example, says that, for item 6, A is to be recoded to 2, B is to be recoded to 1, and W and X are both to be recoded to 0.

Any codes in the response block of the data file that do not match a code in the from list will be left untouched. In these data, the Z codes are left untouched; and since Z is not listed as a valid code, all such data will be treated as missing-response data.

When ACER ConQuest models these data, the number of response categories that will be assumed for each item will be determined from the number of distinct codes for that item. Item 1 has three distinct codes (2, 1 and 0), so three categories will be modelled; item 2 has four distinct codes (3, 2, 1 and 0), so four categories will be modelled.

- The first component is a list of codes contained within parentheses.

These are codes that will be found in the data file

Line 14

Themodelstatement for these data contains two terms (itemanditem*step) and will result in the estimation of two sets of parameters. The termitemresults in the estimation of a set of item difficulty parameters, and the termitem*stepresults in a set of item step-parameters that are allowed to vary across the items. This is the partial credit model.In the section

[The Structure of ACER ConQuest Design Matrices]in chapter 3, there is a description of how the terms in themodelstatement specify different versions of the item response model.Line 15

Theestimatestatement is used to initiate the estimation of the item response model.Line 16

Theshowstatement produces a display of the item response model parameter estimates and saves them to the fileex2a_shw.txt. The optionestimates=latentrequests that the displays include an illustration of the latent ability distribution.Line 17

Theitanalstatement produces a display of the results of a traditional item analysis. As with theshowstatement, the results have been redirected to a file (in this case,ex2a_itn.txt).Lines 18-20

Theplotstatements produce a sequence of three displays for item 2 only. The first requested plot is a comparison of the observed and the modelled expected score curve. The second plot is a comparison of the observed and modelled item characteristics curves, and the third plot shows comparisons of the observed and expected cumulative item characteristic curves.

2.3.1.3 Running the Partial Credit Sample Analysis

To run this sample analysis, start the GUI version.

Open the file ex2a.cqc and choose

Run\(\rightarrow\)Run All.

ACER ConQuest will begin executing the statements that are in the file ex2a.cqc; and as they are executed, they will be echoed on the screen.

When ACER ConQuest reaches the estimate statement, it will begin fitting the partial credit model to the data, and as it does so it will report on the progress of the estimation.

After the estimation is complete, the two statements that produce output (show and itanal) will be processed.

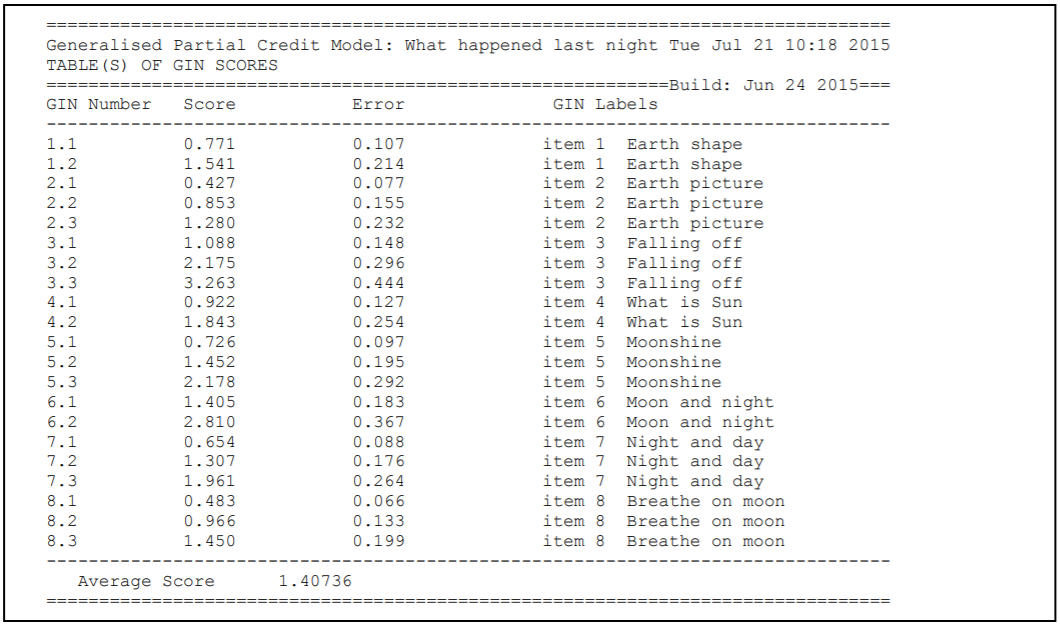

As in the previous sample analysis, the show statement will produce six separate tables.

All of these tables will be in the file ex2a_shw.txt.

The contents of the first table were discussed in section 2.2.

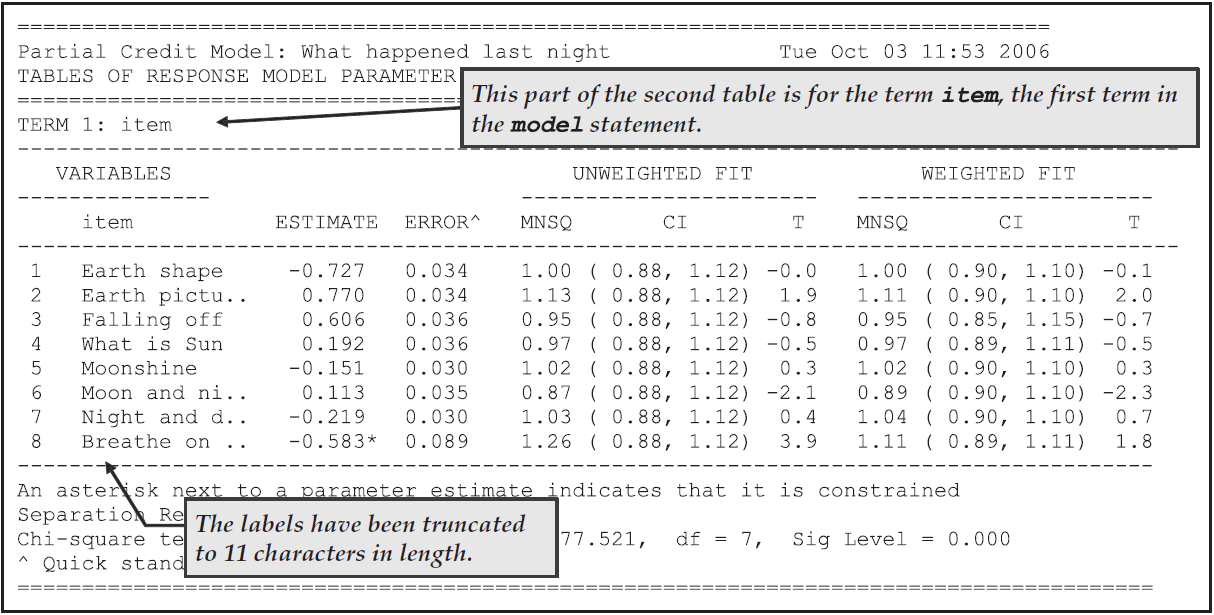

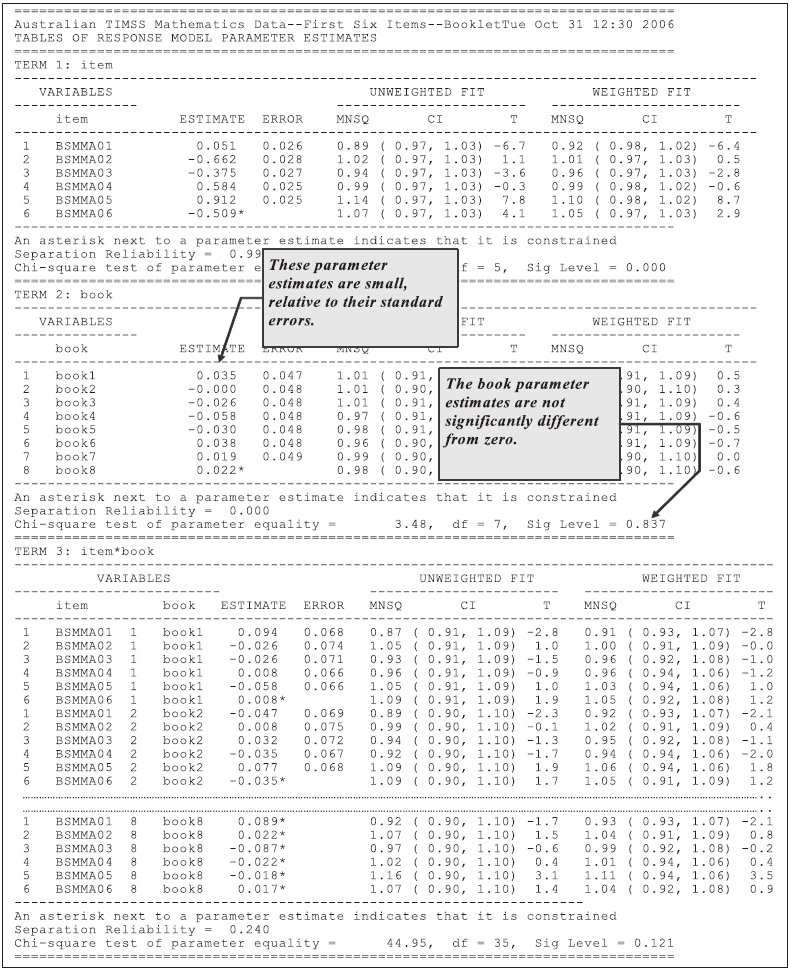

The first half of the second table, which contains information related to the parameter estimates for the first term in the model statement, is shown in Figure 2.17.

The parameter estimates in this table are for the difficulties of each of the items.

For the purposes of model identification, ACER ConQuest constrains the difficulty estimate for the last item to ensure an average difficulty of zero.

This constraint has been achieved by setting the difficulty of the last item to be the negative sum of the previous items.

The fact that this item is constrained is indicated by the asterisk (*) placed next to the parameter estimate.

Figure 2.17: Parameter Estimates for the First Term in the model Statement

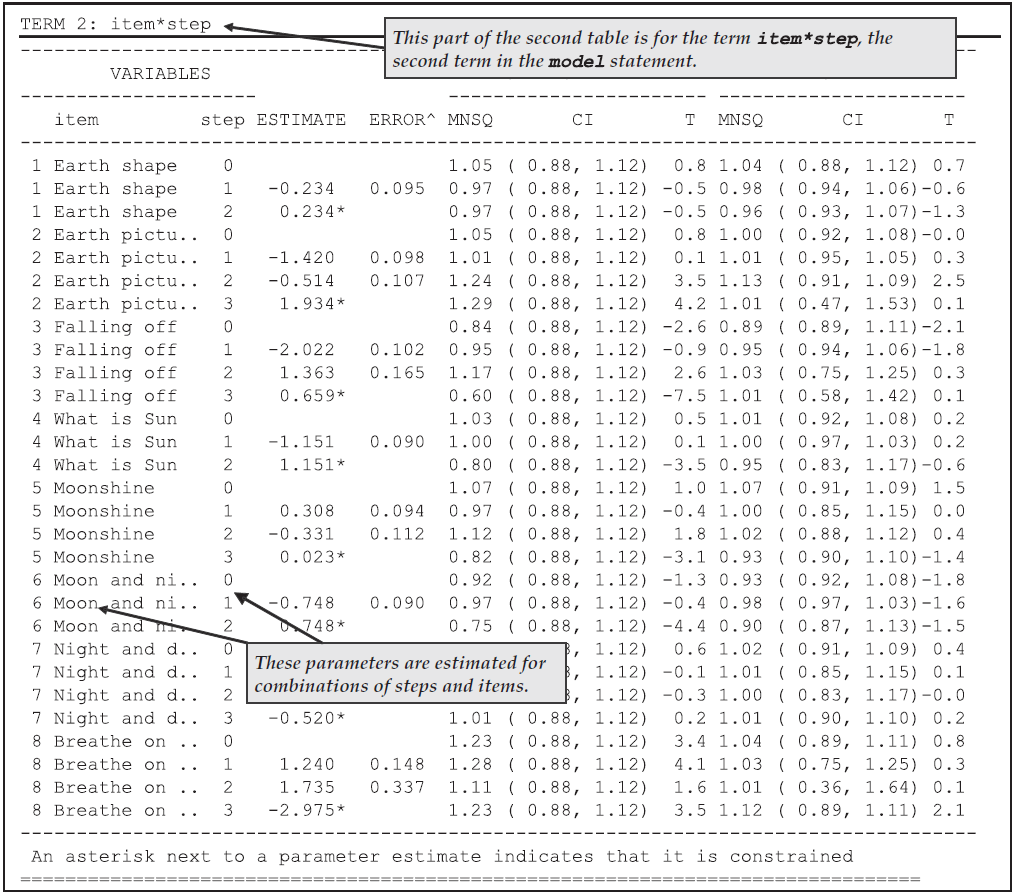

Figure 2.18 shows the second table, which displays the parameter estimates, standard errors and fit statistics associated with the second term in the model statement, the step parameters.

You will notice that the number of step parameters that has been estimated for each item is one less than the number of modelled response categories for the item.

Furthermore, the last of the parameters for each item is constrained so that the sum of the parameters for an item equals zero.

This is a necessary identification constraint.

In the case of item 1, for example, there are three categories, 0, 1 and 2.

Two values are reported, but only the first step parameter has been estimated.

The second is the negative of the first.

The parameter labelled as step 1, describes the transition from category 0 to 1, where the probability of being in category 1 is greater than the probability of being in category 0, while the second step describes the transition from 1 to 2.

The section The Structure of ACER ConQuest Design Matrices in Chapter 3 gives a description of why an item has two fewer step parameters than it has categories, and it discusses the interpretation of these parameters.

Figure 2.18: Parameter Estimates for the Second Term in the model Statement

There is a fit statistic reported for each category. This statistic provides a comparison of the expected number of students responding in the category with the observed number responding in that category.

The third table in the file (not shown here) gives the estimates of the population parameters. In this case, the mean of the latent ability distribution is –0.320, and the variance of that distribution is 0.526.

The fourth table reports the reliability coefficients. Three different reliability statistics are available (Adams, 2005). In this case just the third index (the EAP/PV reliability) is reported because neither of the maximum likelihood estimates has been computed at this stage. The reported reliability is 0.735.

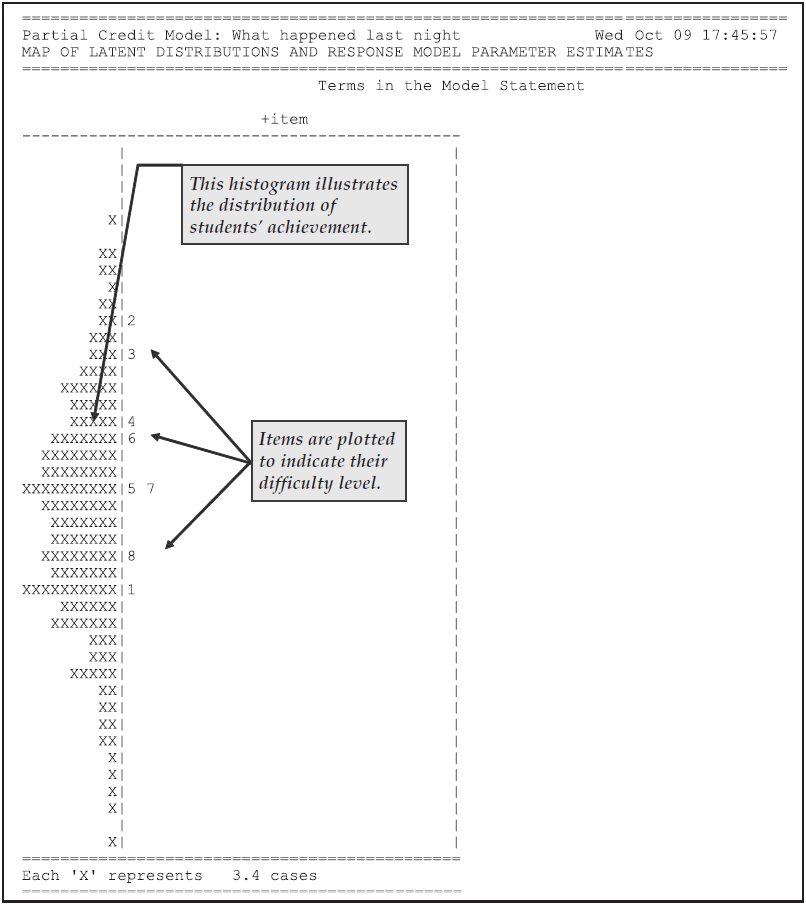

The fifth table Figure 2.19 is a map of the parameter estimates and latent ability distribution.

For this model, the map consists of two panels, one for the latent ability distribution and one for each of the terms in the model statement that do not include a step (in this case one).

In this case the leftmost panel shows the estimated latent ability distribution and the second shows the item difficulties.

Figure 2.19: The Item and Latent Distribution Map for the Partial Credit Model

EXTENSION: The headings of the panels in Figure 2.19 are preceded by a plus sign (

+). This indicates the orientation of the parameters. A plus indicates that the facet is modelled with difficulty parameters, whereas a minus sign (–) indicates that the facet is modelled with easiness parameters. This is controlled by the sign that you use in themodelstatement.

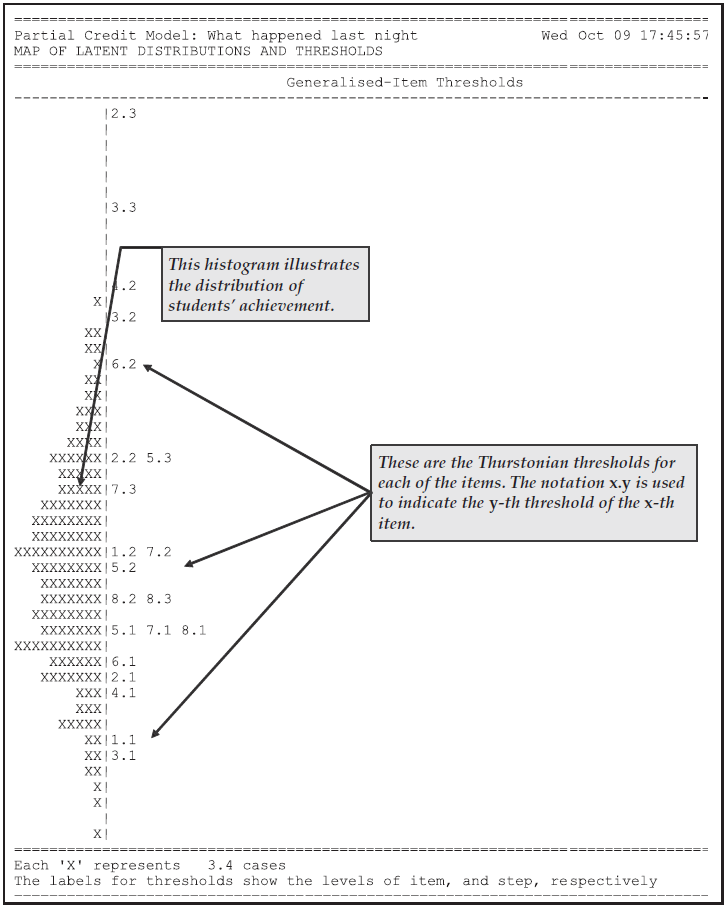

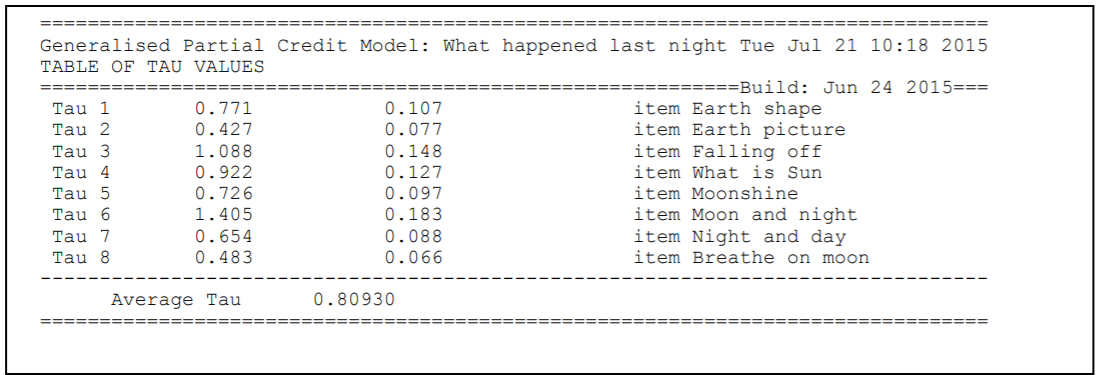

Figure 2.20, the sixth table from the file ex2a_shw.txt, is a plot of the Thurstonian thresholds for the items.

The definition of these thresholds is discussed in Computing Thresholds in Chapter 3.

Briefly, they are plotted at the point where a student has a 50% chance of achieving at least the indicated level of performance on an item.

Figure 2.20: Item Thresholds for the Partial Credit Model

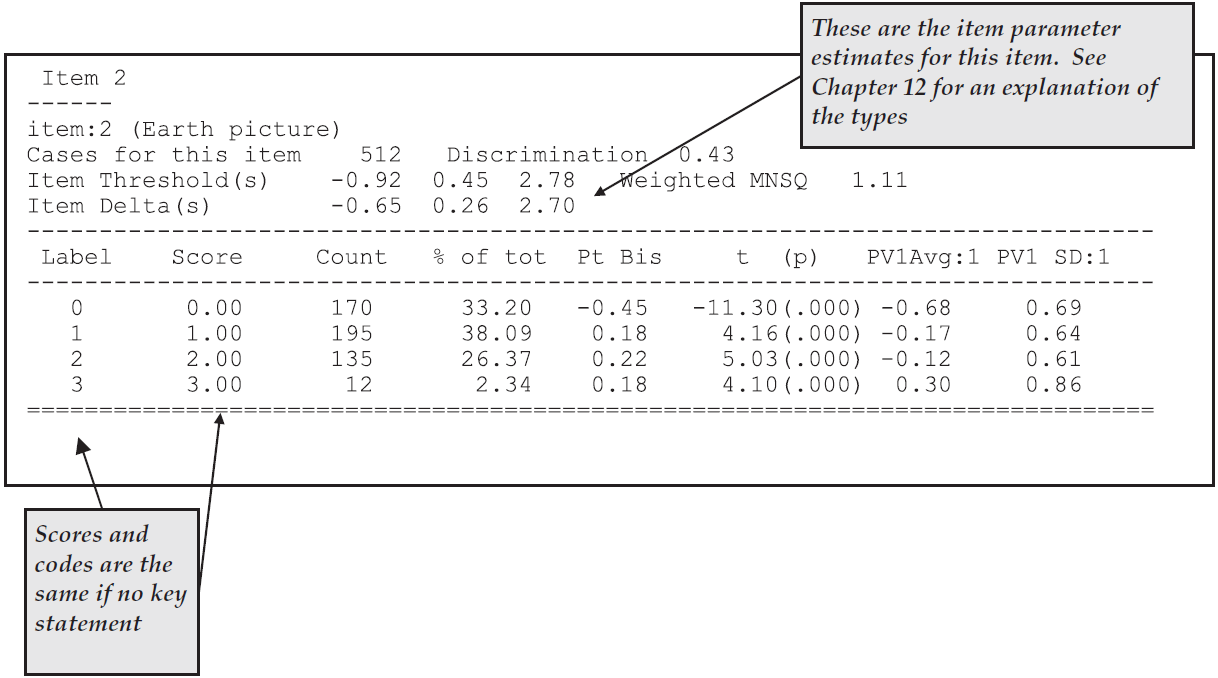

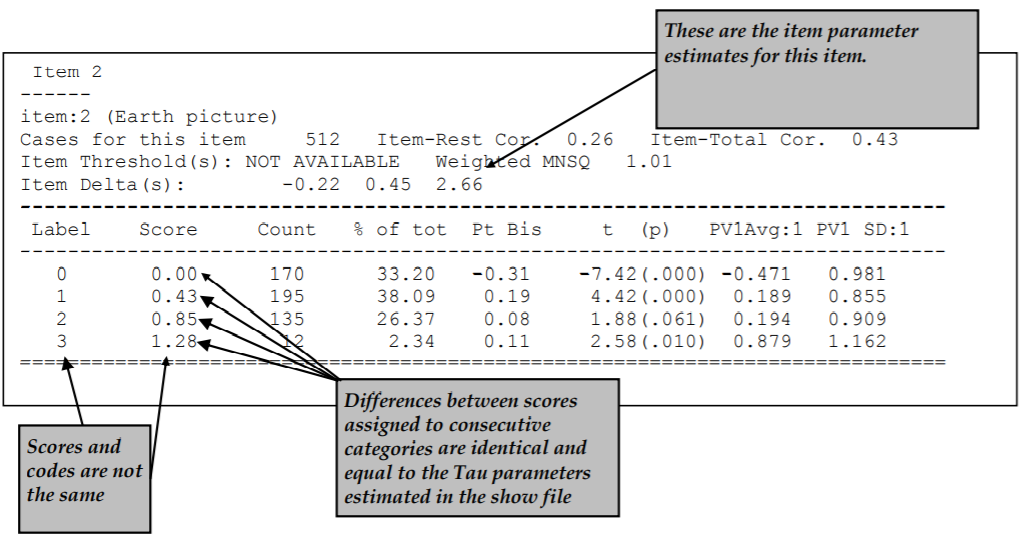

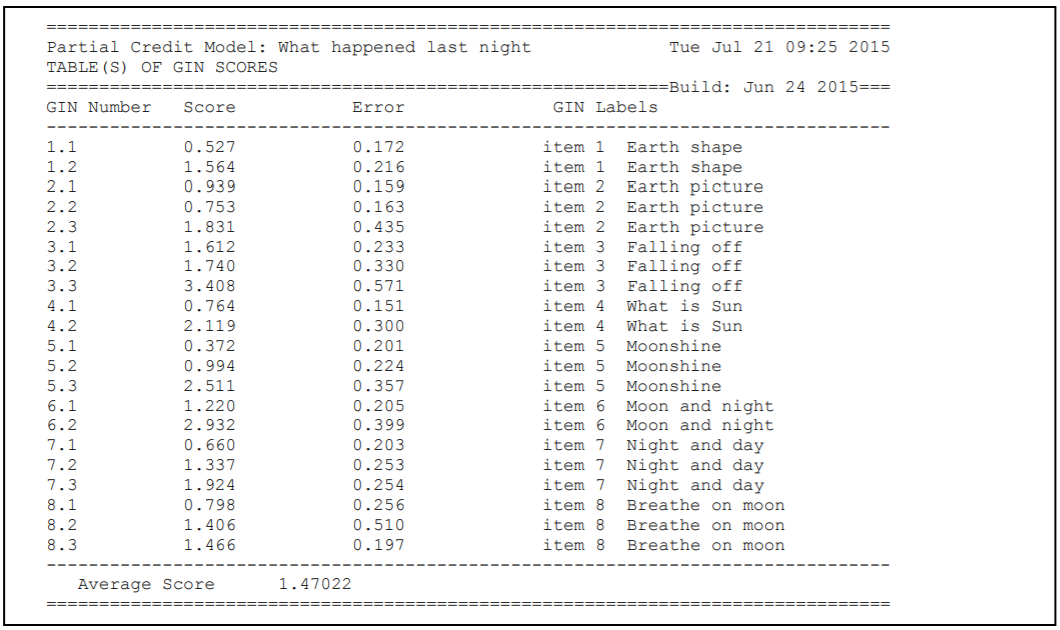

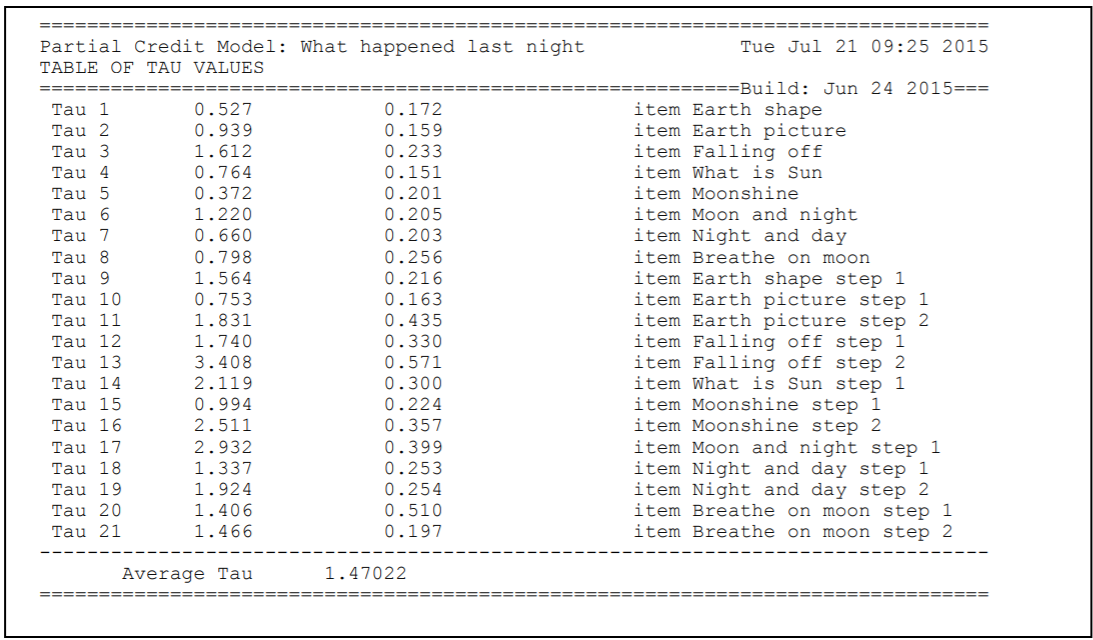

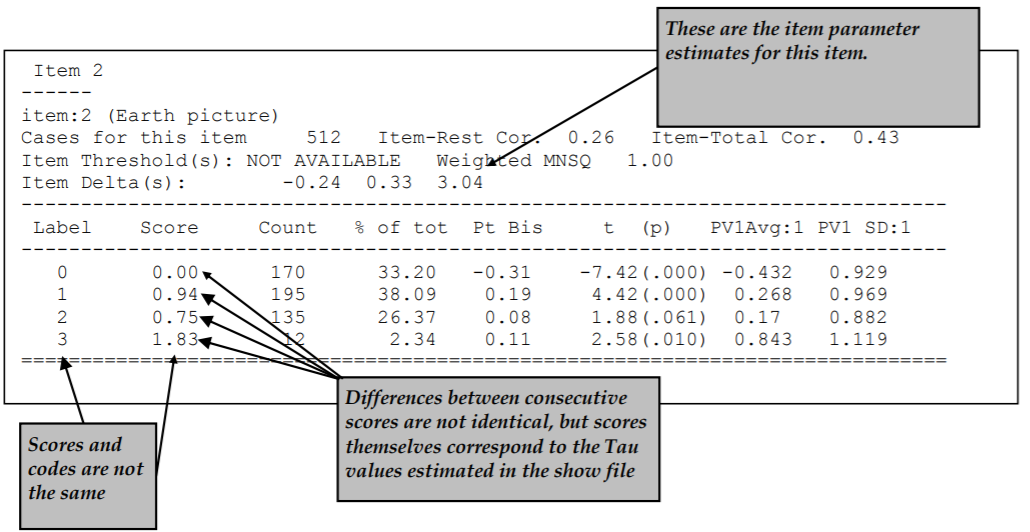

The itanal command in line 17 produces a file (ex2a_itn.txt) that contains traditional item statistics (Figure 2.21).

In the previous section a multiple-choice test was analysed and the itanal output for multiple-choice items was described.

In this example a key statement was not used and the items use partial credit scoring.

As a consequence the itanal results are provided at the level of scores, rather than response categories.

Figure 2.21: Extract of Item Analysis Printout for a Partial Credit Item

EXTENSION: The method used to construct the ability distribution is determined by the

estimates=option used in theshowstatement. Thelatentdistribution is constructed by drawing a set of plausible values for the students and constructing a histogram from the plausible values. Other options for the distribution areEAP,WLEandMLE, which result in histograms of expected a-posteriori, weighted maximum likelihood and maximum likelihood estimates, respectively. Details of these ability estimates are discussed in Latent Estimation and Prediction in Chapter 3.

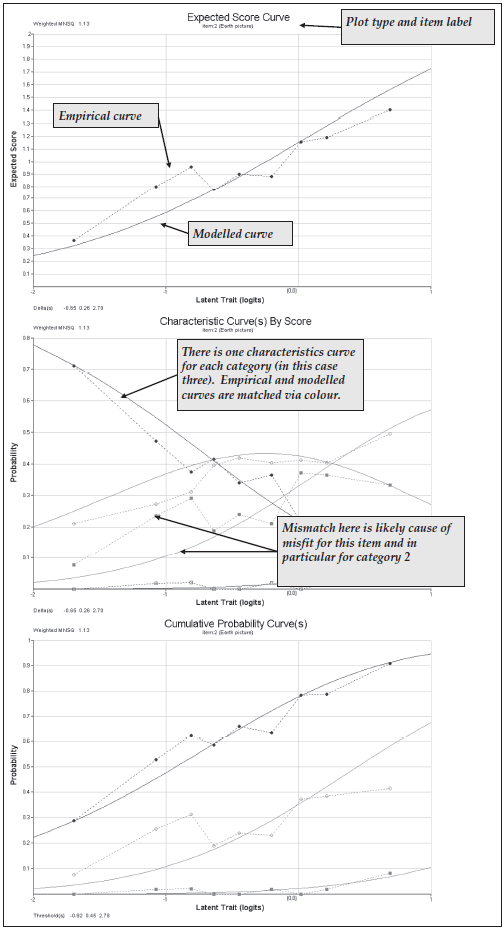

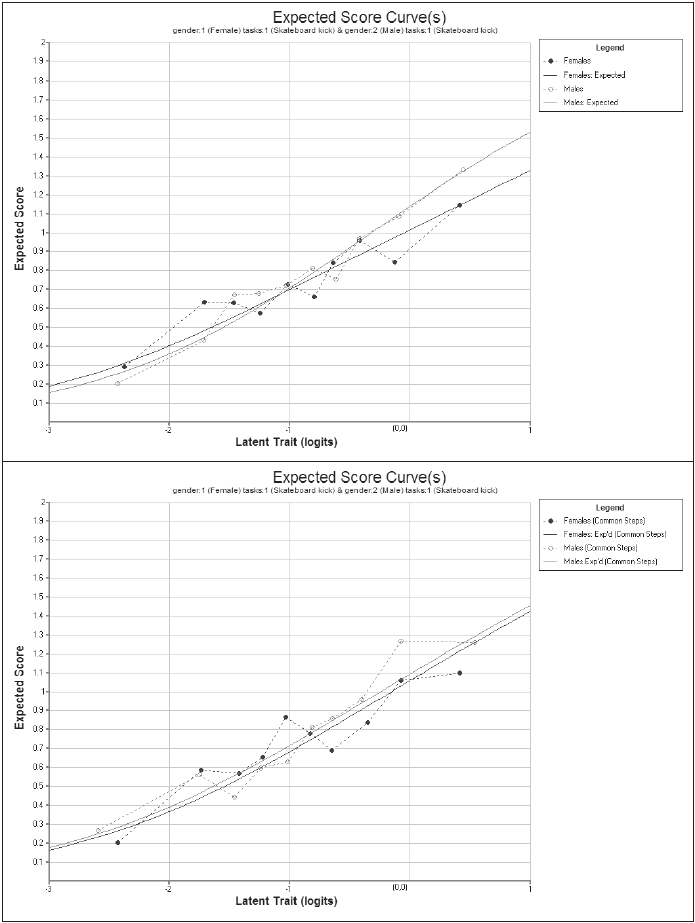

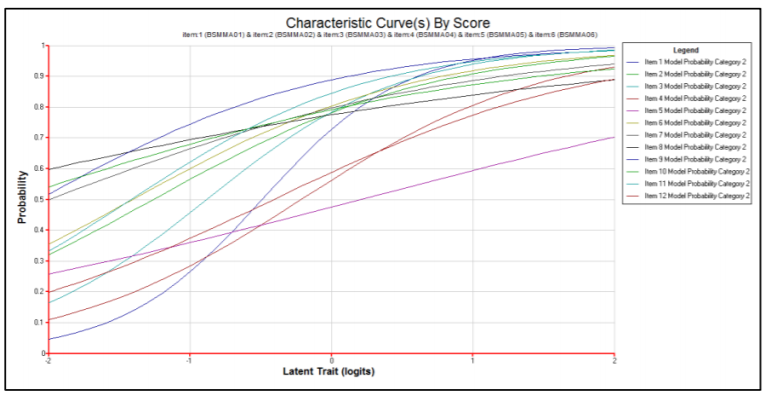

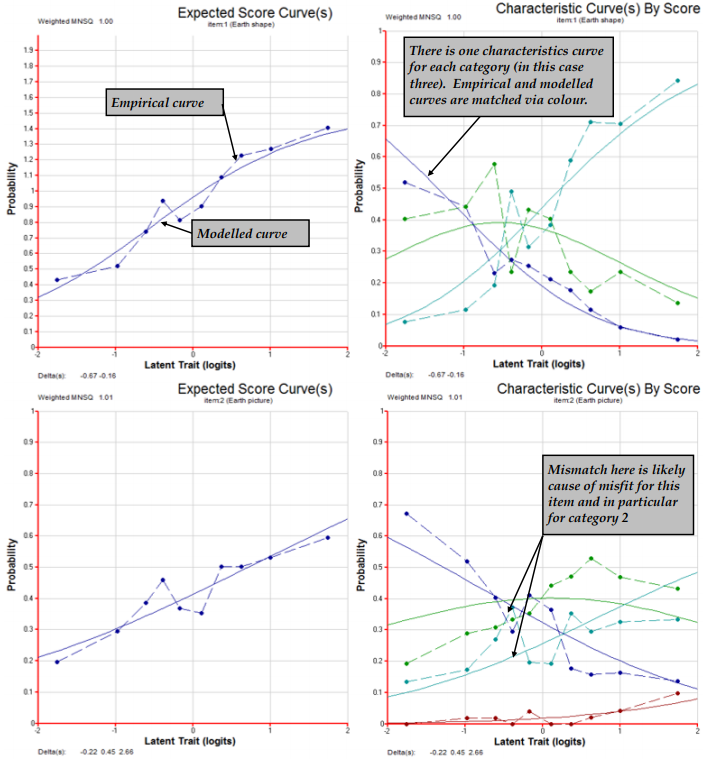

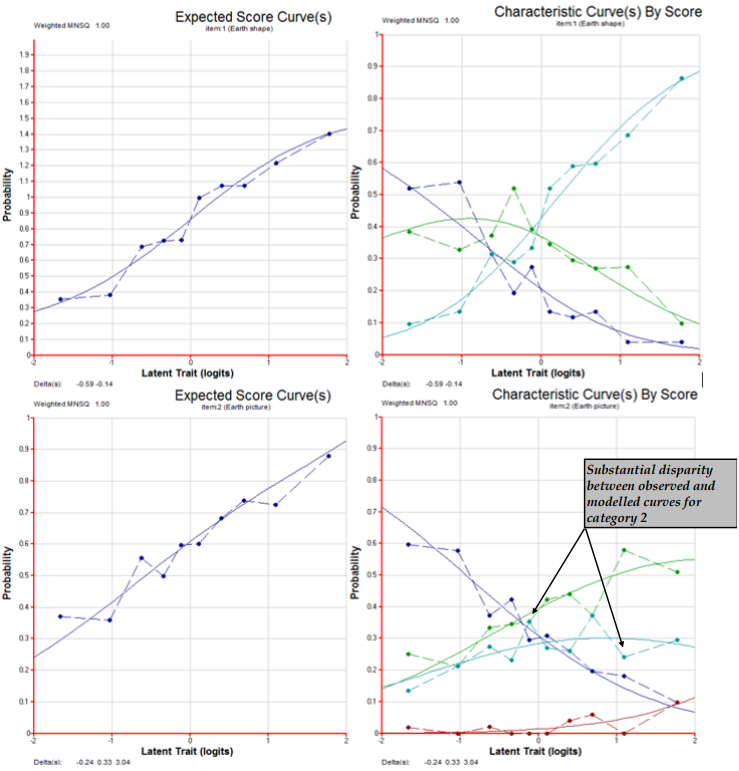

The three plot commands (lines 18–20) produce the graphs shown in Figure 2.22. For illustrative purposes only plots for item 2 are requested. This item showed poor fit to the scaling model — in this case the partial credit model.

The item fit MNSQ of 1.11 indicates that this item is less discriminating than expected by the model. The first plot, the comparison of the observed and modelled expected score curves is the best illustration of this misfit. Notice how in this plot the observed curve is a little flatter than the modelled curve. This will often be the case when the MNSQ is significantly larger than 1.0.

The second plot shows the item characteristic curves, both modelled and empirical. There is one pair of curves for each possible score on the item, in this case 0, 1, 2 and 3. Note that the disparity between the observed and modelled curves for category 2 is the largest and this is consistent with the high fit statistic for this category.

The third plot is a cumulative form of the item characteristic curves. In this case three pairs of curves are plotted. The rightmost pair gives the probability of a response of 3, the next pair is for the probability of 2 or 3, and the final pairing is for the probability of 1, 2 or 3. Where these curves attain a probability of 0.5, the value on the horizontal axis corresponds to each of the three threshold parameters that are reported under the figure.

Figure 2.22: Plots for Item 2

2.3.2 b) Partial Credit and Rating Scale Models: A Comparison of Fit

A key feature of ACER ConQuest is its ability to fit alternative Rasch-type models to the same data set. Here a rating scale model and a partial credit model are fit to a set of items that were designed to measure the importance placed by teachers on adequate resourcing and support to the success of bilingual education programs.

2.3.2.1 Required files

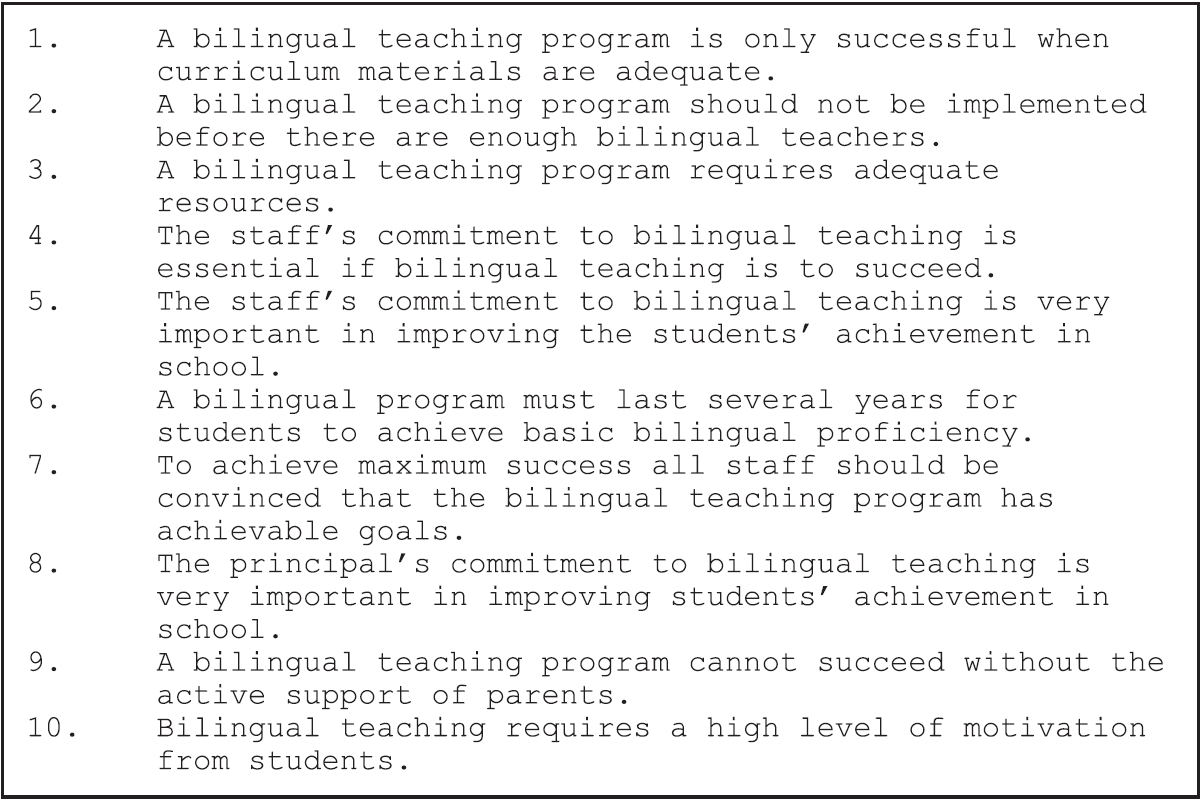

The data come from a study undertaken by Zammit (1997). The data consist of the responses of 582 teachers to the 10 items listed in Figure 2.23. Each item was presented with a Likert-style response format; and in the data file, strongly agree was coded as 1, agree as 2, uncertain as 3, disagree as 4, and strongly disagree as 5.

Figure 2.23: Items Used in the Comparison of the Rating Scale and the Partial Credit Models

The files that we use are:

| filename | content |

|---|---|

| ex2b.cqc | The command statements. |

| ex2b_dat.txt | The data. |

| ex2b_lab.txt | The variable labels for the items on the rating scale. |

| ex2b_shw.txt | The results of the rating scale analysis. |

| ex2b_itn.txt | The results of the traditional item analyses. |

| ex2c_shw.txt | The results of the partical credit analysis. |

(The last three files are created when the command file is executed.)

2.3.2.2 Syntax

The code box below contains the contents of ex2b.cqc.

This is the command file used in this analysis to fit a Rating Scale and then a Partial Credit Model to the same data we used in part a) of this tutorial.

The list underneath the code box explains each line from the command file.

ex2b.cqc:

title Rating Scale Analysis;

datafile ex2b_dat.txt;

format responses 9-15,17-19;

codes 0,1,2;

recode (1,2,3,4,5) (2,1,0,0,0);

labels << ex2b_lab.txt;

model item + step; /*Rating Scale*/

estimate;

show>>results/ex2b_shw.txt;

itanal>>results/ex2b_itn.txt;

reset;

title Partial Credit Analysis;

datafile ex2b_dat.txt;

format responses 9-15,17-19;

codes 0,1,2;

recode (1,2,3,4,5) (2,1,0,0,0);

labels << ex2b_lab.txt;

model item + item*step; /*Partial Credit*/

estimate;

show>>results/ex2c_shw.txt;Line 1

For this analysis, we are using the titleRating Scale Analysis.Line 2

The data for this sample analysis are to be read from the fileex2b_dat.txt.Line 3

Theformatstatement describes the layout of the data in the fileex2b_dat.txt. This format indicates that the responses to the first seven items are located in columns 9 through 15 and that the responses to the next three items are located in columns 17 through 19.Line 4

The valid codes, after recode, are 0, 1 and 2.Line 5

The original codes of 1, 2, 3, 4, and 5 are recoded to 2, 1, and 0. Because 3, 4, and 5 are all being recoded to 0, this means we are collapsing these categories (uncertain, disagree, and strongly disagree) for the purposes of this analysis.Line 6

A set of labels for the items is to be read from the fileex2b_lab.txt.Line 7

This is themodelstatement that corresponds to the rating scale model. The first term in themodelstatement indicates that an item difficulty parameter is modelled for each item, and the second indicates that step parameters are the same for all items.Line 8

Theestimatestatement is used to initiate the estimation of the item response model.Line 9

Item response model results are to be written to the fileex2b_shw.txt.Line 10

Traditional statistics are to be written to the fileex2b_itn.txt.Line 11

Theresetstatement can be used to separate jobs that are put into a single command file. Theresetstatement returns all values to their defaults. Even though many values are the same for these analyses, we advise resetting, as you may be unaware of some values that have been set by the previous statements.Lines 12-20

These lines replicate lines 1 to 9. The only difference is in themodelstatement (compare lines 18 and 7). In the first analysis, the second term of themodelstatement isstep, whereas in the second analysis the second term isitem*step. In the latter case, the step structure is allowed to vary across items, whereas in the first case, the step structure is constrained to be the same across items.

2.3.2.3 Running the Comparison of the Rating Scale and Partial Credit Models

To run this sample analysis, launch the GUI version of ACER ConQuest and open the command file ex2b.cqc and choose Run\(\rightarrow\)Run All.

ACER ConQuest will begin executing the statements that are in the file ex2b.cqc; and as they are executed, they will be echoed on the screen.

Firstly the rating scale model will be fit, followed by the partial credit model.

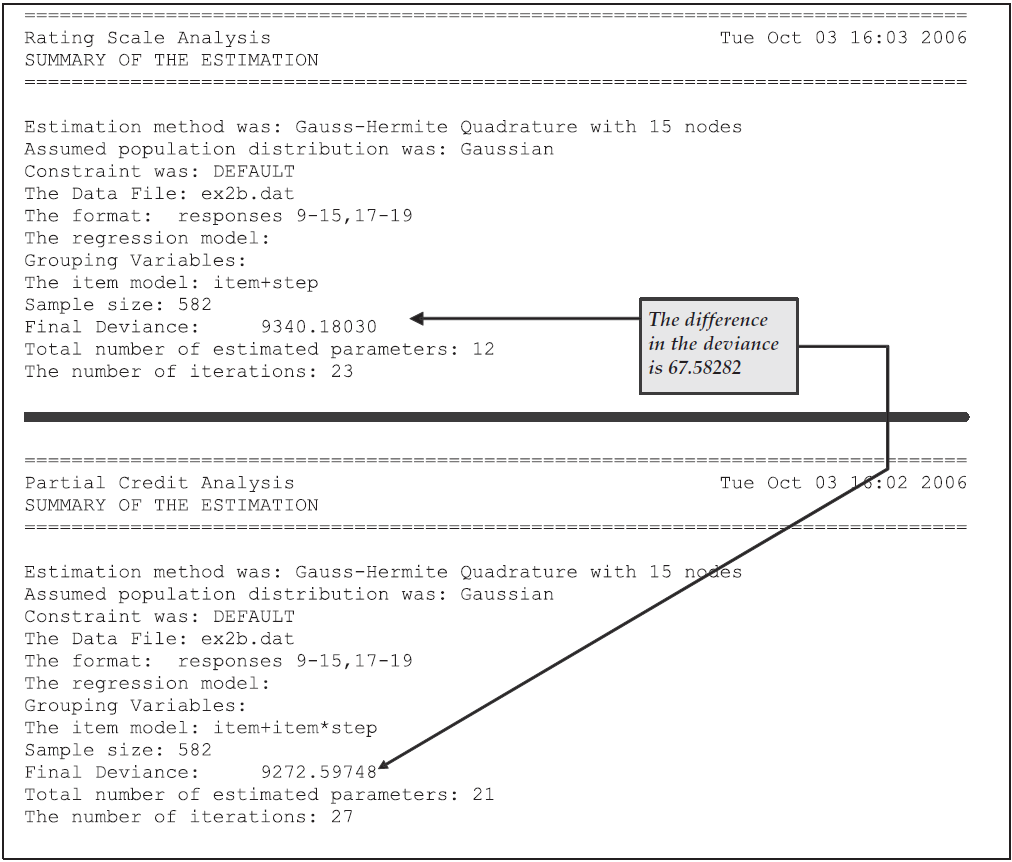

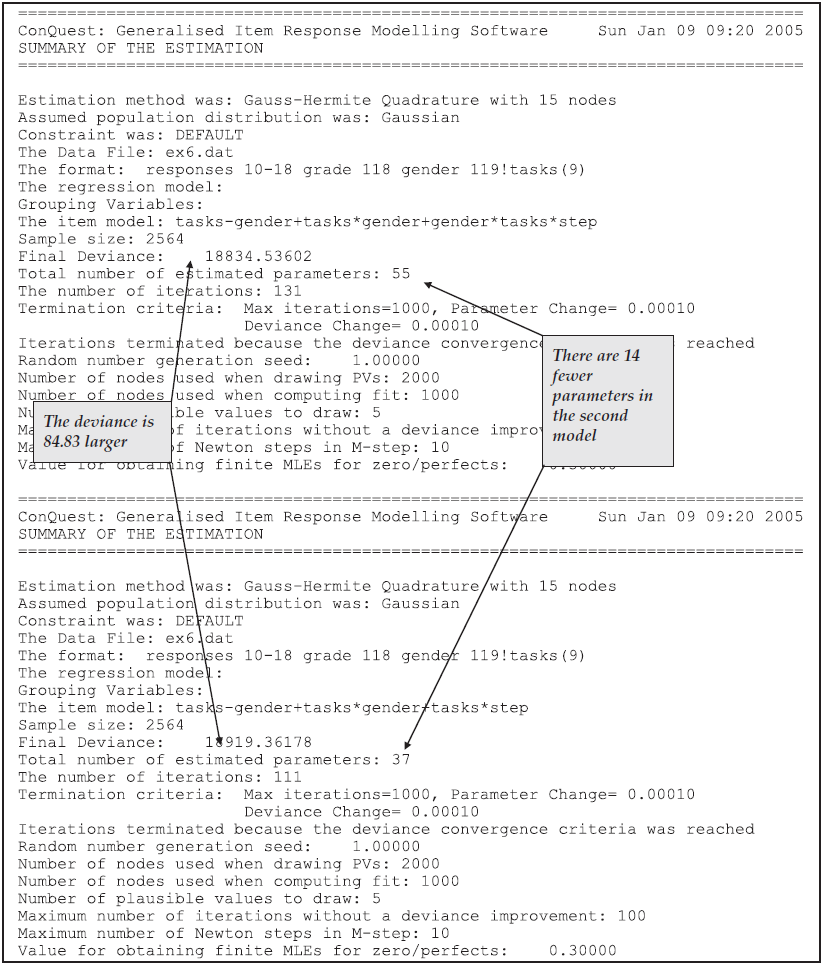

To compare the fit of the two models to these data, two tables produced by the show statements for each model are compared.

First, the summary tables for each model are compared.

These two tables are reproduced in Figure 2.24.

From these tables we note that the rating scale model has used 12 parameters, and the partial credit model has used 21 parameters.

For the rating scale model, the parameters are the mean and variance of the latent variable, nine item difficulty parameters, and a single step parameter.

For the partial credit model, the parameters are the mean and variance of the latent variable, nine item difficulty parameters and 10 step parameters.

A formal statistical test of the relative fit of these models can be undertaken by comparing the deviance of the two models. Comparing the deviance in the summary tables, note that the rating scale model deviance is 67.58 greater than the deviance for the partial credit model. If this value is compared to a chi-squared distribution with 9 degrees of freedom, this value is significant and it can be concluded that the fit of the rating scale model is significantly worse than the fit of the partial credit model.

Figure 2.24: Summary Information for the Rating Scale and Partial Credit Analyses

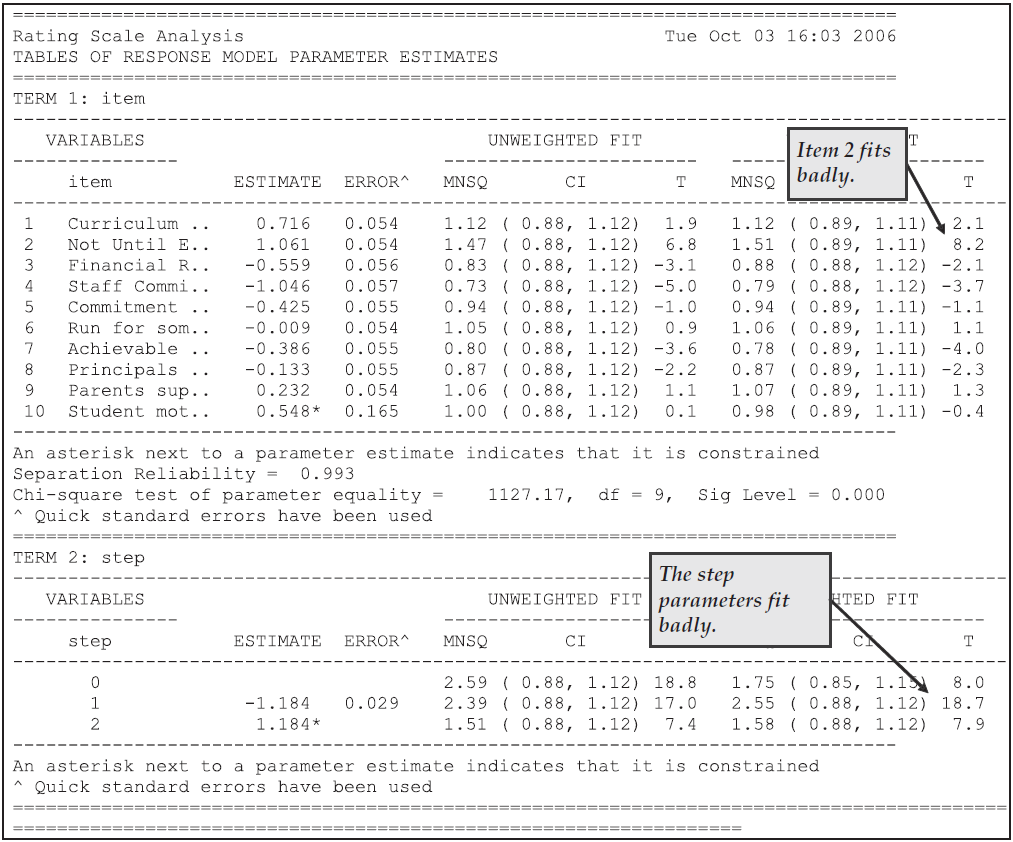

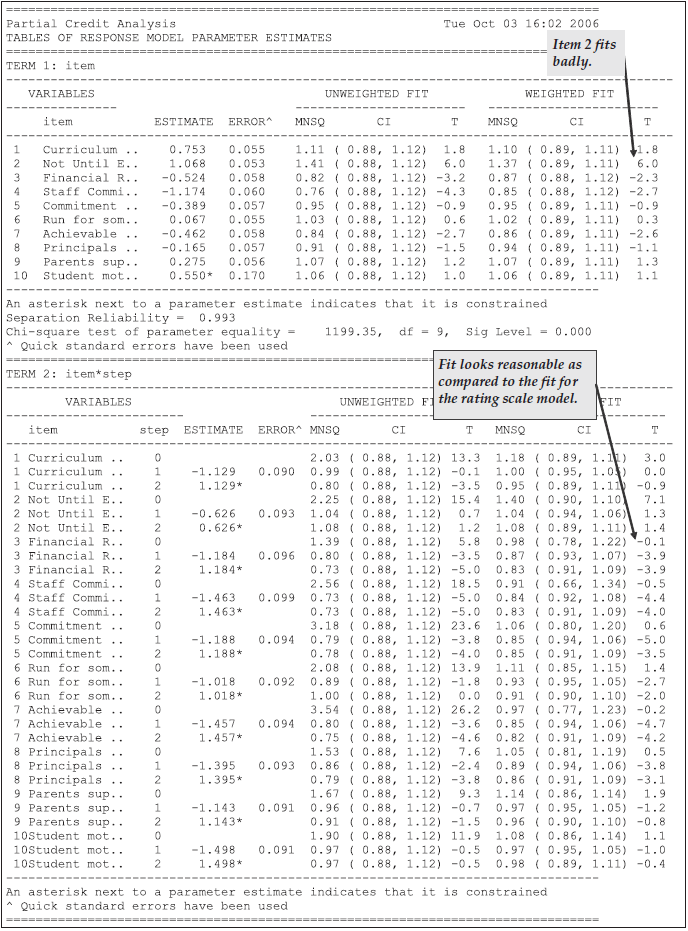

The difference in the fit of these two models is highlighted by comparing the contents of Figures 2.25 and 2.26.

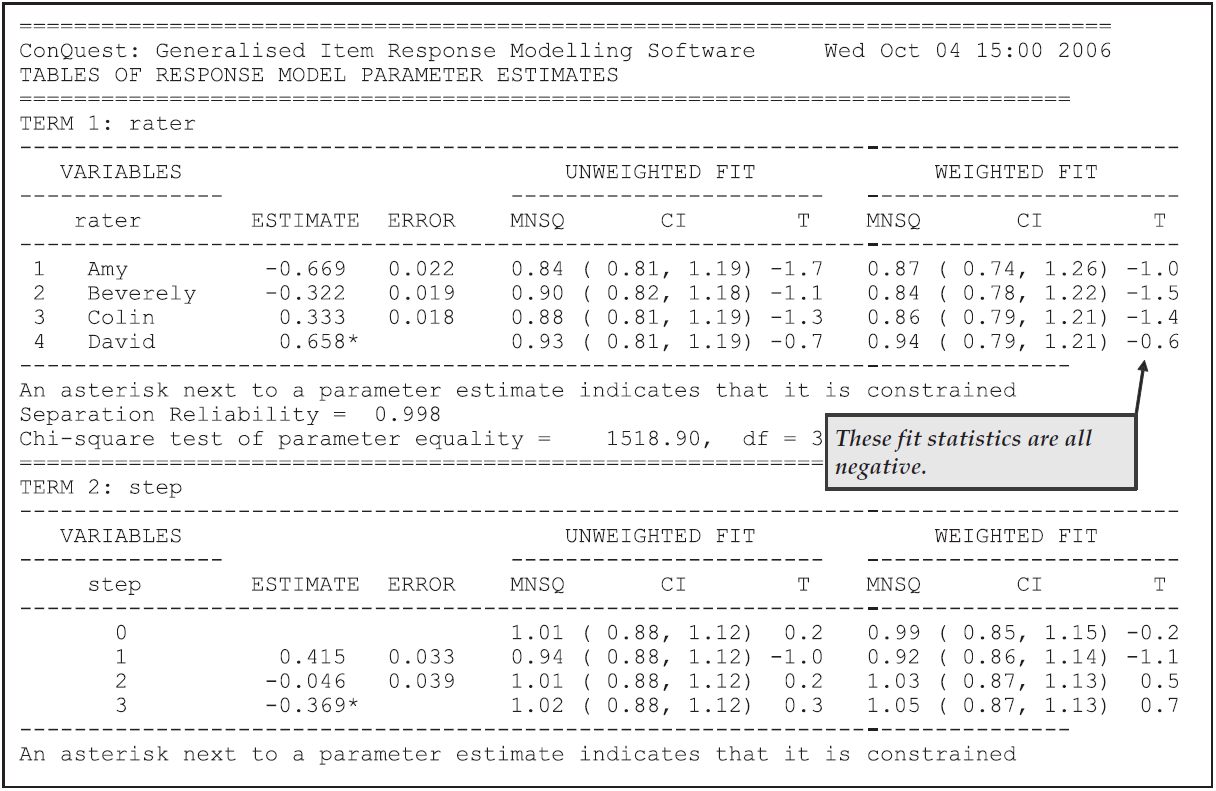

Figure 2.25 shows that, in the case of the rating scale model, the step parameter fits poorly, whereas in Figure 2.26 the fit statistics for the step parameters are generally small or less than their expected value (ie the t-values are negative). In both cases, the difficulty parameter for item 2 does not fit well. An examination of the text of this item in Figure 2.23 shows that perhaps the misfit of this item can be explained by the fact that it is slightly different to the other questions in that it focuses on the conditions under which a bilingual program should be started rather than on the conditions necessary for the success of a bilingual program. Thus, although overall the partial credit model fits better than the rating scale model as discussed previously, the persistence of misfit for the difficulty parameter for this item indicates that the inclusion of this item in the scale should be reconsidered.

Figure 2.25: Response Model Parameter Estimates for the Rating Scale Model

Figure 2.26: Response Model Parameter Estimates for the Partial Credit Model

2.3.3 Summary

In this section, ACER ConQuest has been used to fit partial credit and rating scale models. Some key points covered were:

- The

codesstatement can be used to provide a list of valid codes. - The

recodestatement is used to change the codes that are given in the response block (defined in theformatstatement) for the data file. - The number of response categories modelled by ACER ConQuest for each item is the number of unique codes (after recoding) for that item.

- Response categories and item scores are not the same thing.

- The

modelstatement can be used to fit different models to the same data. - The deviance statistic can be used to choose between models.

2.4 The Analysis of Rater Effects

The item response models, such as simple logistic, rating scale and partial credit, that have been illustrated in the previous two sections, assume that the observed responses result from the two-way interaction between the agents of measurement8 and the objects of measurement.9 With the increasing importance of performance assessment, Linacre (1994) recognised that the responses that are gathered in many contexts do not result from the interaction between an object and a single agent: the agent is often a composite of more fundamental subcomponents.10 Consider, for example, the assessment of writing, where a stimulus is presented to a student, the student prepares a piece of writing, and then a rater makes a judgment about the quality of the writing performance. Here, the object of measurement is clearly the student; but the agent is a combination of the rater who makes the judgment and the stimulus that serves as a prompt for the student’s writing. The response that is analysed by the item response model is influenced by the characteristics of the student, the characteristics of the stimulus, and the characteristics of the rater. Linacre (1994) would label this a three-faceted measurement context, the three facets being the student, the stimulus and the rater.

Using an extension of the partial credit model to this multifaceted context, Linacre (1994) and others have shown that item response models can be used to identify raters who are harsher or more lenient than others, who exhibit different patterns in the way they use rating schemes, and who make judgments that are inconsistent with judgments made by other raters. This section describes how ACER ConQuest can fit a multifaceted measurement model to analyse the characteristics of a set of 16 raters who have rated a set of writing tasks using two criteria.

2.4.1 a) Fitting a Multifaceted Model

2.4.1.1 Required files

The data that we are analysing are the ratings of 8296 Year 6 students’ responses to a single writing task. The data were gathered as part of a study reported in Congdon & McQueen (1997). Each of the 8296 students’ writing scripts was graded by two raters, randomly chosen from a set of 16 raters; and the second rating for each script was performed blind. The random allocation of scripts to the raters, in conjunction with the very large number of scripts, resulted in links between all raters being obtained. When assessing the scripts, each rater was required to provide two ratings, one labelled OP (overall performance) and the other TF (textual features).11 The rating of both the OP and TF was undertaken against a sixpoint scale, with the labels G, H, I, J, K and L used to indicate successively superior levels of performance. For a small number of scripts, ratings of this nature could not be made; and the code N was used to indicate this occurrence.

The files used in this sample analysis are:

| filename | content |

|---|---|

| ex3a.cqc | The command statements. |

| ex3_dat.txt | The data. |

| ex3a_shw.txt | The results of the multifaceted analysis. |

| ex3a_itn.txt | The results of the traditional item analyses. |

(The last two files are created when the command file is executed.)

The data were entered into the file ex3_dat.txt, using one line per student.

Rater identifiers (of two characters in width) for the first and second raters who rated the writing of each student are entered in columns 17 and 18 and columns 19 and 20, respectively.

Each of the two raters produced an OP and a TF rating for the script.

The OP and TF ratings made by the first rater have been entered in columns 21 and 22, and the OP and TF ratings made by the second rater have been entered in columns 25 and 26.

2.4.1.2 Syntax

ex3a.cqc is the command file used in this tutorial for fitting one possible multifaceted model to the data outlined above.

The command file is shown in the code box below, and the list underneath the code box analyzes each line of syntax.

ex3a.cqc:

Title Rater Effects Model One;

datafile ex3_dat.txt;

format rater 17-18 rater 19-20

responses 21-22 responses 25-26 ! criteria(2);

codes G,H,I,J,K,L;

score (G,H,I,J,K,L) (0,1,2,3,4,5);

labels 1 OP !criteria;

labels 2 TF !criteria;

model rater + criteria + step;

estimate!nodes=20;

show !estimates=latent >> results/ex3a_shw.txt;

itanal >> results/ex3a_itn.txt;Line 1

Gives a title for the analysis. The text supplied after thetitlecommand will appear on the top of any printed ACER ConQuest output.Line 2

Indicates the name and location of the data file.Lines 3-4

Multifaceted data can be entered into data sets in many ways. Here, two sets of ratings for each student have been included on each line in the data file, and explicit rater codes have been used to identify the raters. For each of the raters, there is a matching pair of ratings (one for OP and one for TF). The OP and TF ratings are implicitly identified by the columns in which the data are entered. The ACER ConQuestformatstatement is very flexible and can cater for many alternative data specifications. In thisformatstatement, you will notice thatrateris used twice. The first use indicates the column location of the rater code for the first rater, and the second use indicates the column location of the rater code for the second rater. This is followed by two variables indicating the location of the responses (referred to as response blocks). Each response block is two characters wide; and since the default width of a response is one column, each response block refers to two responses, an OP and a TF rating. The first response block (columns 21 and 22) will be associated with the first rater, and the second response block (columns 25 and 26) will be associated with the second rater.This

formatstatement also includes an option,criteria(2), which assigns the variable namecriteriato the two responses that are implicitly identified by each response block. If this option had been omitted, the default variable name for the responses would beitem.This

formatstatement spans two lines in the command file. Command statements can be 1023 characters in length and can cover any number of lines in a command file. The semi-colon (;) is the separator between statements, not the return or new line characters.Line 5

Thecodesstatement restricts the list of valid response codes to G, H, I, J, K, and L. All other responses will be treated as missing-response data.Line 6

Thescorestatement assigns score levels to each of the response categories. Here, the left side of thescoreargument shows the six valid codes defined by thecodesstatement, and the right side gives six matching scores. The six distinct codes on the left indicate that the item response model will model six categories for each item; the scores on the right are the scores that will be assigned to each category.NOTE: As discussed in the previous section, ACER ConQuest makes an important distinction between response categories and response levels (or scores). The number of item response categories that will be modelled by ACER ConQuest is determined by the number of unique codes that exist after all recodes have been performed. ACER ConQuest requires a score for each response category. This can be provided via the

scorestatement. Alternatively, if thescorestatement is omitted, ACER ConQuest will treat the recoded responses as numerical values and use them as scores. If the recoded responses are not numerical values, an error will be reported.Lines 7-8

In the previous sample analyses, variable labels were read from a file. Here thecriteriafacet contains only two levels (the OP and TF ratings), so the labels are given in the command file usinglabelscommand syntax. Theselabelsstatements have two arguments. The first argument indicates the level of the facet to which the label is to be assigned, and the second argument is the label for that level. The option gives the facet to which the label is being applied.Line 9

Themodelstatement here contains three terms;rater,criteriaandstep. Thismodelstatement indicates that the responses are to be modelled with three sets of parameters: a set of rater harshness parameters, a set of criteria difficulty parameters, and a set of parameters to describe the step structure of the responses.EXTENSION: The

modelstatement in this sample analysis includes main effects only. An interaction termrater*criteriacould be added to model variation in the difficulty of the criteria across the raters. Similarly, the model specifies a single step-structure for all rater and criteria combinations. Step structures that were common across the criteria but varied with raters could be modelled by using the termrater*step, step structures that were common across the raters but varied with criteria could be modelled by using the termcriteria*step, and step structures that varied with rater and criteria combinations could be modelled by using the termrater*criteria*step.Line 10

Theestimatestatement initiates the estimation of the item response model.Line 11

Theshowstatement produces a display of the item response model parameter estimates and saves them to the fileex3a_shw.txt. The optionestimates=latentrequests that the displays include an illustration of the latent ability distribution.Line 12

Theitanalstatement produces a display of the results of a traditional item analysis. As with theshowstatement, we have redirected the results to a file (in this case,ex3a_itn.txt).

2.4.1.3 Running the Multifaceted Sample Analysis

To run this sample analysis, start the GUI version of ACER ConQuest and open the control file ex3a.cqc.

Select Run\(\rightarrow\)Run All.

ACER ConQuest will begin executing the statements that are in the file ex3a.cqc;

and as they are executed, they will be echoed on the screen.

When ACER ConQuest reaches the estimate statement, it will begin fitting the

multifaceted model to the data; and as it does, it will report on the progress of

this estimation.

Due to the large size of this data file, ACER ConQuest will take some time to perform

this analysis.

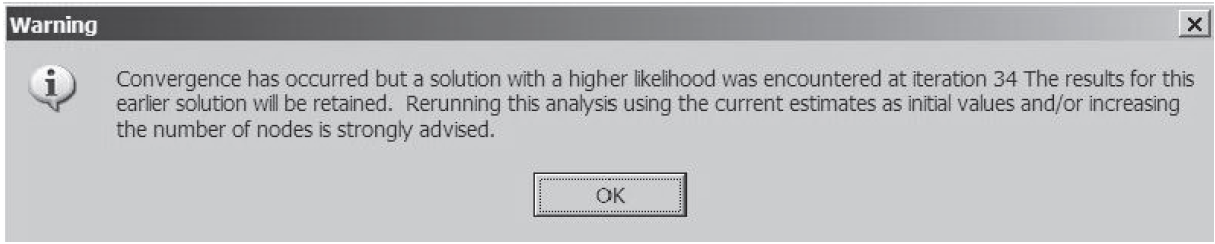

During estimation, ACER ConQuest reports a warning message:

As the scores of the writing test spread students far apart, as indicated by the estimated variance of the ability distribution (5.7 logits), this suggests that more nodes to cover the ability range are required in the estimation process.

To re-run ACER ConQuest with more nodes during the estimation, modify the estimate command as follows:

- Line 10

estimate ! nodes=30;

The default number of nodes is 15.

The above estimate command requests ACER ConQuest to use 30 nodes to cover the ability range.

Re-run ACER ConQuest by selecting Run\(\rightarrow\)Run All from the menu.

This time, ACER ConQuest no longer reports a warning for convergence problems.

After the estimation is complete, the two statements that produce output (show and itanal) will be processed.

The results of the show statement can be found in the file ex3a_shw.txt, and the results of the itanal statement can be found in the file ex3a_itn.txt.

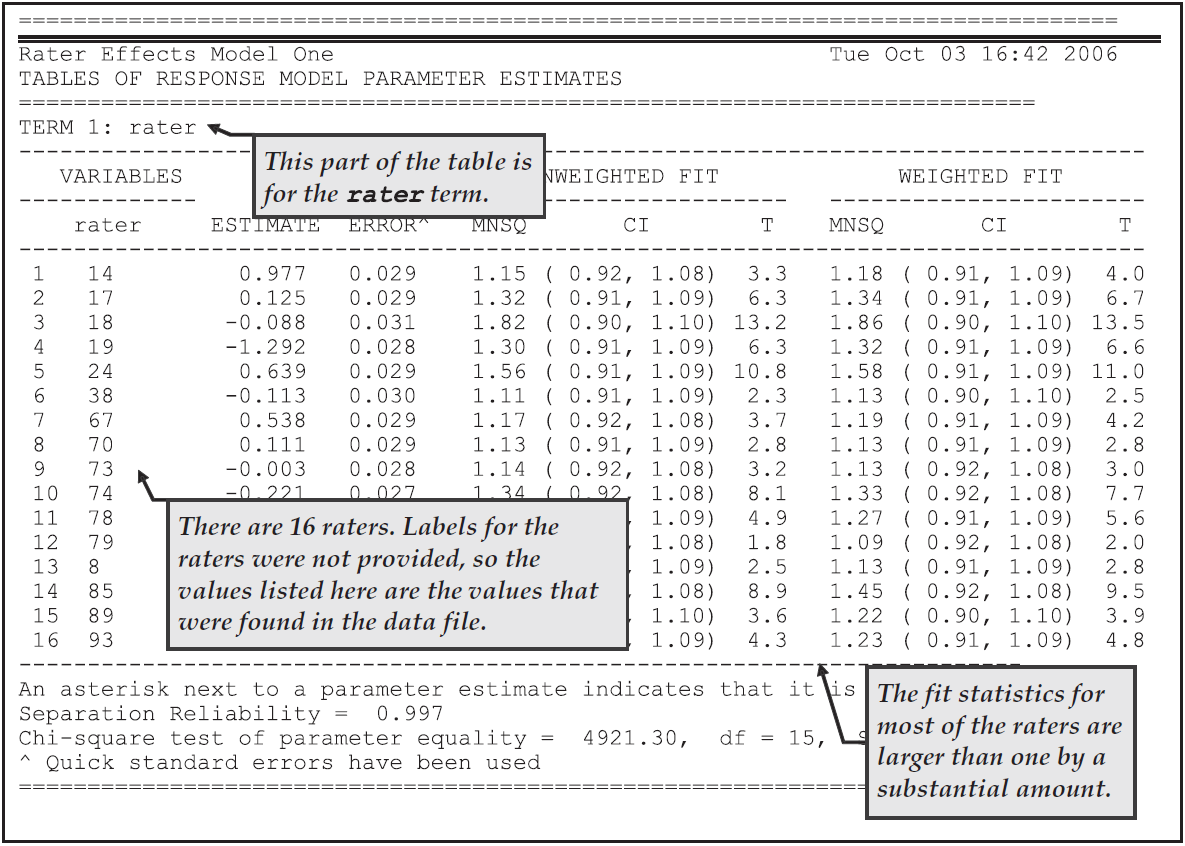

On this occasion, the show statement will produce six tables.

From Figure 2.27, we note that there were 16 raters and that the harshness ranges from a high of 0.977 logits for rater 14 (the first rater in the table) to a low of –1.292 for rater 19 (the fourth rater in the table).

This is a range of 2.123, which appears quite large when compared to the standard deviation of the latent distribution, which is estimated to be 2.37 (the square root of the variance that is reported in the third table (the population model) in ex3a_shw.txt).

That means that ignoring the influence of the harshness of the raters may move a student’s ability estimate by as much as one standard deviation of the latent distribution.

We also note that, with this model, the raters do not fit particularly well.

The high mean squares (and corresponding positive t values) suggest quite a bit of unmodelled noise in the ratings.

Figure 2.27: Parameter Estimates for Rater Harshness

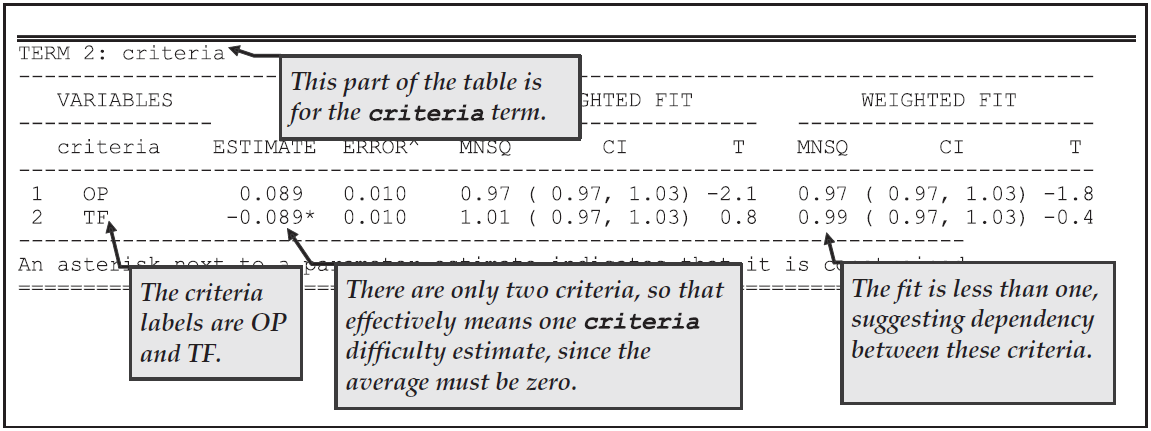

In Figure 2.28, we note that the OP and TF difficulty estimates are very similar, differing by just 0.178 logits. This difference is significant but very small. The mean square fit statistics are less than one, suggesting that the criteria could have unmodelled dependency.

Figure 2.28: Parameter Estimates for the Criteria

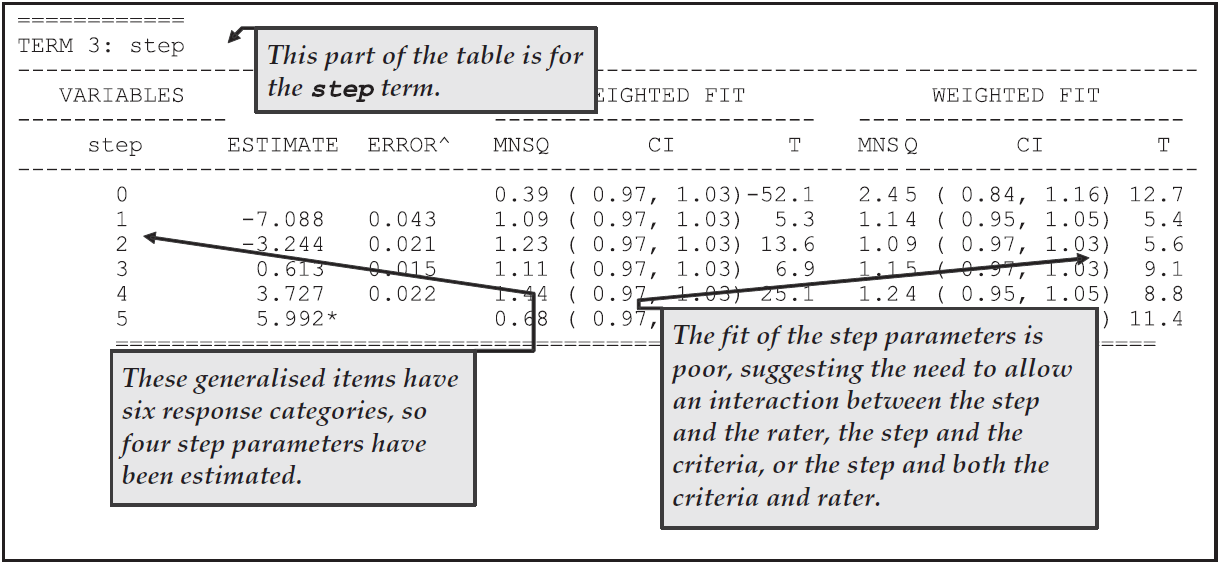

Figure 2.29 shows the step parameter estimates. The fit here is not very good, particularly for steps 1 and 4, suggesting that we should model step structures that interact with the facets. It is pleasing to note that the estimates for the steps themselves are ordered and well separated.

Figure 2.29: Parameter Estimates for the Steps

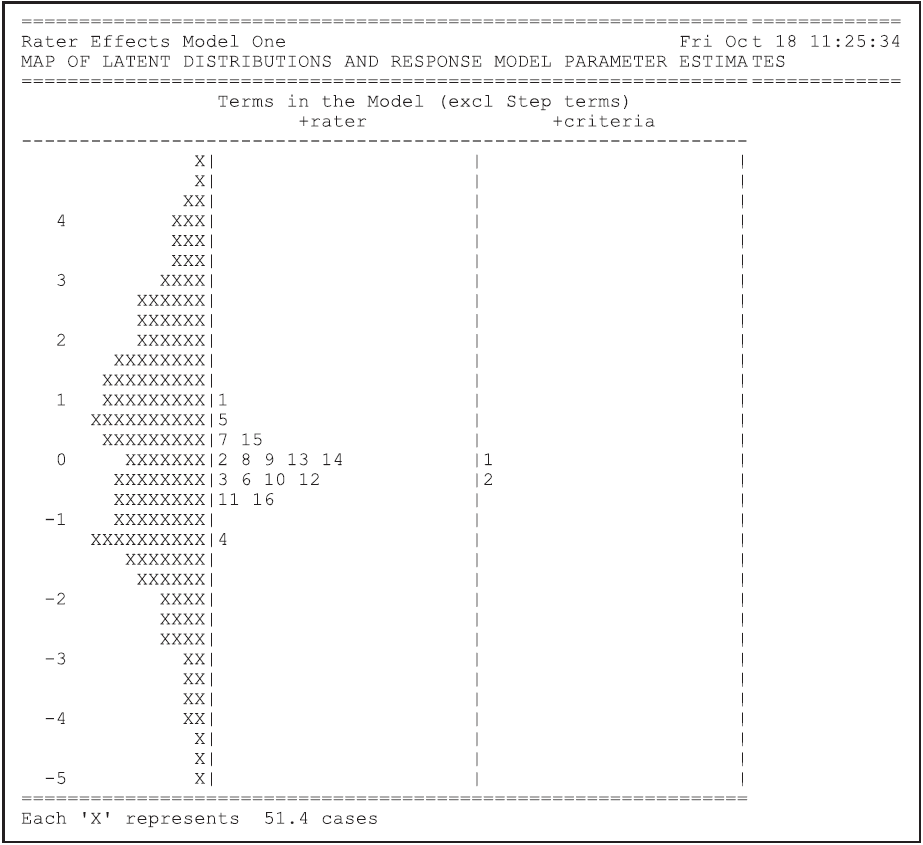

Figure 2.30 is the map of the parameter estimates that is provided in ex3a_shw.txt.

The map shows how the variation between raters in their harshness is large relative to the difference in the difficulty of the two tasks.

It also shows that the rater harshness estimates are well centred for the estimated ability distribution.

Figure 2.30: Map of the Parameter Estimates for the Multifaceted Model

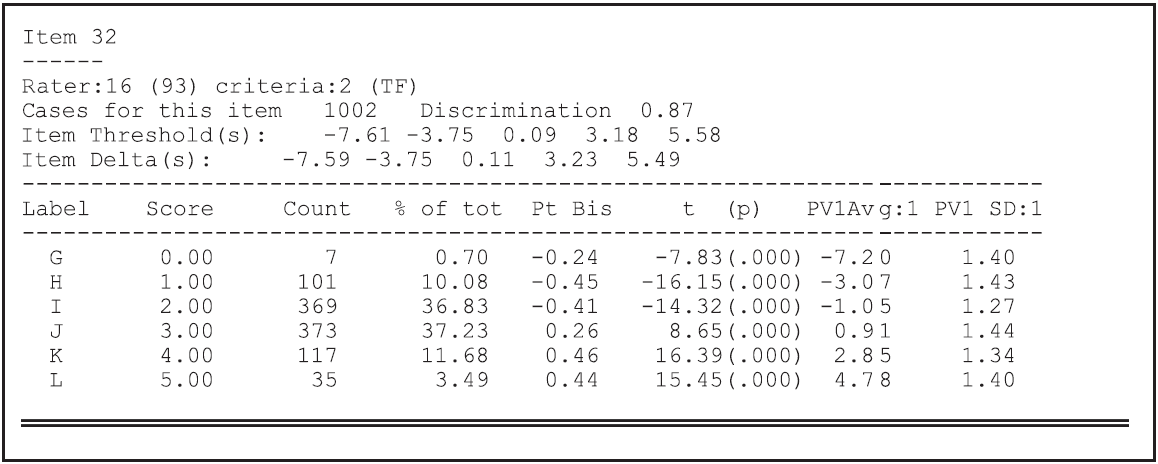

The file ex3a_itn.txt contains basic traditional statistics for this multifaceted analysis, extracts of which are shown in Figures 2.31 and 2.32.

In this analysis, the combination of the 16 raters and two criteria leads to 32 generalised items.12

The statistics for each of these generalised items is reported in the file ex3a_itn.txt.

Figure 2.31 shows the statistics for the last generalised item, which is the combination of rater 93 (the sixteenth rater) and criterion TF (the second criterion). For this generalised item, the total number of students rated by this rater on this criteria is shown (in this case, 1002); and an index of discrimination (the correlation between students’ scores on this item and their total score) is shown (in this case, 0.87). This discrimination index is very high, but it should be interpreted with care since only four generalised items are used to construct scores for each student. Thus, a student’s score on this generalised item contributes 25% to their total score.

For each response category of this generalised item, the number of observed responses is reported, both as a count and as a percentage of the total number of responses to this generalised item. The point-biserial correlations that are reported for each category are computed by constructing a set of dichotomous indicator variables, one for each category. If a student’s response is allocated to a category for an item, then the indicator variable for that category will be coded to 1; if the student’s response is not in that category, it will be coded to 0. The point biserial is then the correlation between the indicator variable and the student’s total score. It is desirable for the point biserials to be ordered in a fashion that is consistent with the category scores. However, sometimes point biserials are not ordered when a very small or a very large proportion of the item responses are in one category. This can be seen in Figure 2.31, where only seven of the 1002 cases have responses in category G.

Figure 2.31: Extract from the Item Analysis for the Multifaceted Analysis

The itanal statement’s output concludes with a set of summary statistics (Figure 2.32).

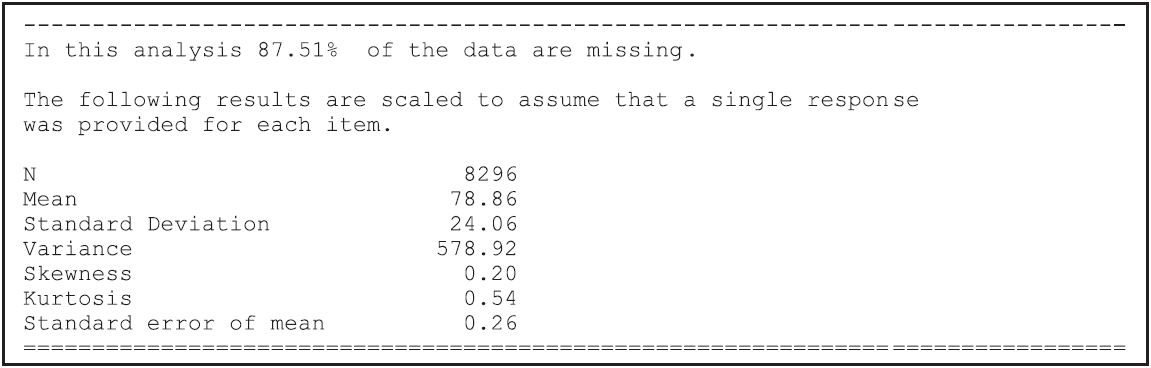

For the mean, standard deviation, variance and standard error of the mean, the scores have been scaled up so that they are reported on a scale consistent with students responding to all of the generalised items.

NOTE: Traditional methods are not well suited to multifaceted measurement. If more than 10% of the response data is missing — either at random or by design (as will often be the case in multifaceted designs) — the test reliability and standard error of measurement will not be computed.

Figure 2.32: Summary Statistics for the Multifaceted Analysis

2.4.2 b) The Multifaceted Analysis Restricted to One Criterion

In analysing these data with the multifaceted model, the fit statistics have suggested a lack of independence between the raters’ judgments for the two criteria and evidence of unmodelled noise in the raters’ behaviour. Here, therefore, an additional analysis is undertaken that adds some support to the hypothesis that the raters’ OP and TF judgments are not independent. In this second analysis, only one criterion (OP) is analysed.

2.4.2.1 Required files

The files that we use in this sample analysis are:

| filename | content |

|---|---|

| ex3b.cqc | The command statements. |

| ex3_dat.txt | The data. |

| ex3b_shw.txt | The results of the single-criterion multifaceted analysis. |

(The last file is created when the command file is executed.)

2.4.2.2 Syntax

ex3b.cqc is the command file used in this tutorial for fitting the multifaceted model to our data, but using only one of the criteria.

The code listed here is very similar to ex3a.cqc, the command file from the previous analysis (as shown in section 2.4.1.2).

So only the differences will be discussed in the list underneath the code box.

ex3b.cqc:

Title Rater Effects Model Two;

datafile ex3_dat.txt;

format rater 17-18 rater 19-20

responses 21 responses 25 ! criteria(1);

codes G,H,I,J,K,L;

score (G,H,I,J,K,L) (0,1,2,3,4,5);

labels 1 OP !criteria;

/*labels 2 TF !criteria;*/

model rater + criteria + step;

estimate !nodes=20;

show ! estimates=latent >> Results/ex3b_shw.txt;Lines 1-2

As in the command file of the previous analysis,ex3b.cqc.Line 3-4

The response blocks in theformatstatement now refer to one column only, the column that contains the OP criteria for each rater. Note that in the option we now indicate that there is just one criterion in each response block.Lines 5-7

As in the command file of the previous analysis,ex3b.cqc.Line 8

Thelabelsstatement for the TF criterion is now unnecessary, so we have enclosed it inside comment markers (/*and*/).Lines 9-11

As for lines 9, 10, and 11 inex3a.cqc, except theshowstatement output is directed to a different file,ex3b_shw.txt.

2.4.2.3 Running the Multifaceted Model for One Criterion

To run this sample analysis, start the GUI version of ACER ConQuest and open the control file ex3b.cqc.

Select Run\(\rightarrow\)Run All.

ACER ConQuest will begin executing the statements that are in the file ex3b.cqc; and as they are executed, they will be echoed on the screen.

When ACER ConQuest reaches the estimate statement, it will begin fitting the multifaceted model to the data; and as it does so, it will report on the progress of the estimation.

Due to the large size of this data file, ACER ConQuest will take some time to perform this analysis.

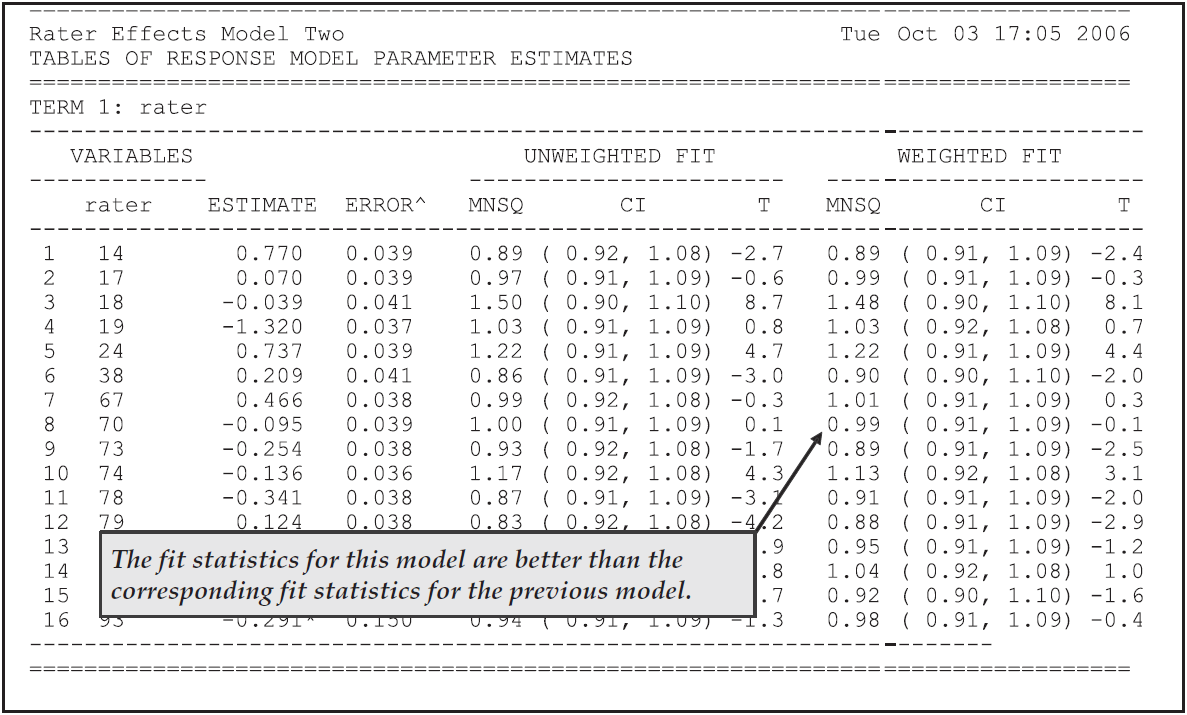

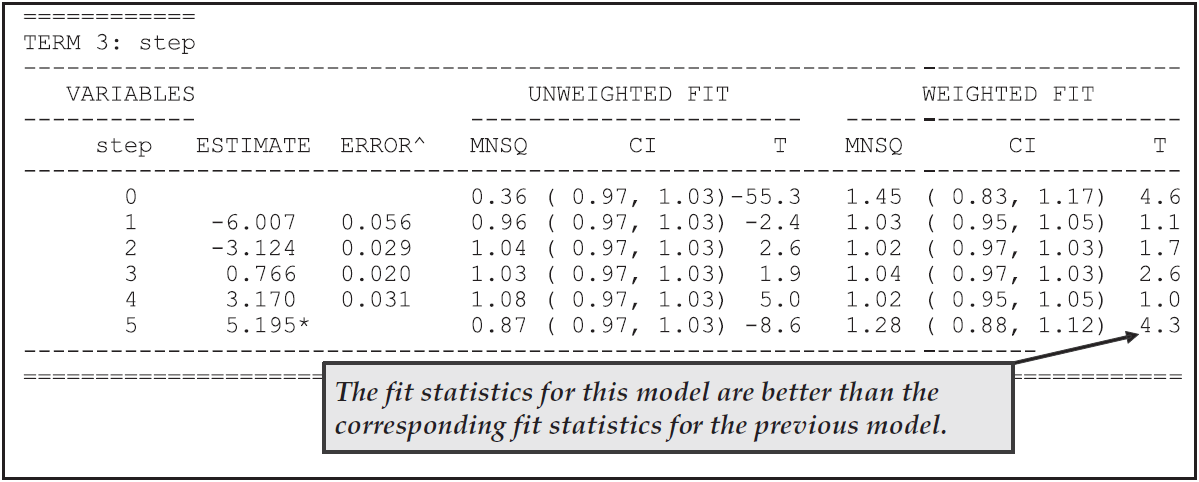

In Figures 2.33 and 2.34, the rater and step parameter estimates are given for this model from the second table in the file ex3b_shw.txt.

The part of the table that reports on the criteria facet is not shown here, since there is only one criterion and it must therefore have an estimate of zero.

In fact, the inclusion of the criteria term in the model statement was redundant.

A comparison of Figures 2.33 and 2.34 with Figures 2.27, 2.28, and 2.29 shows that this second model leads to an improved fit for both the rater and step parameters.

It would appear that the apparent noisy behaviour of the raters, as illustrated in Figure 2.27, is a result of the redundancy in the two criteria and is not evident if a single criterion is analysed.

The fit statistics for the steps are similarly improved, suggesting either that the redundancy between the criteria was influencing the step fits or that there is a rater by criteria interaction.

Figure 2.33: Rater Harshness Parameter Estimates

Figure 2.34: Step Parameter Estimates

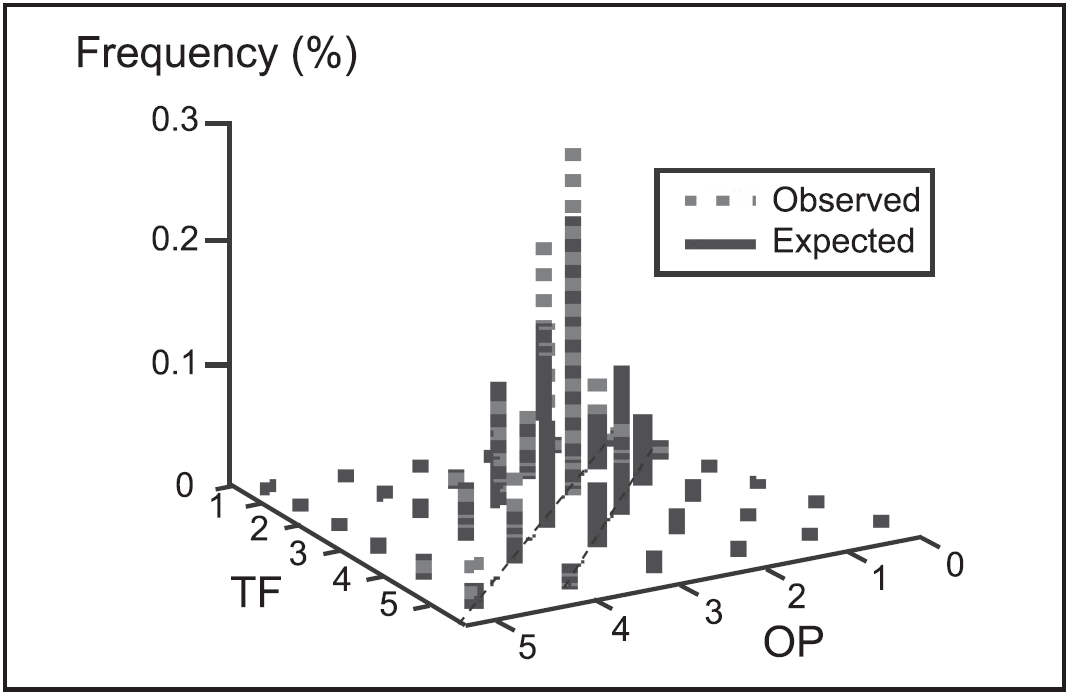

The dependency possibility can be further explored by using the model that assumed independence (the first sample analysis in this section) to calculate the expected frequencies of various pairs of OP and TF ratings and then comparing the expected frequencies with the observed frequencies of those pairs. Figure 2.35 shows a two-dimensional frequency plot of the observed and expected number of scores for pairs of values of TF and OP given by rater 85. The diagonal line shows the points where the TF and OP scores are equal. It is noted that the observed frequencies are much higher than the expected frequencies along this diagonal, indicating that rater 85 tends to give more identical scores for TF and OP than one would expect. Similar patterns are also observed for other raters. It appears that a model that takes account of the severity of the rater and the difficulty of the criteria does not fit these data well.

Figure 2.35: Observed Versus Expected Frequencies for Pairs of OP and TF Scores

WARNING: In section 2.3, the deviance statistic was used to compare the fit of a rating scale and partial credit model. It is not appropriate to use the deviance statistic to compare the fit of the two models fitted in this section. The deviance statistic can only be used when one model is a submodel of the other. For this to occur, the models must result in response patterns that are the same length, and each of the items must have the same number of response categories in each of the analyses (which was not the case here).

2.4.3 Summary

In this section, we have seen how to fit multifaceted models with ACER ConQuest. Our sample analysis has used only one additional facet (rater), but ACER ConQuest can analyse up to 50 facets.

Some key points we have covered in this section are:

- ACER ConQuest can be used to fit multifaceted item response models easily.

- The

formatstatement is very flexible and can deal with many of the alternative ways that multifaceted data can be formatted (see the command reference in Section 4 for more examples). - A

scorestatement can be used to assign scores to the response categories that are modelled. - We have reiterated the point that response categories and item scores are not the same thing.

- Fit statistics can be used to suggest alternative models that might be fitted to the data.

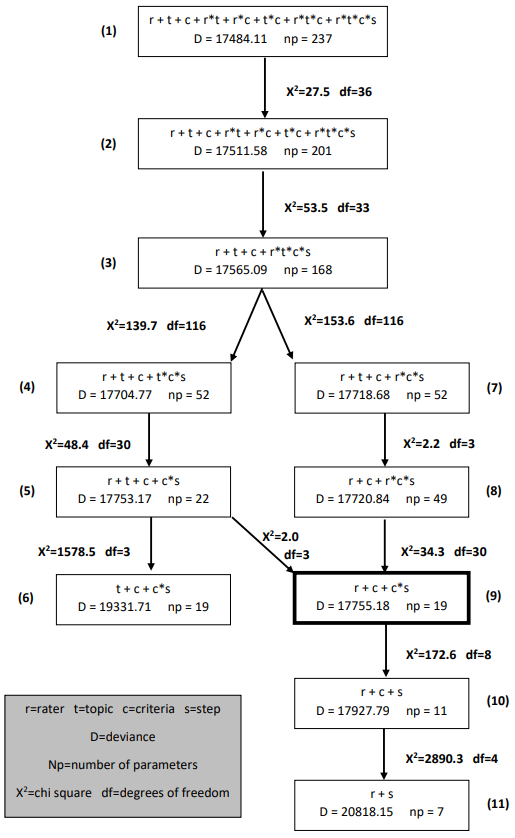

2.5 Many Facets and Hierarchical Model Testing

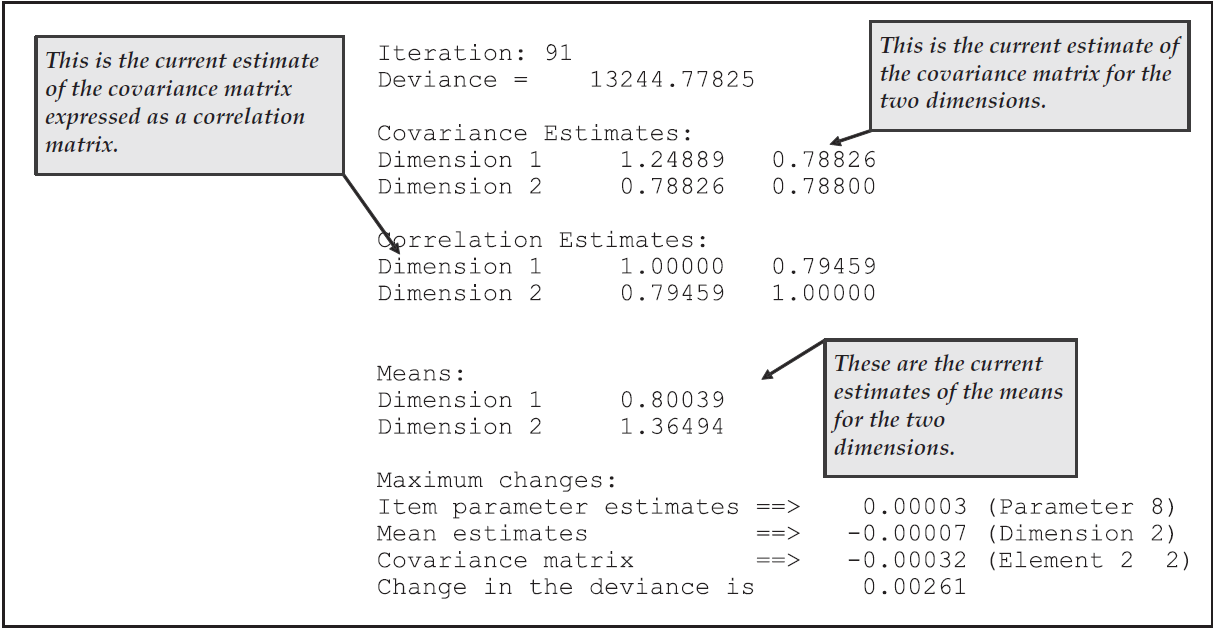

In section 2.4, the notion of additional measurement facets is introduced, and data was analysed with one additional facet, a rater facet. The number of facets that can be used with multifaceted measurement models is theoretically unlimited, although, as shall be seen in this section, the addition of each new facet adds considerably to the range of models that need to be considered.13 A number of techniques are available for choosing between alternative models for multifaceted data. First, the deviance statistic of alternative models can be compared to provide a formal statistical test of the relative fit of models. Second, the fit statistics for the parameter estimates can be used, as was done in the previous section. Third, the estimated values of the parameters associated with a term in a model can be examined to see if that term is necessary. In this section, we illustrate these strategies for choosing between the many alternative multifaceted models that can be applied to data that have more than two facets.

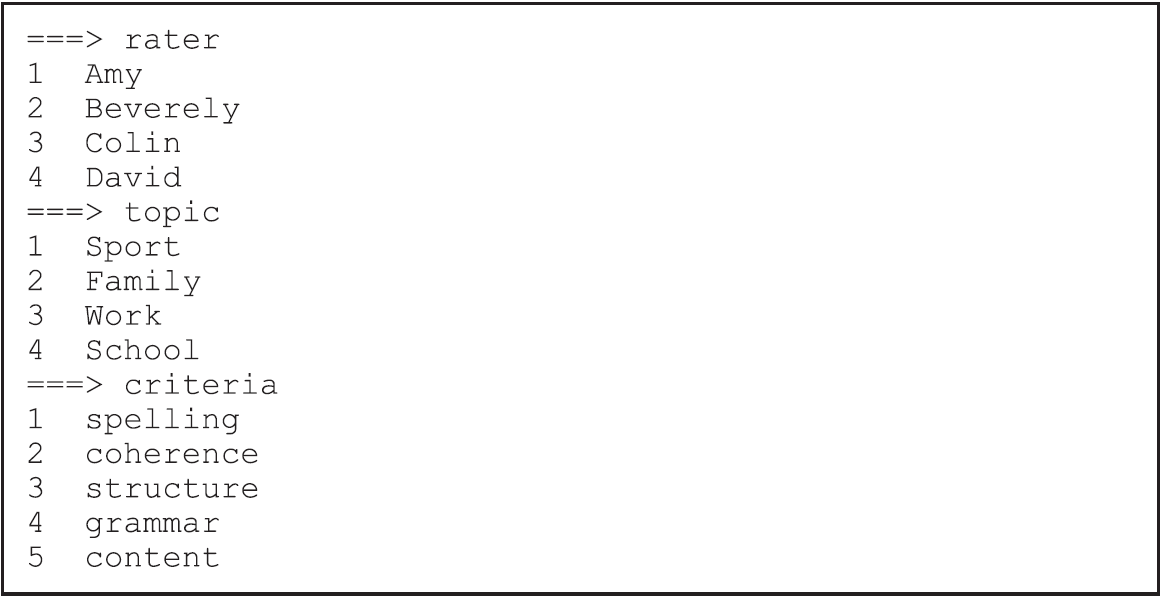

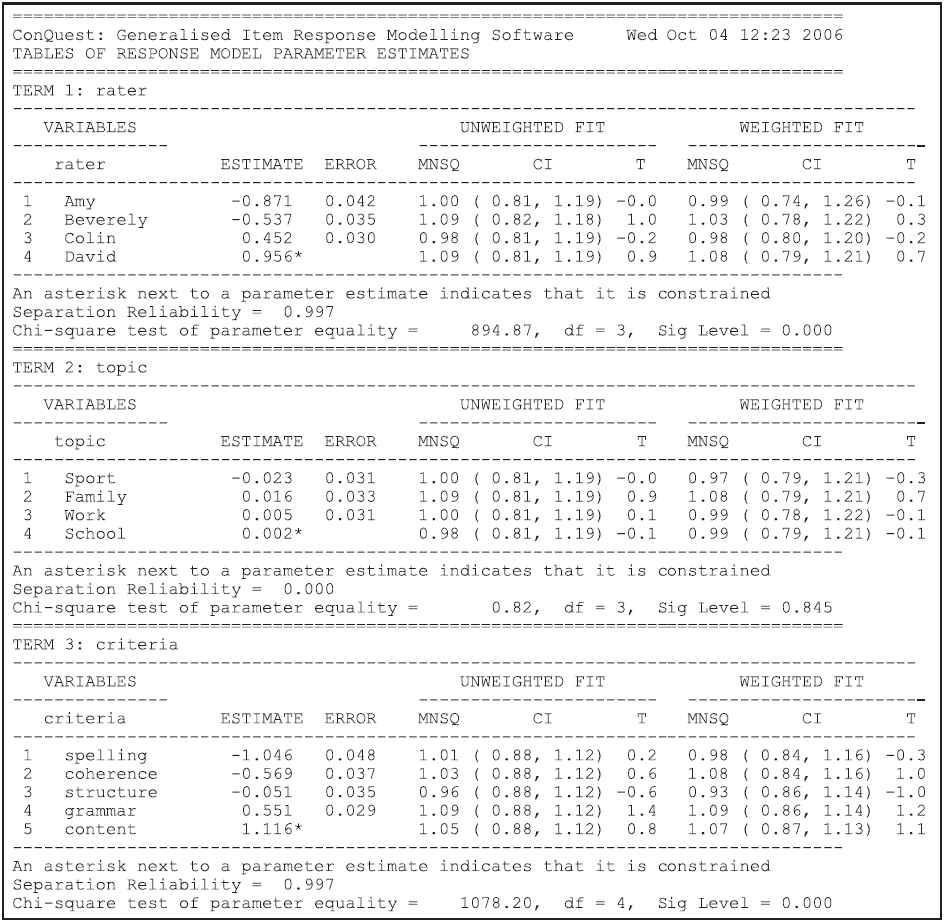

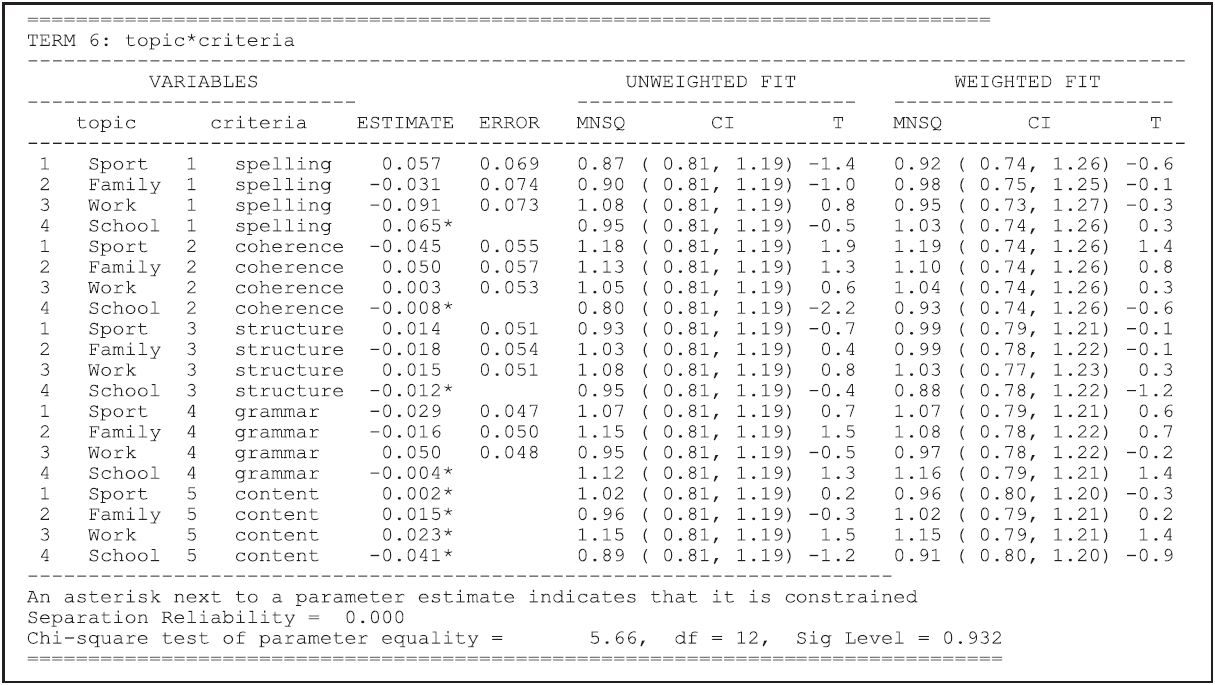

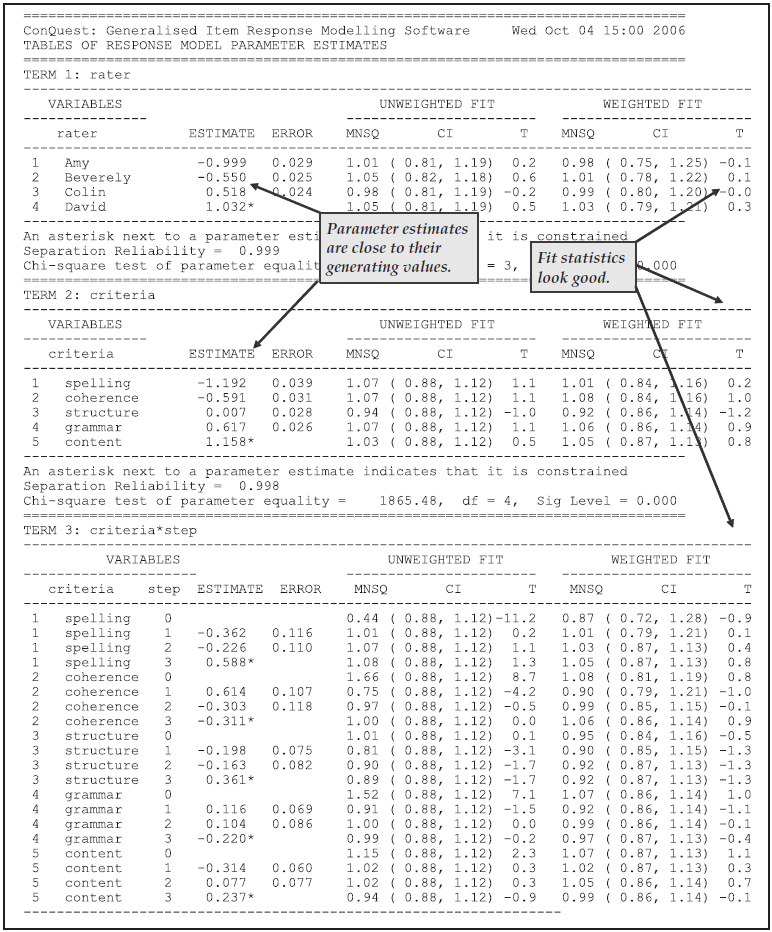

The data that we are analysing in this section are simulated three-faceted data.14

The data were simulated to reflect an assessment context in which 500 students have each provided written responses to two out of a total of four writing topics.

Each of these tasks was then rated by two out of four raters against five assessment criteria.

For each of the five criteria, a four-point rating scale was used with codes 0, 1, 2 and 3.

This results in four sets of ratings (two essay topics by two raters’ judgments) against the five criteria for each of the 500 students.

In generating the data, two raters and two topics were randomly assigned to the students, and the model used assumed that the raters differed in harshness, that the criteria differed in difficulty, and that the rating structure varied across the criteria.

The topics were assumed to be of equal difficulty; there were no interactions between the topic, criteria and rater facets; and the step structure did not vary with rater or topic.

The files used in this sample analysis are:

| filename | content |

|---|---|

| ex4a.cqc | The command statements used for the first analysis. |

| ex4_dat.txt | The data. |

| ex4_lab.txt | The variable labels for the facet elements. |

| ex4a_prm.txt | Initial values for the item parameter estimates. |

| ex4a_reg.txt | Initial values for the regression parameter estimates. |

| ex4a_cov.txt | Initial values for the variance parameter estimates. |

| ex4a_shw.txt | Selected results of the first analysis. |

| ex4b.cqc | The command statements used for the second analysis. |

| ex4b_1_shw.txt and ex4b_2_shw.txt | Selected results of the second analysis. |

| ex4c.R | The R command file used for the third analysis. |

| ex4c.cqc | The ACER ConQuest command statements used for the third analysis. |

| ex4c_1_shw.txt through ex4c_11_shw.txt | Selected results of the third analysis. |

(The _prm.txt, _reg.txt, _cov.txt, and _shw.txt files are created when the command file is executed.)

The data were entered into the file ex4_dat.txt using four lines per student, one for each rater and topic combination.

For each of the lines, column 1 contains a rater code, column 3 contains a topic code and columns 5 through 9 contain the ratings of the five criteria given by the matching rater and topic combination.

2.5.1 a) Fitting a General Three-Faceted Model

In the first analysis, we fit a model that assumes main effects for all facets, the set of three two-way interactions, and a step structure that varies with topic, item and rater.

2.5.1.1 Syntax

ex4a.cqc is the command file used in the first analysis to fit one possible multifaceted model to these data.

The code box below shows the contents of the file, and the list underneath the code box explains each line of syntax.

ex4a.cqc:

datafile ex4_dat.txt;

format rater 1 topic 3 responses 5-9 /

rater 1 topic 3 responses 5-9 /

rater 1 topic 3 responses 5-9 /

rater 1 topic 3 responses 5-9 ! criteria(5);

label << ex4_lab.txt;

set update=yes,warning=no;

model rater + topic + criteria + rater*topic + rater*criteria +

topic*criteria + rater*topic*criteria*step;

export parameters >> Results/ex4a_prm.txt;

export reg >> Results/ex4a_reg.txt;

export cov >> Results/ex4a_cov.txt;

estimate ! nodes=10, stderr=empirical;

show parameters !estimates=latent,tables=1:2:4>> Results/ex4a_shw.txt;Line 1

Indicates the name and location of the data file.Lines 2-5

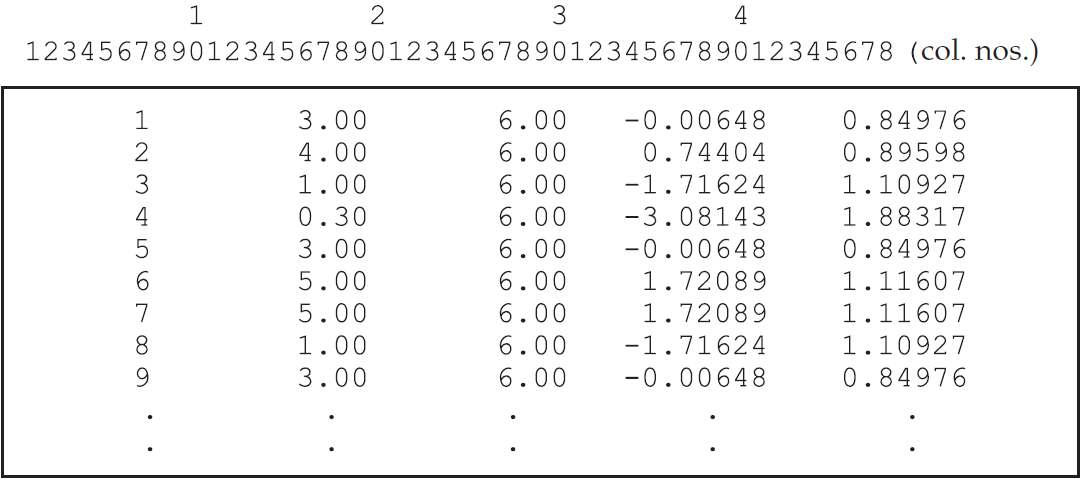

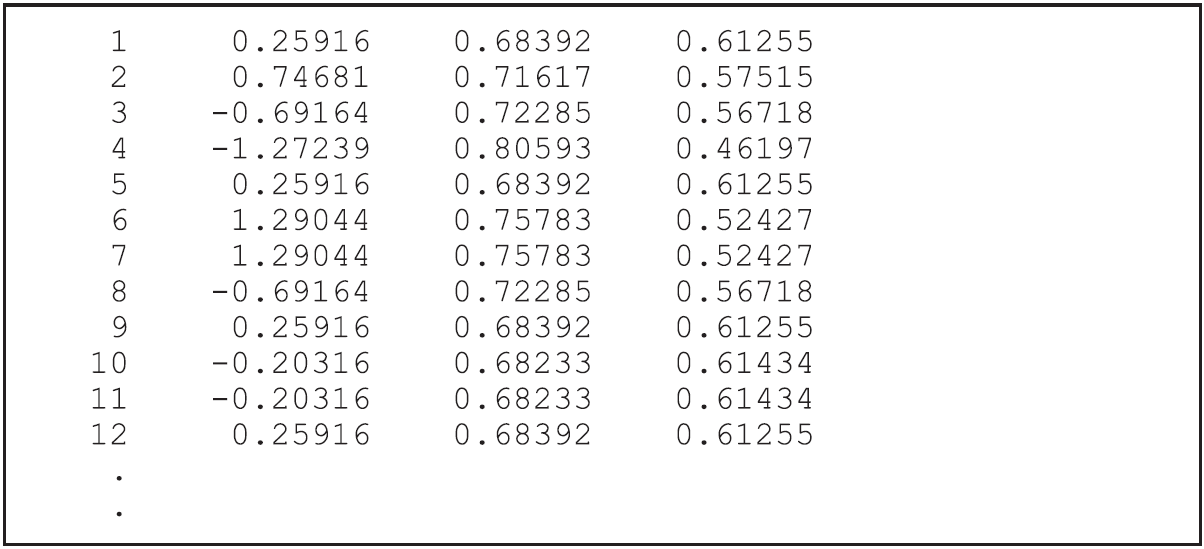

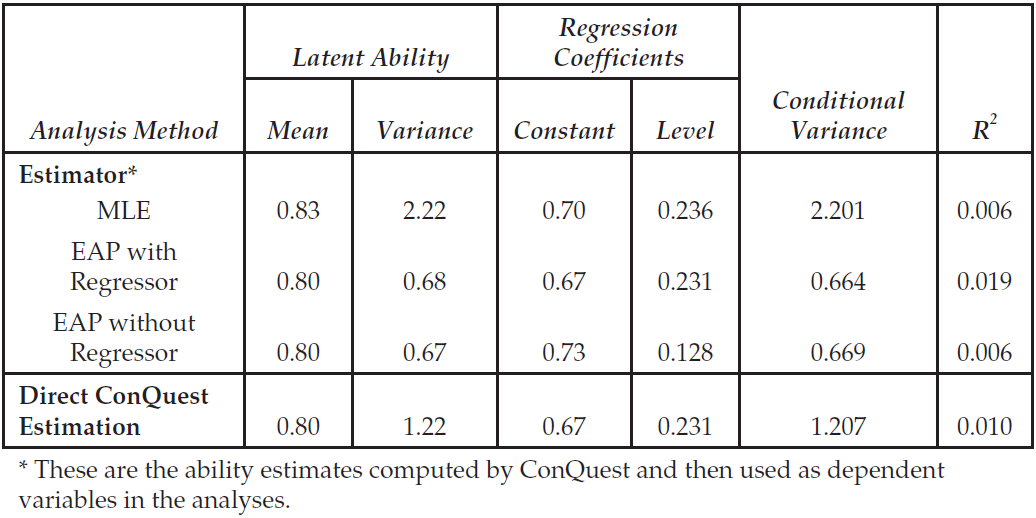

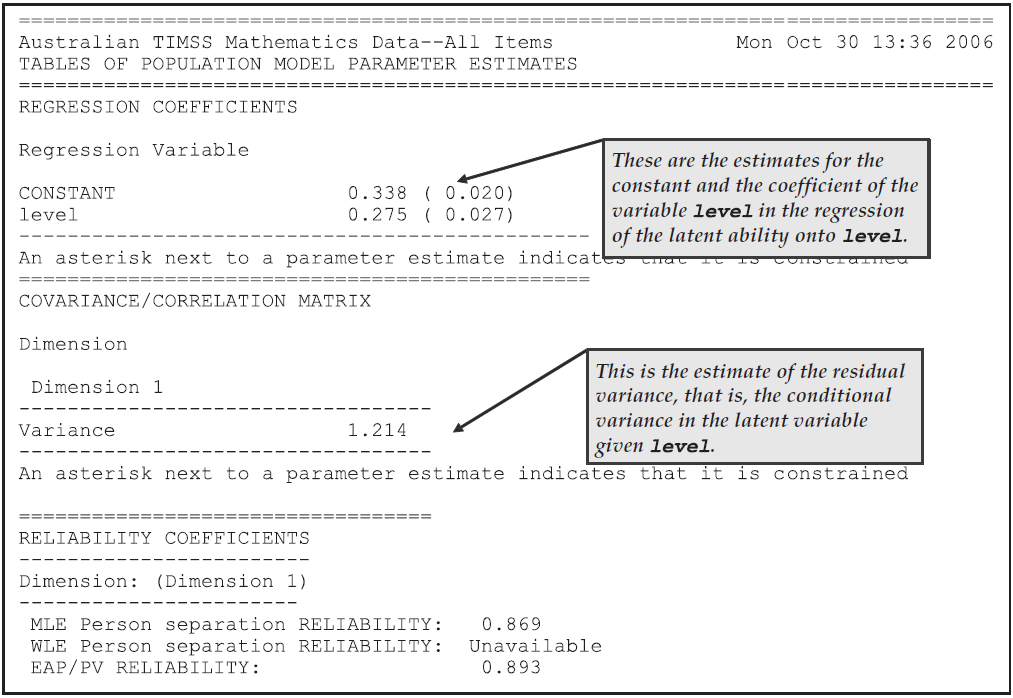

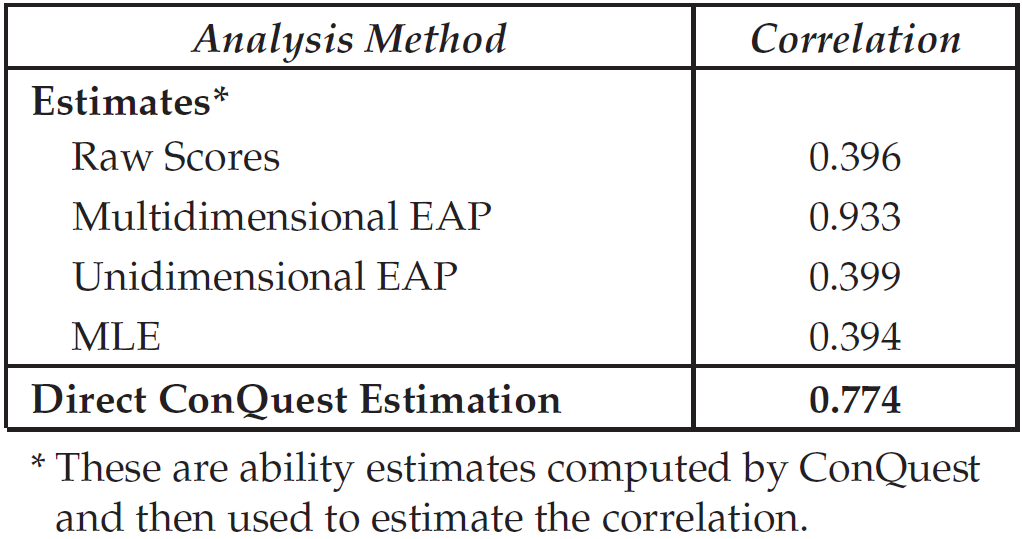

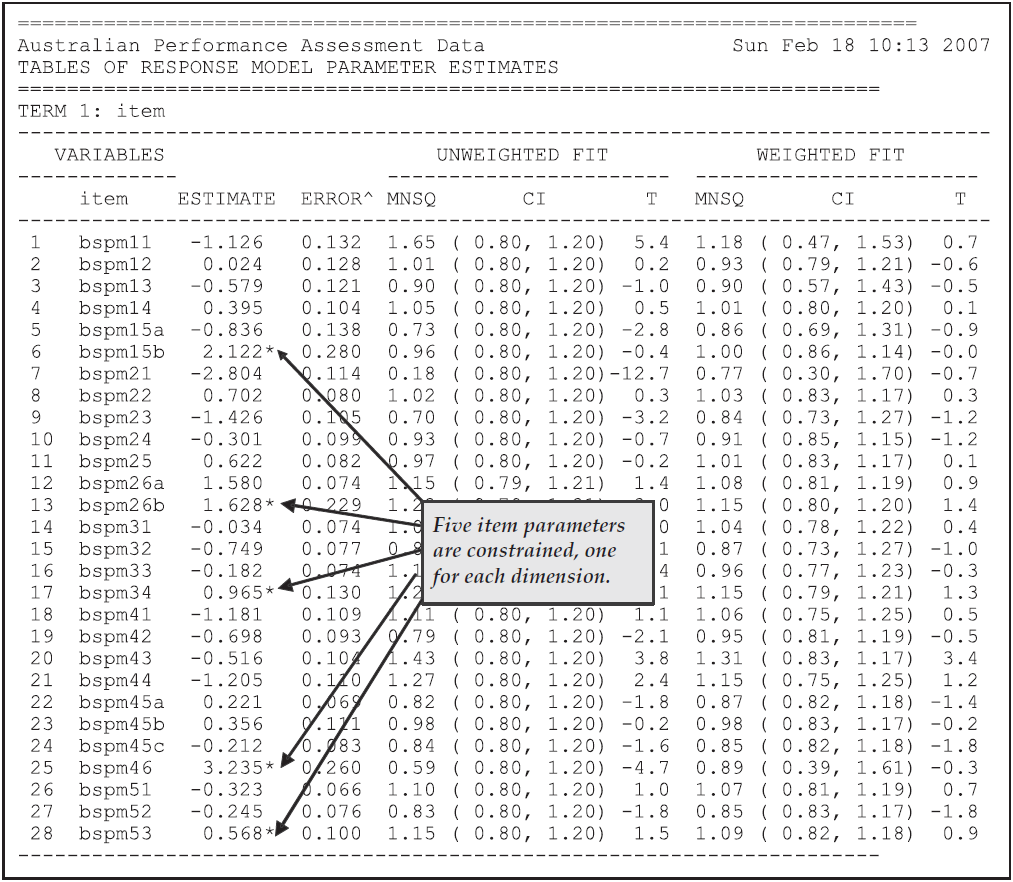

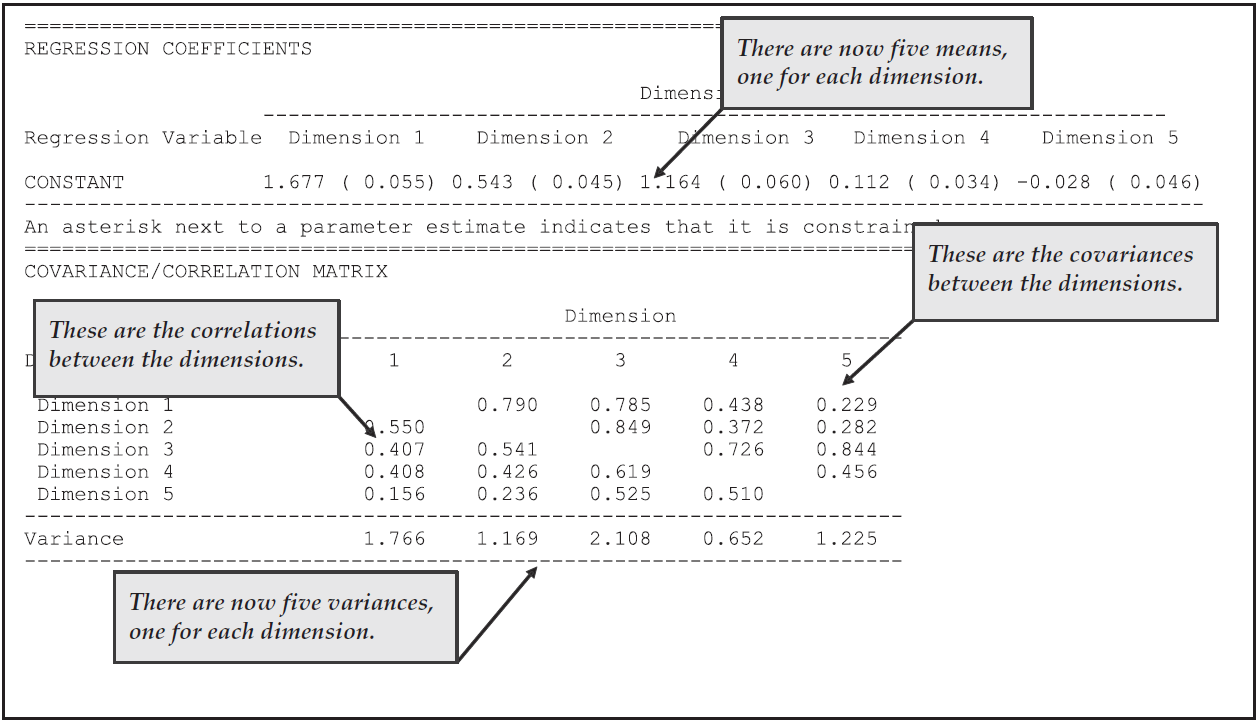

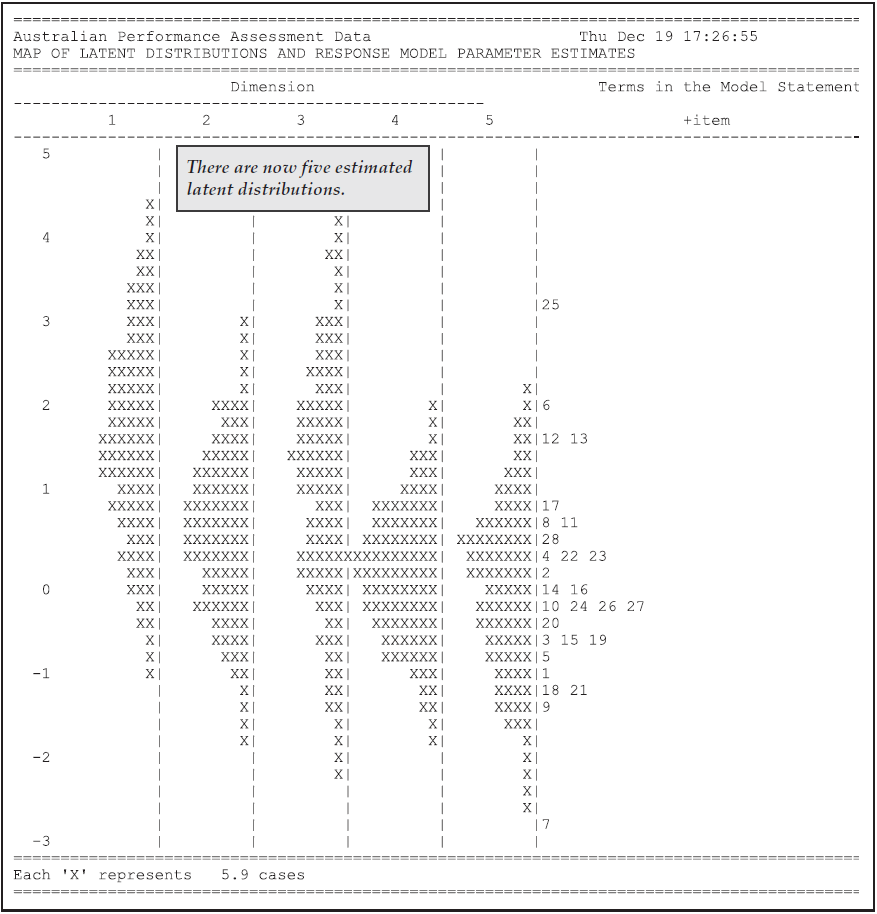

Multifaceted data can be entered into data sets in many ways. The ACER ConQuestformatstatement is very flexible and can cater for many alternative data specifications. Here the data are spread over four lines for each student. Each line contains a rater code, a topic code and five responses. The slash (/) character is used to indicate that the following data should be read from the next line of the data file. The multiple use of the termsrater,topicandresponsesallows us to read the multiple sets of ratings for each student. In this case, the termrateris used four times,topicfour times andresponsesfour times. Thus, the rater and topic indicated on the first line for each case will be associated with the responses on the first line, the rater and topic on the second line will be associated with the responses on the second line, and so on. More generally, if variables are repeated in aformatstatement, the n-th occurrence ofresponseswill be associated with the n-th occurrence of any other variable, or the n-th occurrence ofresponseswill be matched with the highest occurrence of any other variable if n is greater than the number of occurrences of that variable.This